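Machine Learning

Ensemble Learning

Ensemble learning is a technique that creates multiple models and then combines them to produce improved results. Ensemble learning usually produces more accurate solutions than a single model would.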

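- Ensemble learning methods apply to both regression and classification problems.

- Ensemble learning for regression creates multiple regressors, that is, multiple regression models such as linear regression, polynomial regression, etc.

- Ensemble learning for classification creates multiple classifiers, that is, multiple classification models such as logistic regression, decision trees, KNN, SVM, etc.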
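As a rough illustration of how the outputs of several models can be combined, the sketch below averages the outputs of regressors and takes the most common label among classifiers. Averaging and majority voting are only two possible combination rules, chosen here for simplicity. The function names and the sample outputs are hypothetical and do not come from any particular library.

```python
from collections import Counter
from statistics import mean


def combine_regression(predictions):
    """Average the numeric predictions of several regressors."""
    return mean(predictions)


def combine_classification(predictions):
    """Return the most common label among several classifiers' predictions."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical outputs of three base models for a single input.
regressor_outputs = [2.0, 3.0, 4.0]
classifier_outputs = ["spam", "ham", "spam"]

print(combine_regression(regressor_outputs))       # 3.0
print(combine_classification(classifier_outputs))  # spam
```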

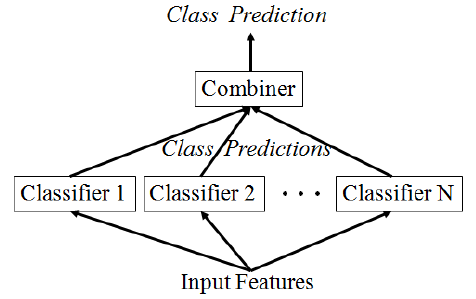

Figure 1: Ensemble learning view

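Which components can be combined?

- Different learning algorithms

- The same learning algorithm trained in different ways

- The same learning algorithm trained the same way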

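Ensemble learning has two steps:

First, multiple machine learning models are generated using the same or different machine learning algorithms. These models are called "base models". Second, the final prediction is made on the basis of the predictions of these base models.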
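The two steps can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the base models are one-feature threshold classifiers (decision stumps), each trained on a different feature, and the combination rule is a majority vote. The helper names `train_stump` and `ensemble_predict` and the toy data are made up for illustration.

```python
from collections import Counter


def train_stump(X, y, feature):
    """Fit a one-feature threshold classifier (a decision stump).

    Tries each observed value as a threshold and keeps the one with the
    fewest training errors. Predicts 1 when row[feature] >= threshold.
    """
    best_threshold, best_errors = None, len(y) + 1
    for threshold in sorted({row[feature] for row in X}):
        errors = sum((row[feature] >= threshold) != bool(label)
                     for row, label in zip(X, y))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return lambda row: int(row[feature] >= best_threshold)


# Toy data (made up for illustration): three features, binary label.
X = [[1, 5, 2], [2, 6, 1], [3, 1, 3], [6, 7, 7], [7, 8, 6], [8, 2, 8]]
y = [0, 0, 0, 1, 1, 1]

# Step 1: generate the base models, one stump per feature.
base_models = [train_stump(X, y, feature) for feature in range(3)]


# Step 2: predict by majority vote over the base models.
def ensemble_predict(row):
    votes = [model(row) for model in base_models]
    return Counter(votes).most_common(1)[0][0]


print([ensemble_predict(row) for row in X])  # [0, 0, 0, 1, 1, 1]
```

On this toy data the stump trained on the second feature misclassifies the last row by itself, while the majority vote of all three stumps classifies every row correctly.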

Techniques/Methods in ensemble learning

Voting, Error-Correcting Output Codes, Bagging (e.g., Random Forest), Boosting (e.g., AdaBoost), and Stacking.
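To make one of these techniques concrete, here is a minimal sketch of bagging (bootstrap aggregating): each base model is trained on a sample drawn with replacement from the training data, and the predictions are combined by majority vote. It is not a random forest. The base learner is a simple nearest-centroid classifier chosen for brevity, and the function names, toy data, and seed are all assumptions made for illustration.

```python
import random
from collections import Counter
from statistics import mean


def train_centroid(sample):
    """Base learner: nearest-centroid classifier for 1-D inputs."""
    by_label = {}
    for x, label in sample:
        by_label.setdefault(label, []).append(x)
    centroids = {label: mean(xs) for label, xs in by_label.items()}
    return lambda x: min(centroids, key=lambda label: abs(x - centroids[label]))


def bagging(data, n_models, rng):
    """Train n_models base learners, each on a bootstrap sample of data."""
    models = []
    for _ in range(n_models):
        sample = [rng.choice(data) for _ in data]  # draw with replacement
        models.append(train_centroid(sample))
    return models


def vote(models, x):
    """Majority vote over the base learners."""
    return Counter(model(x) for model in models).most_common(1)[0][0]


# Toy 1-D data (made up): small values are class 0, large values are class 1.
data = [(1.0, 0), (1.5, 0), (2.0, 0), (2.5, 0),
        (8.0, 1), (8.5, 1), (9.0, 1), (9.5, 1)]
models = bagging(data, n_models=25, rng=random.Random(0))

print(vote(models, 1.2), vote(models, 9.1))
```

Because the two classes are far apart, a point near 1 should be voted into class 0 and a point near 9 into class 1. Using an odd number of models avoids ties in a two-class vote.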
