机器学习

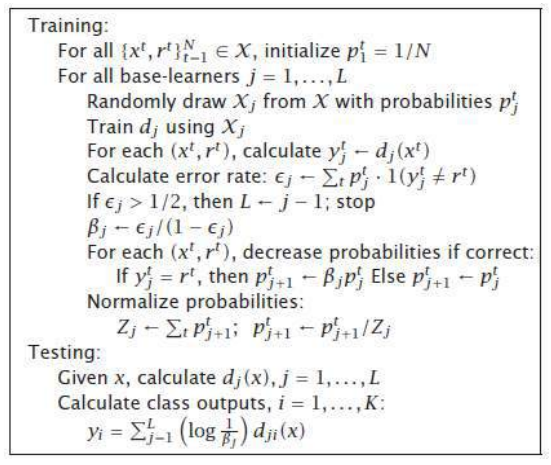

Freund 和 Schapire (1996) 提出了一种名为 AdaBoost(即自适应提升)的变体。它反复使用同一个训练集,因此不需要很大的训练集,但要求分类器必须简单,以免过拟合。AdaBoost 还可以组合任意数量的基学习器,而不限于三个。

AdaBoost 算法

其核心思想是根据错误来调整实例的抽样概率。假设 ptj 表示实例对 (xt,rt) 被抽取来训练第 j 个基学习器的概率。

最初,所有 。然后,从 开始,我们按以下方式添加新的基学习器:ϵj 表示 dj 的错误率。

AdaBoost 要求学习器是弱学习器,即对于所有 j,;如果不是,我们就停止添加新的基学习器。请注意,这个错误率不是针对原始问题,而是针对在步骤 j 中使用的数据集。我们定义 。如果 dj 正确分类了 xt,我们设置 ;否则,设置 。

由于 ptj+1 应该表示概率,因此需要进行归一化,我们将 ptj+1 除以 ∑ptj+1,使它们的总和为 1。这样做的好处是,正确分类实例的概率会降低,而错误分类实例的概率会增加。然后,根据这些修改后的概率 ptj+1,从原始样本中有放回地抽取相同大小的新样本,并用于训练 dj+1。

这样做的效果是,dj+1 会更侧重于被 dj 错误分类的实例;这就是为什么基学习器被选择为简单且不那么精确的原因,因为否则下一个训练样本将只包含少量重复多次的异常值和噪声实例。例如,对于决策树,通常使用决策树桩(decision stumps),即只生长一两层的树。很明显,这些树会有偏差,但方差的减小更大,并且总体误差会降低。像线性判别器这样的算法方差较低,因此我们无法通过 AdaBoosting 线性判别器来获得收益。

3.5.1AdaBoost

Freund and Schapire (1996) proposed a variant, named AdaBoost, short for adaptive boosting,

that uses the same training set over and over and thus need not be large, but the classifiers should be

simple so that they do not overfit. AdaBoost can also combine an arbitrary number of baselearners, not

three.

AdaBoost algorithm

The idea is to modify the probabilities of drawing the instances as a function of the error. Let us say ptj

denotes the probability that the instance pair (xt, r t) is drawn to train the jth base-learner.

Initially, all pt1 = 1/N. Then we add new base-learners as follows, starting from j = 1: Є j denotes the

error rate of dj .

AdaBoost requires that learners are weak, that is, Є j < 1/2,∀ j; if not, we stop adding new base-

learners. Note that this error rate is not on the original problem but on the dataset used at step j. We

define βj = Є j /(1 −Є j) < 1, and we set ptj+1 = βj p tj if dj correctly classifies xt ; otherwise, ptj +1 = ptj.

Because ptj+1 should be probabilities, there is a normalization where we divide ptj+1 by t ptj+1 , so that

they sum up to 1. This has the effect that the probability of a correctly classified instance is decreased,

and the probability of a misclassified instance increases. Then a new sample of the same size is drawn

from the original sample according to these modified probabilities, p tj+1 with replacement, and is used to

train dj+1.

This has the effect that dj+1 focuses more on instances misclassified by dj ; that is why the base-learners

are chosen to be simple and not accurate, since otherwise the next training sample would contain only

a few outlier and noisy instances repeated many times over. For example, with decision trees, decision

stumps, which are trees grown only one or two levels, are used. So it is clear that these would have bias

but the decrease in variance is larger and the overall error decreases. An algorithm like the linear

discriminant has low variance, and we cannot gain by AdaBoosting linear discriminants.