机器学习

我们之前介绍了验证集的概念,或者在数据不足时使用交叉验证。然而,这样做是用计算时间来换取数据,因为许多模型是在不同的数据集上训练的。

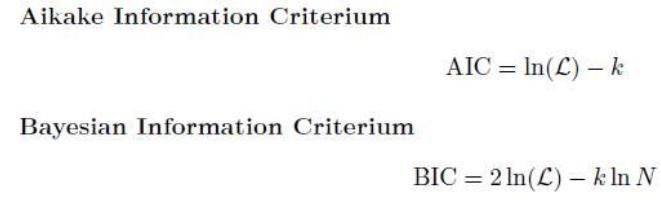

另一种思路是找到某种衡量标准,能够告诉我们训练好的模型预期表现如何。**概率模型选择(或“信息准则”)**提供了一种分析技术,用于对候选模型进行评分和选择。模型根据其在训练数据集上的表现和模型的复杂性进行评分。通常使用以下两种信息准则:

- 赤池信息准则(Akaike Information Criterion, AIC)

- 贝叶斯信息准则(Bayesian Information Criterion, BIC)

在这些公式中,k 是模型中的参数数量,N 是训练样本的数量,L 是模型的最佳(最大)似然值。在这两种情况下,根据公式的写法,选择值最大的模型。

we introduced the idea of a validation set, or using cross-validation if there was not enough

data. However, this replaces data with computation time, as many models are trained on different

datasets.

An alternative idea is to identify some measure that tells us about how well we can expect this

trained model to perform. Probabilistic model selection (or “information criteria”) provides an

analytical technique for scoring and choosing among candidate models. Models are scored both on

their performance on the training dataset and based on the complexity of the model.There are two

such information criteria that are commonly used:

In these equations, k is the number of parameters in the model, N is the number of training

examples, and L is the best (largest) likelihood of the model. In both cases, based on the way that they

are written here, the model with the largest value is taken.