Python Machine Learning Tutorial

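INTRODUCTION

One might think that good text material for text classification examples is easy to come by. After all, hardly a minute goes by in our daily lives in which we are not dealing with written language: newspapers, books and, above all, the internet, most of which is probably still text-based. For our example classifiers, however, the texts must be in machine-readable form, preferably as plain text files, i.e. not formatted in Word or other formats. In addition, the texts must not be protected by copyright.

We use novels from Project Gutenberg as our examples.

The first task is to train a classifier that can predict the author of a paragraph from a novel.

The second example uses novels in various languages: German, Danish, English, Spanish, French, Italian, Dutch and Swedish.

AUTHOR PREDICTION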

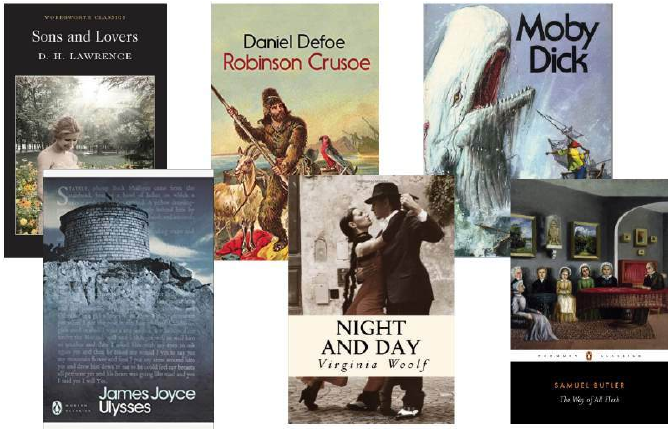

We want to demonstrate the concepts of the previous chapter of our machine learning tutorial in an extended example. We will use the following novels:

- Virginia Woolf: Night and Day
- Samuel Butler: The Way of All Flesh
- Herman Melville: Moby Dick
- David Herbert Lawrence: Sons and Lovers
- Daniel Defoe: The Life and Adventures of Robinson Crusoe
- James Joyce: Ulysses

We will train a classifier with these novels. The classifier should be able to predict the author of an arbitrary text passage.

We will segment the books into lists of paragraphs, using the function `text2paragraphs`, which we introduced as an exercise in our chapter on file handling.

def text2paragraphs(filename, min_size=1):
    """Read the text in the file 'filename' and split it into paragraphs.

    Paragraphs whose string length does not exceed min_size are ignored.
    A list of paragraph strings is returned.
    """
    # Specify the encoding explicitly
    with open(filename, 'r', encoding='utf-8') as f:
        txt = f.read()
    paragraphs = [para for para in txt.split("\n\n") if len(para) > min_size]
    return paragraphs
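To see how the `min_size` filter behaves, here is a minimal sketch that runs the same splitting logic on a small temporary file. The sample text and the temporary file are made up for illustration; they are not part of the book corpus.

```python
import os
import tempfile


def text2paragraphs(filename, min_size=1):
    """Same logic as above: split on blank lines, drop short paragraphs."""
    with open(filename, "r", encoding="utf-8") as f:
        txt = f.read()
    return [para for para in txt.split("\n\n") if len(para) > min_size]


# Made-up sample text: three paragraphs separated by blank lines.
sample = "Short.\n\nA somewhat longer paragraph of text.\n\nTiny"

with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False,
                                 encoding="utf-8") as tmp:
    tmp.write(sample)
    tmp_name = tmp.name

all_paras = text2paragraphs(tmp_name)                # default min_size=1
long_paras = text2paragraphs(tmp_name, min_size=10)  # keep only len > 10
os.remove(tmp_name)

print(len(all_paras), len(long_paras))
```

Note that the comparison is strict: a paragraph whose length equals `min_size` is dropped as well.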

labels = ['Virginia Woolf', 'Samuel Butler', 'Herman Melville',
          'David Herbert Lawrence', 'Daniel Defoe', 'James Joyce']

files = ['night_and_day_virginia_woolf.txt', 'the_way_of_all_flash_butler.txt',
         'moby_dick_melville.txt', 'sons_and_lovers_lawrence.txt',
         'robinson_crusoe_defoe.txt', 'james_joyce_ulysses.txt']

path = "books/"   # the 'books' directory must exist and contain these files

data = []
targets = []
counter = 0
for fname in files:
    paras = text2paragraphs(path + fname, min_size=150)
    data.extend(paras)
    # every paragraph of this book gets the index of its author as target
    targets += [counter] * len(paras)
    counter += 1
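The novels differ in length, so the number of paragraphs per author may differ as well. A quick count per label makes this visible. The sketch below uses a small made-up `targets` list and only three of the labels; with the real data you would pass the `targets` list built in the loop.

```python
from collections import Counter

labels = ['Virginia Woolf', 'Samuel Butler', 'Herman Melville']

# Made-up target indices standing in for the real list of paragraph labels.
targets = [0, 0, 0, 1, 2, 2]

counts = Counter(targets)
for index, name in enumerate(labels):
    print(f"{name}: {counts[index]} paragraphs")
```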

# This cell is not needed, because train_test_split shuffles the data itself!
import random

data_targets = list(zip(data, targets))
# create a random permutation of the list:
data_targets = random.sample(data_targets, len(data_targets))
data, targets = list(zip(*data_targets))

# Split into training and test sets:
from sklearn.model_selection import train_test_split

res = train_test_split(data, targets,
                       train_size=0.8,
                       test_size=0.2,
                       random_state=42)
train_data, test_data, train_targets, test_targets = res
print(len(train_data), len(test_data), len(train_targets), len(test_targets))
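`train_test_split` shuffles the samples by default, so a manual shuffle beforehand is not required. If the per-author proportions should be the same in the training and the test part, the `stratify` parameter can be used. The following sketch shows this on made-up toy data; it is an optional variation, not what the code above does.

```python
from collections import Counter
from sklearn.model_selection import train_test_split

# Toy data: 10 samples of class 0 and 10 samples of class 1.
data = [f"paragraph {i}" for i in range(20)]
targets = [0] * 10 + [1] * 10

train_data, test_data, train_targets, test_targets = train_test_split(
    data, targets,
    test_size=0.2,
    random_state=42,
    stratify=targets)      # keep the class proportions in both parts

print(Counter(train_targets), Counter(test_targets))
```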

We create a Naive Bayes classifier:

from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn import metrics

# 'english' selects scikit-learn's built-in English stop word list
vectorizer = CountVectorizer(stop_words="english")
vectors = vectorizer.fit_transform(train_data)

# creating a classifier
classifier = MultinomialNB(alpha=.01)
classifier.fit(vectors, train_targets)

vectors_test = vectorizer.transform(test_data)
predictions = classifier.predict(vectors_test)
accuracy_score = metrics.accuracy_score(test_targets, predictions)
f1_score = metrics.f1_score(test_targets, predictions, average='macro')
print("accuracy score: ", accuracy_score)
print("F1-score: ", f1_score)

Output:

accuracy score: 0.9123571039738705

F1-score: 0.9097752590254707
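To make the two steps more tangible, here is a minimal sketch on a made-up three-sentence corpus. `CountVectorizer` turns each text into a vector of word counts, and `MultinomialNB` learns per-class word frequencies from these vectors. The sentences and labels are invented for illustration only.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Invented mini corpus with two "authors" (0 and 1).
texts = ["the whale and the sea",
         "the ship sailed the sea",
         "tea and conversation in london"]
targets = [0, 0, 1]

vectorizer = CountVectorizer(stop_words="english")
vectors = vectorizer.fit_transform(texts)

# The learned vocabulary (stop words removed) and the count matrix.
print(sorted(vectorizer.vocabulary_))
print(vectors.toarray())

classifier = MultinomialNB(alpha=0.01)
classifier.fit(vectors, targets)
prediction = classifier.predict(vectorizer.transform(["a whale in the sea"]))
print(prediction)
```

Words that never occur in the training texts of a class receive only the small smoothing mass given by `alpha`, which is why a single distinctive word can dominate a prediction.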

We will now test this classifier with a different book by Virginia Woolf.

paras = text2paragraphs(path + "the_voyage_out_virginia_woolf.txt", min_size=250)
first_para, last_para = 100, 500
vectors_test = vectorizer.transform(paras[first_para: last_para])
#vectors_test = vectorizer.transform(["To be or not to be"])
predictions = classifier.predict(vectors_test)
print(predictions)

# all tested paragraphs are by Virginia Woolf, whose label is 0
targets = [0] * (last_para - first_para)
accuracy_score = metrics.accuracy_score(targets, predictions)
# zero_division=0 avoids warnings for labels that never occur in 'targets'
precision_score = metrics.precision_score(targets, predictions,
                                          average='macro', zero_division=0)
f1_score = metrics.f1_score(targets, predictions,
                            average='macro', zero_division=0)
print("accuracy score: ", accuracy_score)
print("precision score: ", precision_score)
print("F1-score: ", f1_score)

Output:

[5 0 5 5 0 5 5 0 2 5 0 0 5 0 5 0 0 0 1 0 1 0 0 5 1 5 0 0 1 0 0 0 5 2 2 5 0
 2 2 5 0 0 0 0 0 3 0 0 0 0 0 4 2 5 2 3 0 0 0 0 0 0 5 0 0 2 0 0 0 0 0 5 5 5
 0 0 1 0 0 2 2 3 0 2 2 0 5 5 0 5 1 0 0 1 0 5 0 0 5 0 0 3 5 5 0 5 5 5 5 0 5
 0 0 0 0 0 0 1 2 0 0 0 5 0 1 2 2 2 5 5 0 0 0 1 3 0 0 5 1 3 0 0 0 0 3 0 0 0
 0 0 5 0 5 0 5 5 1 1 1 0 0 0 0 0 0 5 0 1 0 0 0 5 5 5 5 0 2 3 5 0 0 0 0 0 0
 5 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 5 5 5 0 0 0 5 5 5 3 0 5 0 0 3 0 0 0 5 0
 0 5 2 0 0 0 0 0 3 0 0 0 0 2 0 0 5 3 5 1 0 5 5 0 5 0 5 0 1 1 1 0 0 0 1 1 3
 1 0 0 5 0 0 5 2 3 0 0 0 5 0 2 2 0 1 0 0 0 0 0 0 3 0 4 0 0 0 0 1 0 0 0 0 1
 1 0 5 5 5 0 5 0 0 0 0 0 5 3 0 0 0 5 3 1 3 0 0 5 0 0 0 0 0 0 3 0 5 5 0 0 0
 3 3 5 0 3 3 0 0 1 5 1 0 0 0 0 2 0 3 0 0 1 1 0 0 0 0 0 0 0 0 0 0 2 2 3 0 0
 0 1 0 0 0 5 0 0 0 0 0 0 0 0 3 0 0 0 0 0 1 5 0 0 0 0 0 0 0 0]

accuracy score: 0.595

precision score: 0.16666666666666666

F1-score: 0.12434691745036573
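The gap between the accuracy and the macro-averaged scores follows from how macro averaging works: every true label is 0, but the predictions contain all six labels, so five classes contribute a score of zero to the average. The sketch below redoes this arithmetic in plain Python. It assumes that 238 of the 400 paragraphs were predicted as label 0, which is what an accuracy of 0.595 implies.

```python
n_samples = 400        # paragraphs tested
n_correct = 238        # 0.595 * 400 paragraphs predicted as label 0
n_labels = 6           # labels occurring in the true or predicted values

accuracy = n_correct / n_samples

# Class 0: every paragraph predicted as 0 really is by Woolf.
precision_0 = 1.0
recall_0 = n_correct / n_samples
f1_0 = 2 * precision_0 * recall_0 / (precision_0 + recall_0)

# Classes 1 to 5 never occur in the true labels, so their scores are 0
# and only class 0 contributes to the macro averages.
macro_precision = precision_0 / n_labels
macro_f1 = f1_0 / n_labels

print(accuracy, macro_precision, macro_f1)
```

For a test set that contains only one true class, accuracy (or the recall of that class) is therefore the more informative number.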

predictions = classifier.predict_proba(vectors_test)

print(predictions)

Output:

[[6.26578058e-004 2.51943113e-002 4.85163038e-008 4.75065393e-005

4.00835263e-014 9.74131556e-001]

[7.12081909e-001 4.92957656e-002 5.37096844e-003 1.68824845e-009

4.99835718e-013 2.33251355e-001]

[1.11615265e-001 1.70149726e-009 8.02170949e-013 1.93038351e-008

3.38381992e-017 8.88384714e-001]

...

[9.99433053e-001 5.66946558e-004 6.87847449e-032 2.49682983e-019

9.56365457e-038 3.61259105e-033]

[9.99999991e-001 7.95355880e-009 9.29384687e-029 2.81898441e-033

1.49766211e-060 8.27077882e-010]

[1.00000000e+000 2.80028853e-054 1.53409474e-068 4.12917577e-086

3.33829236e-115 1.78467356e-057]]
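Each row of the `predict_proba` result holds one probability per class, and the predicted label is the column with the largest value. The sketch below maps the first three rows of the output above back to author names. It assumes that the columns are ordered like the label indices 0 to 5, which is the case when `classifier.classes_` is `[0, 1, 2, 3, 4, 5]`.

```python
import numpy as np

labels = ['Virginia Woolf', 'Samuel Butler', 'Herman Melville',
          'David Herbert Lawrence', 'Daniel Defoe', 'James Joyce']

# First three probability rows copied from the output above.
probabilities = np.array([
    [6.26578058e-04, 2.51943113e-02, 4.85163038e-08,
     4.75065393e-05, 4.00835263e-14, 9.74131556e-01],
    [7.12081909e-01, 4.92957656e-02, 5.37096844e-03,
     1.68824845e-09, 4.99835718e-13, 2.33251355e-01],
    [1.11615265e-01, 1.70149726e-09, 8.02170949e-13,
     1.93038351e-08, 3.38381992e-17, 8.88384714e-01]])

best = probabilities.argmax(axis=1)       # column index of the largest value
for index, row in zip(best, probabilities):
    print(labels[index], round(float(row[index]), 3))
```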

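You may have hoped for a better result and may be disappointed. On the other hand, this result is quite impressive. In nearly 60 % of all cases we got the label 0, which stands for Virginia Woolf and her novel "Night and Day". We can say that our classifier recognized Woolf's writing style from the words alone in nearly 60 percent of the paragraphs, even though they come from a different novel.

Let us have a look at the first 10 paragraphs which we have tested:

for i in range(0, 10):
    print(predictions[i], paras[i+first_para])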

Output:

[6.26578058e-04 2.51943113e-02 4.85163038e-08 4.75065393e-05

4.00835263e-14 9.74131556e-01] "That's the painful thing about pets," said Mr. Dalloway; "they die. The

first sorrow I can remember was for the death of a dormouse. I regret to

say that I sat upon it. Still, that didn't make one any the less sorry.

Here lies the duck that Samuel Johnson sat on, eh? I was big for my

age."

[7.12081909e-01 4.92957656e-02 5.37096844e-03 1.68824845e-09

4.99835718e-13 2.33251355e-01] "Please tell me--everything." That was what she wanted to say. He had

drawn apart one little chink and showed astonishing treasures. It seemed

to her incredible that a man like that should be willing to talk to her.

He had sisters and pets, and once lived in the country. She stirred her

tea round and round; the bubbles which swam and clustered in the cup

seemed to her like the union of their minds.

[1.11615265e-01 1.70149726e-09 8.02170949e-13 1.93038351e-08

3.38381992e-17 8.88384714e-01] The talk meanwhile raced past her, and when Richard suddenly stated in a

jocular tone of voice, "I'm sure Miss Vinrace, now, has secret leanings

towards Catholicism," she had no idea what to answer, and Helen could

not help laughing at the start she gave.

[1.94979929e-05 4.16423135e-06 1.30402613e-13 4.90014758e-03

1.02628751e-18 9.95076190e-01] However, breakfast was over and Mrs. Dalloway was rising. "I always

think religion's like collecting beetles," she said, summing up the

discussion as she went up the stairs with Helen. "One person has a

passion for black beetles; another hasn't; it's no good arguing about

it. What's _your_ black beetle now?"

[1.00000000e+00 2.88701360e-46 1.83061388e-38 5.54119421e-32

7.87165681e-71 1.33908569e-29] It was as though a blue shadow had fallen across a pool. Their eyes

became deeper, and their voices more cordial. Instead of joining them

as they began to pace the deck, Rachel was indignant with the prosperous

matrons, who made her feel outside their world and motherless, and

turning back, she left them abruptly. She slammed the door of her room,

and pulled out her music. It was all old music--Bach and Beethoven,

Mozart and Purcell--the pages yellow, the engraving rough to the finger.

In three minutes she was deep in a very difficult, very classical fugue

in A, and over her face came a queer remote impersonal expression of

complete absorption and anxious satisfaction. Now she stumbled; now she

faltered and had to play the same bar twice over; but an invisible

line seemed to string the notes together, from which rose a shape,

a building. She was so far absorbed in this work, for it was really

difficult to find how all these sounds should stand together, and drew

upon the whole of her faculties, that she never heard a knock at the

door. It was burst impulsively open, and Mrs. Dalloway stood in the room

leaving the door open, so that a strip of the white deck and of the blue

sea appeared through the opening. The shape of the Bach fugue crashed to

the ground.

[3.01049983e-02 2.33225150e-01 1.44790362e-07 2.08470928e-02

1.21445899e-20 7.15822614e-01] "He wrote awfully well, didn't he?" said Clarissa; "--if one likes

that kind of thing--finished his sentences and all that. _Wuthering_

_Heights_! Ah--that's more in my line. I really couldn't exist without

the Brontes! Don't you love them? Still, on the whole, I'd rather live

without them than without Jane Austen."

[8.44480345e-03 4.79211117e-16 5.36229064e-04 1.94962600e-08

1.93352536e-27 9.91018948e-01] How divine!--and yet what nonsense!" She looked lightly round the room.

"I always think it's _living_, not dying, that counts. I really respect

some snuffy old stockbroker who's gone on adding up column after column

all his days, and trotting back to his villa at Brixton with some old

pug dog he worships, and a dreary little wife sitting at the end of the

table, and going off to Margate for a fortnight--I assure you I know

heaps like that--well, they seem to me _really_ nobler than poets whom

every one worships, just because they're geniuses and die young. But I

don't expect _you_ to agree with me!"

[9.99929790e-01 2.75362913e-05 7.08502304e-14 4.80647305e-11

3.30471723e-13 4.26739511e-05] "When you're my age you'll see that the world is _crammed_ with

delightful things. I think young people make such a mistake about

that--not letting themselves be happy. I sometimes think that happiness

is the only thing that counts. I don't know you well enough to say, but

I should guess you might be a little inclined to--when one's young and

attractive--I'm going to say it!--_every_thing's at one's feet." She

glanced round as much as to say, "not only a few stuffy books and

Bach."

[1.06997945e-10 1.91268645e-22 9.99999647e-01 6.84957708e-12

3.46586775e-07 5.86836045e-09] The shores of Portugal were beginning to lose their substance; but

the land was still the land, though at a great distance. They could

distinguish the little towns that were sprinkled in the folds of the

hills, and the smoke rising faintly. The towns appeared to be very small

in comparison with the great purple mountains behind them.

[4.71639134e-05 1.59969960e-12 3.57196090e-02 3.39541813e-12

2.99749181e-17 9.64233227e-01] Rachel followed her eyes and found that they rested for a second, on the

robust figure of Richard Dalloway, who was engaged in striking a match

on the sole of his boot; while Willoughby expounded something, which

seemed to be of great interest to them both.

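The paragraph with index 100 was predicted as "Ulysses" by James Joyce. This paragraph contains the name "Samuel Johnson". "Ulysses" contains many occurrences of "Samuel" and "Johnson", whereas "Night and Day" contains neither "Samuel" nor "Johnson". So this might be one of the reasons for the prediction.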
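One way to check such a hypothesis is to look at the per-class word probabilities the model has learned. A fitted `MultinomialNB` stores them (as logarithms) in `feature_log_prob_`, and the column of a word can be looked up in `vectorizer.vocabulary_`. The sketch below does this on an invented two-class mini corpus; the texts are placeholders, not excerpts from the novels.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Invented placeholder texts: only class 1 mentions "johnson".
texts = ["katharine poured the tea", "samuel johnson met johnson"]
targets = [0, 1]

vectorizer = CountVectorizer()
vectors = vectorizer.fit_transform(texts)
classifier = MultinomialNB(alpha=0.01).fit(vectors, targets)

column = vectorizer.vocabulary_["johnson"]
log_probs = classifier.feature_log_prob_[:, column]   # one value per class
for label, log_prob in zip(classifier.classes_, log_probs):
    print(label, log_prob)
```

With the real classifier, the same lookup for "samuel" and "johnson" would show how strongly each author's column favours these words.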

We have trained a Naive Bayes classifier using `MultinomialNB`. Now we want to train a neural network, for which we will use `MLPClassifier`. Be warned: this will take a long time unless you have an extremely fast computer. On my computer it takes about five minutes!

from sklearn.feature_extraction.text import CountVectorizer
from sklearn.neural_network import MLPClassifier
from sklearn import metrics

vectorizer = CountVectorizer(stop_words="english")
vectors = vectorizer.fit_transform(train_data)
print("Creating a classifier. This will take some time!")
classifier = MLPClassifier(random_state=1, max_iter=300).fit(vectors, train_targets)

Output:

Creating a classifier. This will take some time!

vectors_test = vectorizer.transform(test_data)
predictions = classifier.predict(vectors_test)
accuracy_score = metrics.accuracy_score(test_targets, predictions)
f1_score = metrics.f1_score(test_targets, predictions, average='macro')
print("accuracy score: ", accuracy_score)
print("F1-score: ", f1_score)

Output:

accuracy score: 0.9085465432770822

F1-score: 0.9125873156984565
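If the training time is a problem, a smaller network or early stopping may help. Both `hidden_layer_sizes` and `early_stopping` are regular parameters of `MLPClassifier`; the concrete values below are arbitrary choices for illustration, and their effect on the accuracy for the novel data has not been measured here. The sketch runs on invented toy data so that it finishes quickly.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.neural_network import MLPClassifier

# Invented toy corpus, repeated to have enough samples for a validation split.
texts = ["the whale and the sea", "tea and talk in london"] * 20
targets = [0, 1] * 20

vectorizer = CountVectorizer()
vectors = vectorizer.fit_transform(texts)

classifier = MLPClassifier(hidden_layer_sizes=(20,),  # one small hidden layer
                           early_stopping=True,       # stop when the validation score stalls
                           random_state=1,
                           max_iter=300)
classifier.fit(vectors, targets)

predictions = classifier.predict(vectorizer.transform(["a whale at sea"]))
print(predictions)
```

With `early_stopping=True`, scikit-learn sets aside a part of the training data as a validation set, so slightly less data is used for fitting.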

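LANGUAGE PREDICTION

We will now train a classifier that can recognize the language of a text for the following languages: German, Danish, English, Spanish, French, Italian, Dutch and Swedish.

We will use two books per language for training and testing purposes, with the exception of Danish, for which the directory contains only one. The authors and book titles should be recognizable from the following file names: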

import os

os.listdir("books/various_languages")

Output:

['it_alessandro_manzoni_i_promessi_sposi.txt',

'es_antonio_de_alarcon_novelas_cortas.txt',

'de_nietzsche_also_sprach_zarathustra.txt',

'nl_lodewijk_van_deyssel.txt',

'de_goethe_leiden_des_jungen_werther2.txt',

'se_august_strindberg_röda_rummet.txt',

'license',

'it_amato_gennaro_una_sfida_al_polo.txt',

'nl_cornelis_johannes_kieviet_Dik_Trom_en_sijn_dorpgenooten.txt',

'fr_emile_zola_la_bete_humaine.txt',

'se_selma_lagerlöf_bannlyst.txt',

'de_goethe_leiden_des_jungen_werther1.txt',

'en_virginia_woolf_night_and_day.txt',

'original',

'es_mguel_de_cervantes_don_cuijote.txt',

'en_herman_melville_moby_dick.txt',

'dk_andreas_lauritz_clemmensen_beskrivelser_og_tegninger.txt',

'fr_emile_zola_germinal.txt']

path = "books/various_languages/"
files = os.listdir(path)

# the first two characters of each .txt file name identify the language
labels = {fname[:2] for fname in files if fname.endswith(".txt")}
labels = sorted(labels)
print(labels)

Output:

['de', 'dk', 'en', 'es', 'fr', 'it', 'nl', 'se']
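The two-letter prefixes are the file-name convention of this data set and not strictly ISO 639-1 language codes: `dk` and `se` are country codes, whereas the ISO language codes for Danish and Swedish are `da` and `sv`. For readable output it can help to map the prefixes to language names. The dictionary `language_names` below is a hypothetical helper, not part of the original code.

```python
labels = ['de', 'dk', 'en', 'es', 'fr', 'it', 'nl', 'se']

# Hypothetical helper: file-name prefix -> language name.
language_names = {'de': 'German', 'dk': 'Danish', 'en': 'English',
                  'es': 'Spanish', 'fr': 'French', 'it': 'Italian',
                  'nl': 'Dutch', 'se': 'Swedish'}

for index, code in enumerate(labels):
    print(index, code, language_names[code])
```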

print(files)

Output:

['it_alessandro_manzoni_i_promessi_sposi.txt', 'es_antonio_de_alarcon_novelas_cortas.txt', 'de_nietzsche_also_sprach_zarathustra.txt', 'nl_lodewijk_van_deyssel.txt', 'de_goethe_leiden_des_jungen_werther2.txt', 'se_august_strindberg_röda_rummet.txt', 'license', 'it_amato_gennaro_una_sfida_al_polo.txt', 'nl_cornelis_johannes_kieviet_Dik_Trom_en_sijn_dorpgenooten.txt', 'fr_emile_zola_la_bete_humaine.txt', 'se_selma_lagerlöf_bannlyst.txt', 'de_goethe_leiden_des_jungen_werther1.txt', 'en_virginia_woolf_night_and_day.txt', 'original', 'es_mguel_de_cervantes_don_cuijote.txt', 'en_herman_melville_moby_dick.txt', 'dk_andreas_lauritz_clemmensen_beskrivelser_og_tegninger.txt', 'fr_emile_zola_germinal.txt']

data = []
targets = []
for fname in files:
    # only process the .txt files; 'license' and 'original' are skipped
    if fname.endswith(".txt"):
        paras = text2paragraphs(path + fname, min_size=150)
        data.extend(paras)
        country = fname[:2]             # language prefix of the file name
        index = labels.index(country)   # position of the prefix in 'labels'
        targets += [index] * len(paras)

import random

data_targets = list(zip(data, targets))
# create a random permutation of the list:
data_targets = random.sample(data_targets, len(data_targets))
data, targets = list(zip(*data_targets))

from sklearn.model_selection import train_test_split

res = train_test_split(data, targets,
                       train_size=0.8,
                       test_size=0.2,
                       random_state=42)
train_data, test_data, train_targets, test_targets = res

from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn import metrics

# The English stop word list only affects the English texts;
# a plain CountVectorizer() without stop words is an alternative.
vectorizer = CountVectorizer(stop_words="english")
#vectorizer = CountVectorizer()
vectors = vectorizer.fit_transform(train_data)

# creating a classifier
classifier = MultinomialNB(alpha=.01)
classifier.fit(vectors, train_targets)

vectors_test = vectorizer.transform(test_data)
predictions = classifier.predict(vectors_test)
accuracy_score = metrics.accuracy_score(test_targets, predictions)
f1_score = metrics.f1_score(test_targets, predictions, average='macro')
print("accuracy score: ", accuracy_score)
print("F1-score: ", f1_score)

Output:

accuracy score: 0.9946569178852643

F1-score: 0.9966453736745848
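Word counts work well here, presumably because the vocabularies of the languages overlap only a little. A common alternative for language identification is to count character n-grams instead of words, which `CountVectorizer` supports via `analyzer='char_wb'` and `ngram_range`. The following sketch only shows the feature extraction on two invented sentences; whether it beats the word-based model on this corpus has not been tested here.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Two invented example sentences (German and English).
texts = ["das ist ein kurzer Satz", "this is a short sentence"]

# Character n-grams of length 1 to 3, built inside word boundaries.
vectorizer = CountVectorizer(analyzer='char_wb', ngram_range=(1, 3))
vectors = vectorizer.fit_transform(texts)

print(vectors.shape)
print('th' in vectorizer.vocabulary_, 'das' in vectorizer.vocabulary_)
```

Character n-grams can also handle words that never occurred in the training data, at the price of a larger feature space.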

Let us check this classifier with some arbitrary texts in different languages:

some_texts = ["Es ist nicht von Bedeutung, wie langsam du gehst, solange du nicht stehenbleibst.",   # German
              "Man muss das Unmögliche versuchen, um das Mögliche zu erreichen.",                    # German
              "It's so much darker when a light goes out than it would have been if it had never shone.",   # English
              "Rien n'est jamais fini, il suffit d'un peu de bonheur pour que tout recommence.",     # French
              "Girano le stelle nella notte ed io ti penso forte forte e forte ti vorrei"]           # Italian

# sources of the quotes; they are not used for the prediction
sources = ["Konfuzius", "Hermann Hesse", "John Steinbeck", "Emile Zola", "Gianna Nannini"]

vtest = vectorizer.transform(some_texts)
predictions = classifier.predict(vtest)
for label in predictions:
    # print the predicted index and the corresponding language prefix
    print(label, labels[label])

Output:

0 de

0 de

2 en

4 fr

5 it
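The `sources` list is not used in the loop above. A small variation prints the source next to each predicted language. To keep the sketch self-contained, the predicted indices are hard-coded to the values shown in the output above instead of being computed by the classifier.

```python
labels = ['de', 'dk', 'en', 'es', 'fr', 'it', 'nl', 'se']
sources = ["Konfuzius", "Hermann Hesse", "John Steinbeck",
           "Emile Zola", "Gianna Nannini"]

# Hard-coded copy of the predictions printed above.
predictions = [0, 0, 2, 4, 5]

results = [(source, labels[index])
           for source, index in zip(sources, predictions)]
for source, language in results:
    print(f"{source}: {language}")
```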

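FOOTNOTES

- Logically, `toarray` and `todense` are the same thing, but `toarray` returns an `ndarray` whereas `todense` returns a `matrix`. If you consider what the official NumPy documentation says about the `numpy.matrix` class, you should not use `todense`: "It is no longer recommended to use this class, even for linear algebra. Instead use regular arrays. The class may be removed in the future." (numpy.matrix)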
