Qlib-巨人級的AI量化投資平台

章节大纲

-

引言

Qlib 是一个面向人工智能的量化投资平台,旨在发掘人工智能技术在量化投资领域的潜力、助力研究并创造价值。

通过 Qlib,用户可以轻松尝试他们的想法,以创建更好的量化投资策略。

框架

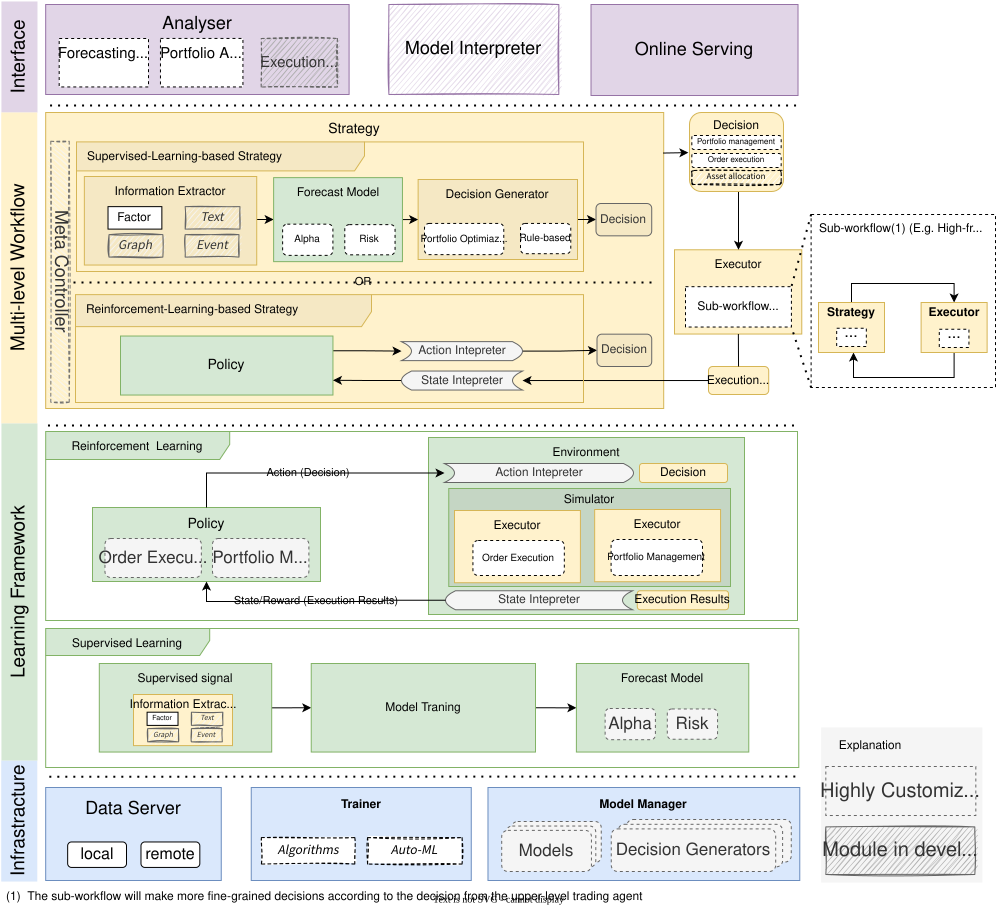

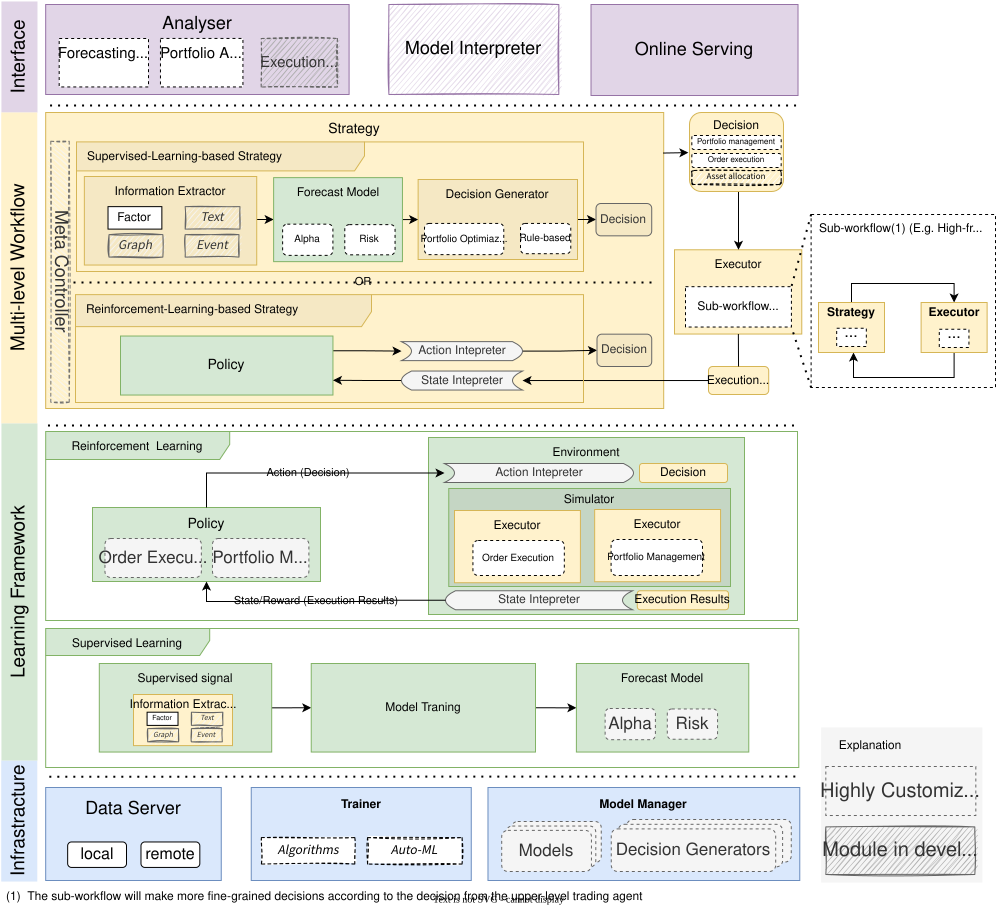

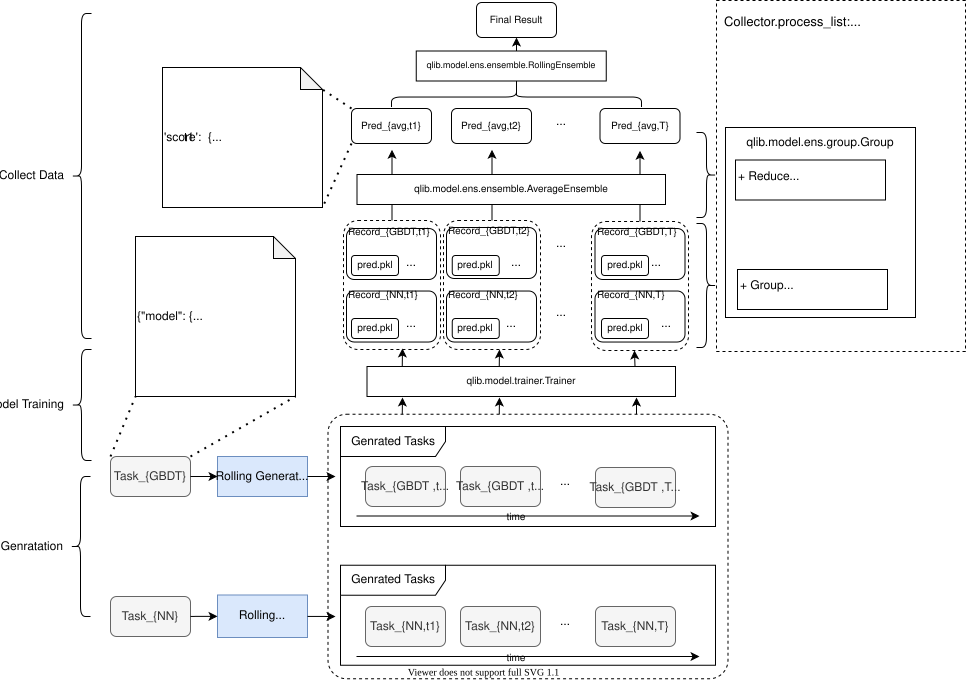

从模块层面来看,Qlib 是一个由上述组件构成的平台。这些组件被设计成松散耦合的模块,每个组件都可以独立使用。

这个框架对于 Qlib 新用户来说可能有些难以理解。它试图尽可能准确地包含 Qlib 设计的许多细节。对于新用户,你可以先跳过这部分,稍后再回来阅读。

名称 描述 基础设施层 基础设施层为量化研究提供底层支持。DataServer(数据服务器)为用户提供高性能的基础设施,用于管理和检索原始数据。Trainer(训练器)提供灵活的接口来控制模型的训练过程,从而使算法能够控制训练过程。 学习框架层 预测模型和交易代理都是可训练的。它们基于学习框架层进行训练,然后应用于工作流层中的多个场景。支持的学习范式可分为强化学习和监督学习。该学习框架也利用了工作流层(例如,共享信息提取器、基于执行环境创建环境)。 工作流层 工作流层涵盖了量化投资的整个工作流程。它支持基于监督学习和基于强化学习的策略。信息提取器为模型提取数据。预测模型专注于为其他模块生成各种预测信号(例如阿尔法、风险)。有了这些信号,决策生成器将生成目标交易决策(即投资组合、订单)。如果采用基于强化学习的策略,策略将以端到端的方式学习,并直接生成交易决策。决策将由执行环境(即交易市场)执行。可能存在多个级别的策略和执行器(例如,订单执行交易策略和盘中订单执行器可以像一个盘间交易循环,并嵌套在日常投资组合管理交易策略和盘间交易执行器交易循环中)。 接口层 接口层试图为底层系统提供一个用户友好的界面。分析器模块将为用户提供预测信号、投资组合和执行结果的详细分析报告。

-

以手绘风格展示的模块正在开发中,未来将会发布。

-

带有虚线边框的模块是高度可定制和可扩展的。

-

(附:框架图是用 https://draw.io/ 创建的。)

活动:0 -

-

Quick Start (快速上手)

简介

本篇快速上手指南旨在展示如何利用 Qlib 轻松构建完整的量化研究工作流,并验证用户的想法。

本指南将演示,即使是使用公开数据和简单的模型,机器学习技术在实际的量化投资中也能发挥很好的作用。

安装

用户可以按照以下步骤轻松安装 Qlib:

在从源代码安装 Qlib 之前,需要先安装一些依赖项:

pip install numpy pip install --upgrade cython克隆代码仓库并安装 Qlib:

git clone https://github.com/microsoft/qlib.git && cd qlib python setup.py install要了解更多关于安装的信息,请参阅 Qlib 安装。

准备数据

运行以下代码加载并准备数据:

python scripts/get_data.py qlib_data --target_dir ~/.qlib/qlib_data/cn_data --region cn该数据集是通过

scripts/data_collector/中的爬虫脚本收集的公开数据创建的,这些脚本已在同一个代码仓库中发布。用户可以使用这些脚本创建相同的数据集。要了解更多关于准备数据的信息,请参阅 数据准备。

自动化量化研究工作流

Qlib 提供了一个名为

qrun的工具,可以自动运行整个工作流(包括构建数据集、训练模型、回测和评估)。用户可以按照以下步骤启动自动化量化研究工作流并获得图形化报告分析:量化研究工作流:

使用 LightGBM 模型的配置文件

workflow_config_lightgbm.yaml运行qrun,如下所示。cd examples # 避免在包含 `qlib` 的目录下运行程序 qrun benchmarks/LightGBM/workflow_config_lightgbm.yaml工作流结果

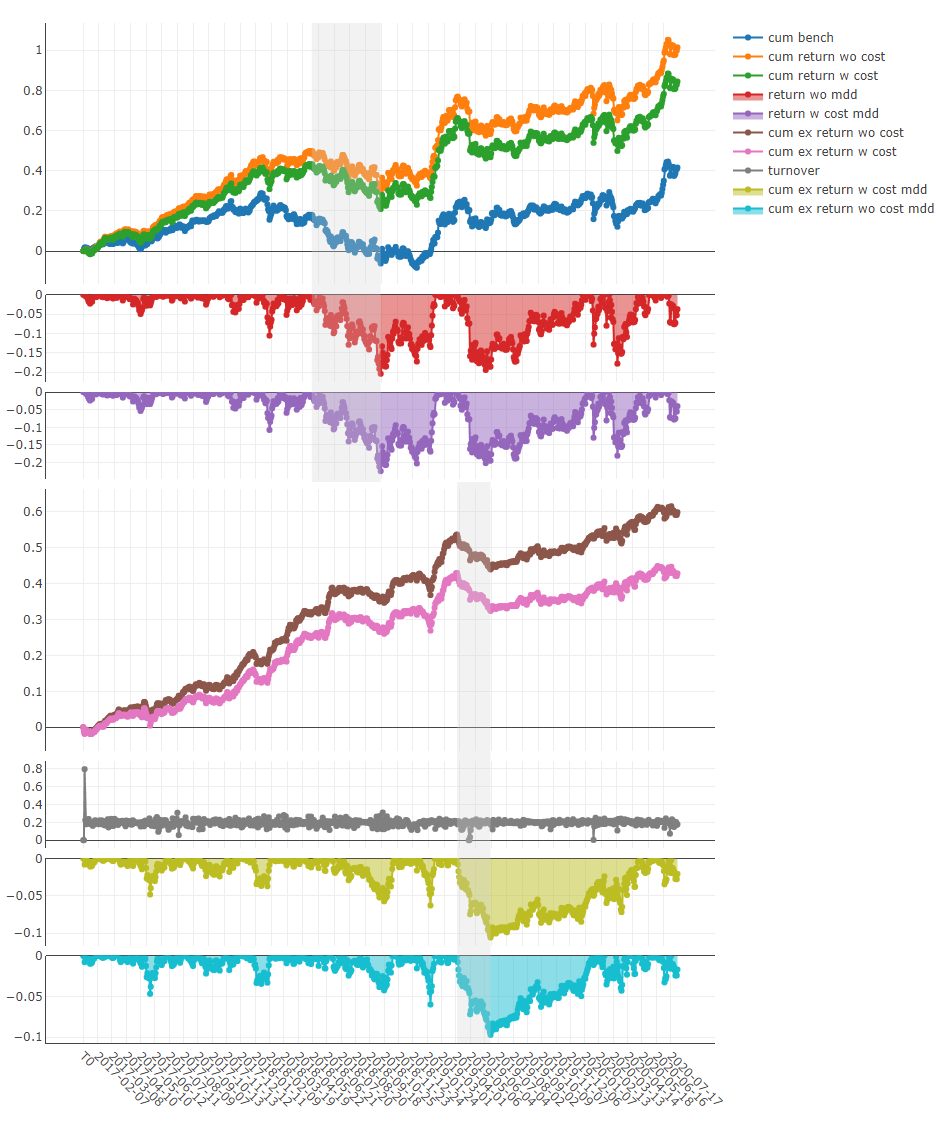

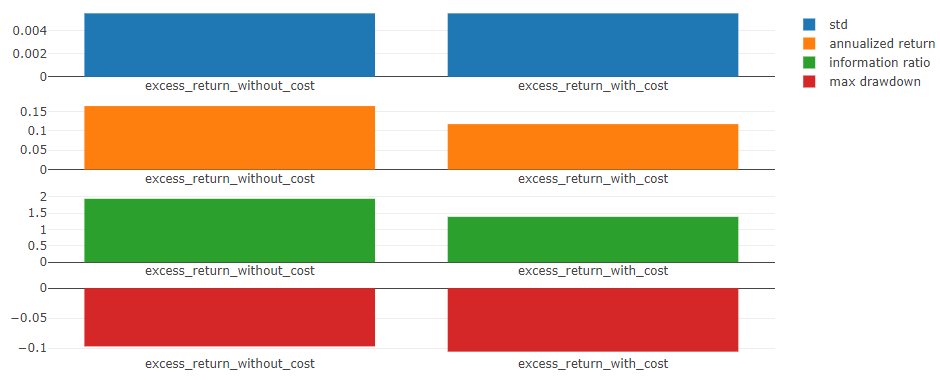

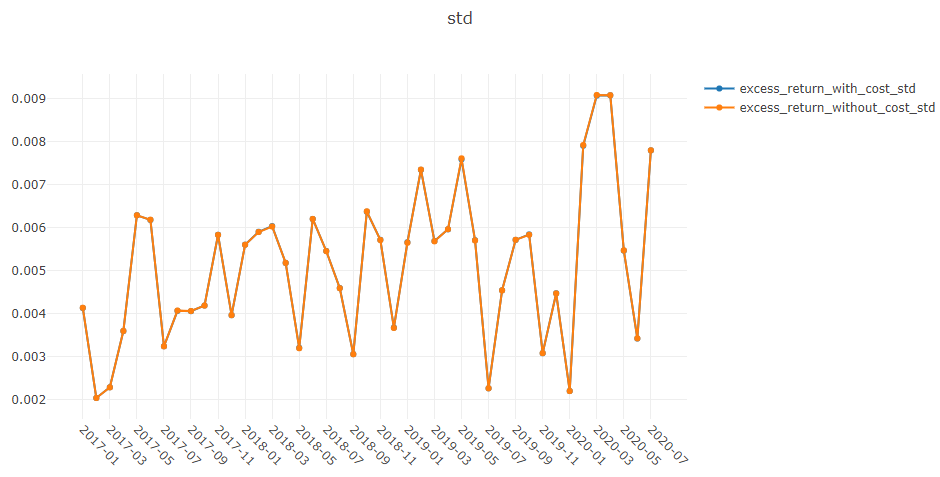

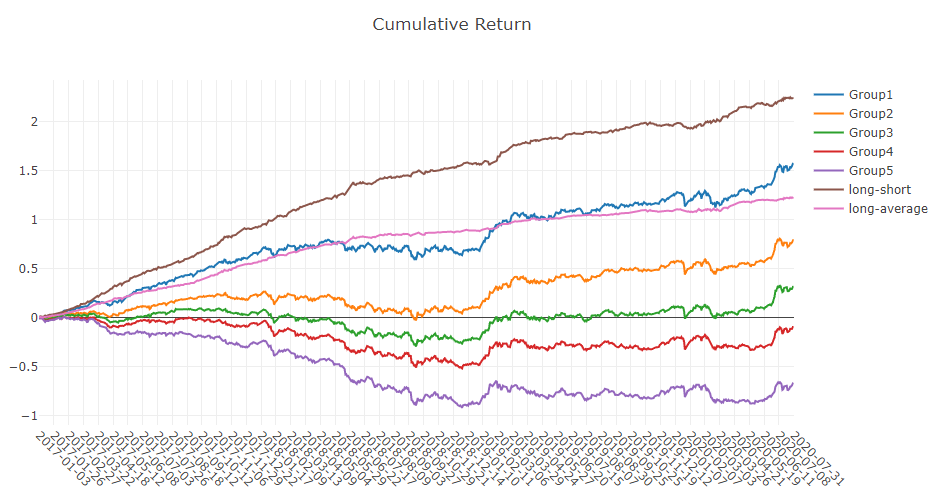

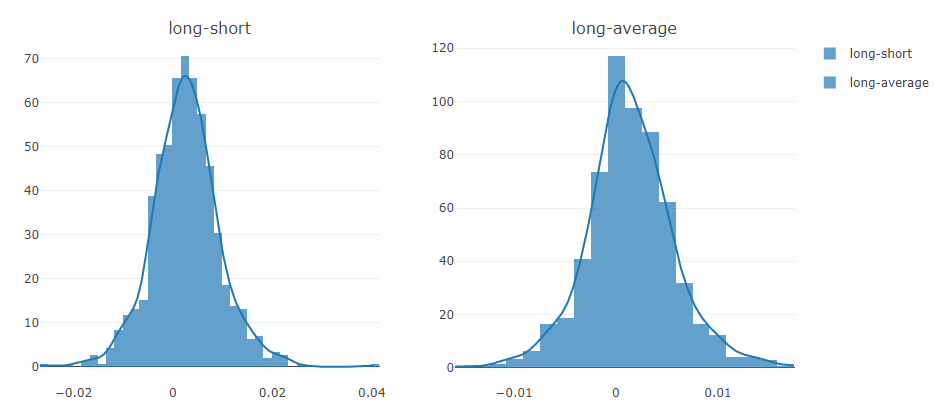

qrun的结果如下,这也是预测模型 (alpha) 的典型结果。有关结果的更多详细信息,请参阅 盘中交易。risk excess_return_without_cost mean 0.000605 std 0.005481 annualized_return 0.152373 information_ratio 1.751319 max_drawdown -0.059055 excess_return_with_cost mean 0.000410 std 0.005478 annualized_return 0.103265 information_ratio 1.187411 max_drawdown -0.075024要了解更多关于工作流和

qrun的信息,请参阅 工作流:工作流管理。图形化报告分析:

使用 jupyter notebook 运行

examples/workflow_by_code.ipynb。用户可以通过运行

examples/workflow_by_code.ipynb进行投资组合分析或预测得分(模型预测)分析。图形化报告

用户可以获得关于分析的图形化报告,更多详细信息请参阅 分析:评估与结果分析。

自定义模型集成

Qlib 提供了一系列模型(例如 lightGBM 和 MLP 模型)作为预测模型的示例。除了默认模型,用户还可以将自己的自定义模型集成到 Qlib 中。如果用户对自定义模型感兴趣,请参阅 自定义模型集成。

活动:0 -

安装

Qlib 安装

注意

Qlib 支持 Windows 和 Linux 操作系统,但建议在 Linux 系统上使用。Qlib 支持 Python 3,最高版本为 Python 3.8。

用户可以通过

pip轻松安装 Qlib,只需运行以下命令:pip install pyqlib此外,用户还可以通过源代码安装 Qlib,步骤如下:

进入 Qlib 的根目录,也就是

setup.py文件所在的目录。然后,执行以下命令来安装环境依赖和 Qlib:

$ pip install numpy $ pip install --upgrade cython $ git clone https://github.com/microsoft/qlib.git && cd qlib $ python setup.py install注意

建议使用 Anaconda/Miniconda 来设置环境。Qlib 需要 lightgbm 和 pytorch 这两个包,请使用

pip来安装它们。使用以下代码来验证安装是否成功:

import qlib qlib.__version__ <LATEST VERSION>活动:0 -

Qlib 初始化

初始化

请按照以下步骤初始化 Qlib。

-

下载并准备数据:执行以下命令下载股票数据。请注意,这些数据是从 Yahoo Finance 收集的,可能并不完美。如果您有高质量的数据集,我们建议您准备自己的数据。有关自定义数据集的更多信息,请参阅数据部分。

python scripts/get_data.py qlib_data --target_dir ~/.qlib/qlib_data/cn_data --region cn有关

get_data.py的更多信息,请参阅数据准备。 -

在调用其他 API 之前初始化 Qlib:在 Python 中运行以下代码。

Pythonimport qlib # region in [REG_CN, REG_US] from qlib.constant import REG_CN provider_uri = "~/.qlib/qlib_data/cn_data" # 你的目标目录 qlib.init(provider_uri=provider_uri, region=REG_CN)

注意

请不要在 Qlib 的代码仓库目录中导入

qlib包,否则可能会出现错误。

参数

除了

provider_uri和region之外,qlib.init还有其他参数。以下是qlib.init的几个重要参数(Qlib 有很多配置,这里只列出部分参数。更详细的设置请参阅这里):-

provider_uri:类型为str。Qlib 数据的 URI。例如,它可以是get_data.py加载数据后存储的目录。 -

region:类型为str,可选参数(默认为qlib.constant.REG_CN)。目前支持qlib.constant.REG_US('us') 和qlib.constant.REG_CN('cn')。不同的region值会对应不同的股票市场模式。-

qlib.constant.REG_US:美股市场。 -

qlib.constant.REG_CN:A股市场。

不同的模式会导致不同的交易限制和成本。

region只是用于定义一系列配置的快捷方式,包括最小交易单位(trade_unit)、交易限制(limit_threshold)等。它不是必需的,如果现有区域设置无法满足您的需求,您可以手动设置关键配置。 -

-

redis_host:类型为str,可选参数(默认为 "127.0.0.1"),redis的主机名。锁定和缓存机制依赖于redis。 -

redis_port:类型为int,可选参数(默认为 6379),redis的端口号。

注意

region的值应与provider_uri中存储的数据保持一致。目前,scripts/get_data.py只提供 A股市场数据。如果用户想使用美股市场数据,他们应该在provider_uri中准备自己的美股数据,并切换到美股模式。注意

如果 Qlib 无法通过

redis_host和redis_port连接到 Redis,将不会使用缓存机制!详情请参阅缓存。-

exp_manager:类型为dict,可选参数,用于在qlib中使用的实验管理器设置。用户可以指定一个实验管理器类,以及所有实验的追踪 URI。但是,请注意,我们只支持以下样式的字典作为exp_manager的输入。有关exp_manager的更多信息,用户可以参考记录器:实验管理。Python# 例如,如果你想将你的 tracking_uri 设置为一个 <特定文件夹>,你可以如下初始化 qlib qlib.init(provider_uri=provider_uri, region=REG_CN, exp_manager= { "class": "MLflowExpManager", "module_path": "qlib.workflow.expm", "kwargs": { "uri": "python_execution_path/mlruns", "default_exp_name": "Experiment", }}) -

mongo:类型为dict,可选参数,用于 MongoDB 的设置,这将在某些功能(如任务管理)中使用,以实现高性能和集群处理。用户需要首先按照安装中的步骤安装 MongoDB,然后通过 URI 访问它。用户可以通过将 "task_url" 设置为 "mongodb://%s:%s@%s" % (user, pwd, host + ":" + port) 这样的字符串,使用凭证访问 MongoDB。Python# 例如,你可以如下初始化 qlib qlib.init(provider_uri=provider_uri, region=REG_CN, mongo={ "task_url": "mongodb://localhost:27017/", # 你的 mongo url "task_db_name": "rolling_db", # 任务管理的数据库名称 }) -

logging_level:系统的日志级别。 -

kernels:在 Qlib 的表达式引擎中计算特征时使用的进程数。在调试表达式计算异常时,将其设置为 1 是非常有帮助的。

活动:0 -

-

数据检索

简介

用户可以使用 Qlib 获取股票数据。以下示例展示了其基本的用户界面。

示例

QLib 初始化:

注意

为了获取数据,用户需要先使用

qlib.init初始化 Qlib。请参阅初始化。如果用户按照初始化中的步骤下载了数据,则应使用以下代码初始化 qlib:

Python>> import qlib >> qlib.init(provider_uri='~/.qlib/qlib_data/cn_data')加载指定时间范围和频率的交易日历:

Python>> from qlib.data import D >> D.calendar(start_time='2010-01-01', end_time='2017-12-31', freq='day')[:2] [Timestamp('2010-01-04 00:00:00'), Timestamp('2010-01-05 00:00:00')]将给定的市场名称解析为股票池配置:

Python>> from qlib.data import D >> D.instruments(market='all') {'market': 'all', 'filter_pipe': []}加载指定时间范围内的特定股票池的成分股:

Python>> from qlib.data import D >> instruments = D.instruments(market='csi300') >> D.list_instruments(instruments=instruments, start_time='2010-01-01', end_time='2017-12-31', as_list=True)[:6] ['SH600036', 'SH600110', 'SH600087', 'SH600900', 'SH600089', 'SZ000912']根据名称过滤器从基础市场加载动态成分股:

Python>> from qlib.data import D >> from qlib.data.filter import NameDFilter >> nameDFilter = NameDFilter(name_rule_re='SH[0-9]{4}55') >> instruments = D.instruments(market='csi300', filter_pipe=[nameDFilter]) >> D.list_instruments(instruments=instruments, start_time='2015-01-01', end_time='2016-02-15', as_list=True) ['SH600655', 'SH601555']根据表达式过滤器从基础市场加载动态成分股:

Python>> from qlib.data import D >> from qlib.data.filter import ExpressionDFilter >> expressionDFilter = ExpressionDFilter(rule_expression='$close>2000') >> instruments = D.instruments(market='csi300', filter_pipe=[expressionDFilter]) >> D.list_instruments(instruments=instruments, start_time='2015-01-01', end_time='2016-02-15', as_list=True) ['SZ000651', 'SZ000002', 'SH600655', 'SH600570']有关过滤器的更多详细信息,请参阅过滤器 API。

加载指定时间范围内特定成分股的特征:

Python>> from qlib.data import D >> instruments = ['SH600000'] >> fields = ['$close', '$volume', 'Ref($close, 1)', 'Mean($close, 3)', '$high-$low'] >> D.features(instruments, fields, start_time='2010-01-01', end_time='2017-12-31', freq='day').head().to_string() ' $close $volume Ref($close, 1) Mean($close, 3) $high-$low... instrument datetime... SH600000 2010-01-04 86.778313 16162960.0 88.825928 88.061483 2.907631... 2010-01-05 87.433578 28117442.0 86.778313 87.679273 3.235252... 2010-01-06 85.713585 23632884.0 87.433578 86.641825 1.720009... 2010-01-07 83.788803 20813402.0 85.713585 85.645322 3.030487... 2010-01-08 84.730675 16044853.0 83.788803 84.744354 2.047623'加载指定时间范围内特定股票池的特征:

注意

启用缓存后,qlib 数据服务器将为请求的股票池和字段始终缓存数据,这可能会导致首次处理请求的时间比没有缓存时更长。但第一次之后,即使请求的时间段发生变化,具有相同股票池和字段的请求也会命中缓存并处理得更快。

Python>> from qlib.data import D >> from qlib.data.filter import NameDFilter, ExpressionDFilter >> nameDFilter = NameDFilter(name_rule_re='SH[0-9]{4}55') >> expressionDFilter = ExpressionDFilter(rule_expression='$close>Ref($close,1)') >> instruments = D.instruments(market='csi300', filter_pipe=[nameDFilter, expressionDFilter]) >> fields = ['$close', '$volume', 'Ref($close, 1)', 'Mean($close, 3)', '$high-$low'] >> D.features(instruments, fields, start_time='2010-01-01', end_time='2017-12-31', freq='day').head().to_string() ' $close $volume Ref($close, 1) Mean($close, 3) $high-$low... instrument datetime... SH600655 2010-01-04 2699.567383 158193.328125 2619.070312 2626.097738 124.580566... 2010-01-08 2612.359619 77501.406250 2584.567627 2623.220133 83.373047... 2010-01-11 2712.982422 160852.390625 2612.359619 2636.636556 146.621582... 2010-01-12 2788.688232 164587.937500 2712.982422 2704.676758 128.413818... 2010-01-13 2790.604004 145460.453125 2788.688232 2764.091553 128.413818'有关特征的更多详细信息,请参阅特征 API。

注意

在客户端调用

D.features()时,使用参数disk_cache=0跳过数据集缓存,使用disk_cache=1生成并使用数据集缓存。此外,在服务器端调用时,用户可以使用disk_cache=2更新数据集缓存。当您构建复杂的表达式时,在一个字符串中实现所有表达式可能并不容易。例如,它看起来相当长且复杂:

Python>> from qlib.data import D >> data = D.features(["sh600519"], ["(($high / $close) + ($open / $close)) * (($high / $close) + ($open / $close)) / (($high / $close) + ($open / $close))"], start_time="20200101")但使用字符串并不是实现表达式的唯一方法。您也可以通过代码实现表达式。下面是一个与上面示例做同样事情的例子。

Python>> from qlib.data.ops import * >> f1 = Feature("high") / Feature("close") >> f2 = Feature("open") / Feature("close") >> f3 = f1 + f2 >> f4 = f3 * f3 / f3 >> data = D.features(["sh600519"], [f4], start_time="20200101") >> data.head()API

要了解如何使用数据,请转到 API 参考:数据 API。

活动:0 -

自定义模型集成

简介

Qlib 的模型库 (Model Zoo) 包含 LightGBM、MLP、LSTM 等模型。这些模型都是预测模型的示例。除了 Qlib 提供的默认模型,用户还可以将自己的自定义模型集成到 Qlib 中。

用户可以按照以下步骤集成自己的自定义模型:

-

定义一个自定义模型类,该类应为

qlib.model.base.Model的子类。 -

编写一个描述自定义模型路径和参数的配置文件。

-

测试自定义模型。

自定义模型类

自定义模型需要继承

qlib.model.base.Model并重写其中的方法。重写

__init__方法Qlib 会将初始化参数传递给

__init__方法。配置文件中模型的超参数必须与

__init__方法中定义的参数保持一致。代码示例:在以下示例中,配置文件中模型的超参数应包含

loss: mse等参数。Pythondef __init__(self, loss='mse', **kwargs): if loss not in {'mse', 'binary'}: raise NotImplementedError self._scorer = mean_squared_error if loss == 'mse' else roc_auc_score self._params.update(objective=loss, **kwargs) self._model = None重写

fit方法Qlib 调用

fit方法来训练模型。参数必须包括训练特征

dataset,这是在接口中设计的。参数可以包含一些具有默认值的可选参数,例如 GBDT 的

num_boost_round = 1000。代码示例:在以下示例中,

num_boost_round = 1000是一个可选参数。Pythondef fit(self, dataset: DatasetH, num_boost_round = 1000, **kwargs): # prepare dataset for lgb training and evaluation df_train, df_valid = dataset.prepare( ["train", "valid"], col_set=["feature", "label"], data_key=DataHandlerLP.DK_L ) x_train, y_train = df_train["feature"], df_train["label"] x_valid, y_valid = df_valid["feature"], df_valid["label"] # Lightgbm need 1D array as its label if y_train.values.ndim == 2 and y_train.values.shape[1] == 1: y_train, y_valid = np.squeeze(y_train.values), np.squeeze(y_valid.values) else: raise ValueError("LightGBM doesn't support multi-label training") dtrain = lgb.Dataset(x_train.values, label=y_train) dvalid = lgb.Dataset(x_valid.values, label=y_valid) # fit the model self.model = lgb.train( self.params, dtrain, num_boost_round=num_boost_round, valid_sets=[dtrain, dvalid], valid_names=["train", "valid"], early_stopping_rounds=early_stopping_rounds, verbose_eval=verbose_eval, evals_result=evals_result, **kwargs )重写

predict方法参数必须包含

dataset参数,该参数将用于获取测试数据集。返回预测得分。

有关

fit方法的参数类型,请参阅模型 API。代码示例:在以下示例中,用户需要使用 LightGBM 预测测试数据

x_test的标签(例如preds)并返回。Pythondef predict(self, dataset: DatasetH, **kwargs)-> pandas.Series: if self.model is None: raise ValueError("model is not fitted yet!") x_test = dataset.prepare("test", col_set="feature", data_key=DataHandlerLP.DK_I) return pd.Series(self.model.predict(x_test.values), index=x_test.index)重写

finetune方法(可选)此方法对用户是可选的。当用户希望在自己的模型上使用此方法时,他们应该继承

ModelFT基类,该基类包含了finetune的接口。参数必须包含

dataset参数。代码示例:在以下示例中,用户将使用 LightGBM 作为模型并对其进行微调。

Pythondef finetune(self, dataset: DatasetH, num_boost_round=10, verbose_eval=20): # Based on existing model and finetune by train more rounds dtrain, _ = self._prepare_data(dataset) self.model = lgb.train( self.params, dtrain, num_boost_round=num_boost_round, init_model=self.model, valid_sets=[dtrain], valid_names=["train"], verbose_eval=verbose_eval, )

配置文件

配置文件在工作流文档中有详细描述。为了将自定义模型集成到 Qlib 中,用户需要修改配置文件中的 “model” 字段。该配置描述了要使用哪个模型以及如何初始化它。

示例:以下示例描述了上述自定义 lightgbm 模型的配置文件中的

model字段,其中module_path是模块路径,class是类名,args是传递给__init__方法的超参数。字段中的所有参数都通过__init__中的**kwargs传递给self._params,除了loss = mse。model: class: LGBModel module_path: qlib.contrib.model.gbdt args: loss: mse colsample_bytree: 0.8879 learning_rate: 0.0421 subsample: 0.8789 lambda_l1: 205.6999 lambda_l2: 580.9768 max_depth: 8 num_leaves: 210 num_threads: 20用户可以在

examples/benchmarks中找到模型基准的配置文件。所有不同模型的配置都列在相应的模型文件夹下。

模型测试

假设配置文件是

examples/benchmarks/LightGBM/workflow_config_lightgbm.yaml,用户可以运行以下命令来测试自定义模型:cd examples # 避免在包含 `qlib` 的目录下运行程序 qrun benchmarks/LightGBM/workflow_config_lightgbm.yaml注意

qrun是 Qlib 的一个内置命令。此外,模型也可以作为单个模块进行测试。

examples/workflow_by_code.ipynb中给出了一个示例。

参考

要了解更多关于预测模型的信息,请参阅预测模型:模型训练与预测和模型 API。

活动:0 -

-

工作流:工作流管理

简介

Qlib 框架中的组件是松散耦合设计的。用户可以像示例中那样,使用这些组件构建自己的量化研究工作流。

此外,Qlib 还提供了更友好的接口

qrun,用于自动运行由配置定义好的整个工作流。运行整个工作流被称为一次执行。通过qrun,用户可以轻松启动一次执行,其中包含以下步骤:-

数据

-

加载

-

处理

-

切片

-

-

模型

-

训练和推断

-

保存和加载

-

-

评估

-

预测信号分析

-

回测

-

对于每一次执行,Qlib 都有一个完整的系统来跟踪在训练、推断和评估阶段生成的所有信息和工件。有关 Qlib 如何处理这些内容的更多信息,请参阅相关文档:记录器:实验管理。

完整示例

在深入细节之前,这里有一个

qrun的完整示例,它定义了典型的量化研究工作流。以下是一个典型的qrun配置文件。YAMLqlib_init: provider_uri: "~/.qlib/qlib_data/cn_data" region: cn market: &market csi300 benchmark: &benchmark SH000300 data_handler_config: &data_handler_config start_time: 2008-01-01 end_time: 2020-08-01 fit_start_time: 2008-01-01 fit_end_time: 2014-12-31 instruments: *market port_analysis_config: &port_analysis_config strategy: class: TopkDropoutStrategy module_path: qlib.contrib.strategy.strategy kwargs: topk: 50 n_drop: 5 signal: <PRED> backtest: start_time: 2017-01-01 end_time: 2020-08-01 account: 100000000 benchmark: *benchmark exchange_kwargs: limit_threshold: 0.095 deal_price: close open_cost: 0.0005 close_cost: 0.0015 min_cost: 5 task: model: class: LGBModel module_path: qlib.contrib.model.gbdt kwargs: loss: mse colsample_bytree: 0.8879 learning_rate: 0.0421 subsample: 0.8789 lambda_l1: 205.6999 lambda_l2: 580.9768 max_depth: 8 num_leaves: 210 num_threads: 20 dataset: class: DatasetH module_path: qlib.data.dataset kwargs: handler: class: Alpha158 module_path: qlib.contrib.data.handler kwargs: *data_handler_config segments: train: [2008-01-01, 2014-12-31] valid: [2015-01-01, 2016-12-31] test: [2017-01-01, 2020-08-01] record: - class: SignalRecord module_path: qlib.workflow.record_temp kwargs: {} - class: PortAnaRecord module_path: qlib.workflow.record_temp kwargs: config: *port_analysis_config将配置保存到

configuration.yaml后,用户只需一条命令即可启动工作流并测试他们的想法:qrun configuration.yaml如果用户想在调试模式下使用

qrun,请使用以下命令:python -m pdb qlib/workflow/cli.py examples/benchmarks/LightGBM/workflow_config_lightgbm_Alpha158.yaml注意

安装 Qlib 后,

qrun将位于您的$PATH目录中。注意

yaml文件中的符号&表示一个字段的锚点,当其他字段包含该参数作为值的一部分时非常有用。以上述配置文件为例,用户可以直接更改market和benchmark的值,而无需遍历整个配置文件。

配置文件

本节将详细介绍

qrun。在使用qrun之前,用户需要准备一个配置文件。以下内容展示了如何准备配置文件的每个部分。配置文件的设计逻辑非常简单。它预定义了固定的工作流,并为用户提供这个

yaml接口来定义如何初始化每个组件。它遵循init_instance_by_config的设计。它定义了 Qlib 每个组件的初始化,通常包括类和初始化参数。例如,以下

yaml和代码是等价的。YAMLmodel: class: LGBModel module_path: qlib.contrib.model.gbdt kwargs: loss: mse colsample_bytree: 0.8879 learning_rate: 0.0421 subsample: 0.8789 lambda_l1: 205.6999 lambda_l2: 580.9768 max_depth: 8 num_leaves: 210 num_threads: 20Pythonfrom qlib.contrib.model.gbdt import LGBModel kwargs = { "loss": "mse" , "colsample_bytree": 0.8879, "learning_rate": 0.0421, "subsample": 0.8789, "lambda_l1": 205.6999, "lambda_l2": 580.9768, "max_depth": 8, "num_leaves": 210, "num_threads": 20, } LGBModel(kwargs)Qlib 初始化部分

首先,配置文件需要包含几个用于 Qlib 初始化的基本参数。

YAMLprovider_uri: "~/.qlib/qlib_data/cn_data" region: cn每个字段的含义如下:

-

provider_uri:类型为str。Qlib 数据的 URI。例如,它可以是get_data.py加载数据后存储的目录。 -

region:-

如果

region == "us",Qlib 将以美股模式初始化。 -

如果

region == "cn",Qlib 将以 A股模式初始化。

-

注意

region的值应与provider_uri中存储的数据保持一致。任务部分

配置中的

task字段对应一个任务,其中包含三个不同子部分的参数:Model、Dataset 和 Record。模型部分

在

task字段中,model部分描述了用于训练和推断的模型的参数。有关基础 Model 类的更多信息,请参阅 Qlib 模型。YAMLmodel: class: LGBModel module_path: qlib.contrib.model.gbdt kwargs: loss: mse colsample_bytree: 0.8879 learning_rate: 0.0421 subsample: 0.8789 lambda_l1: 205.6999 lambda_l2: 580.9768 max_depth: 8 num_leaves: 210 num_threads: 20每个字段的含义如下:

-

class:类型为str。模型类的名称。 -

module_path:类型为str。模型在qlib中的路径。 -

kwargs:模型的关键字参数。有关更多信息,请参阅特定模型的实现:models。

注意

Qlib 提供了一个名为

init_instance_by_config的实用工具,用于使用包含class、module_path和kwargs字段的配置来初始化 Qlib 中的任何类。数据集部分

dataset字段描述了 Qlib 中 Dataset 模块的参数,以及 DataHandler 模块的参数。有关 Dataset 模块的更多信息,请参阅 Qlib 数据。DataHandler 的关键字参数配置如下:

YAMLdata_handler_config: &data_handler_config start_time: 2008-01-01 end_time: 2020-08-01 fit_start_time: 2008-01-01 fit_end_time: 2014-12-31 instruments: *market用户可以参考 DataHandler 的文档,以获取配置中每个字段的含义。

这是 Dataset 模块的配置,该模块将在训练和测试阶段负责数据预处理和切片。

YAMLdataset: class: DatasetH module_path: qlib.data.dataset kwargs: handler: class: Alpha158 module_path: qlib.contrib.data.handler kwargs: *data_handler_config segments: train: [2008-01-01, 2014-12-31] valid: [2015-01-01, 2016-12-31] test: [2017-01-01, 2020-08-01]记录部分

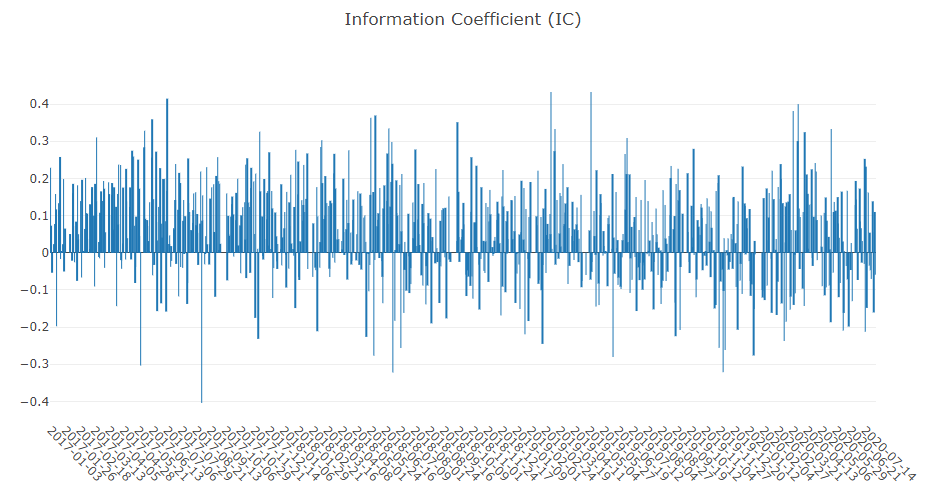

record字段是关于 Qlib 中 Record 模块的参数。Record 负责以标准格式跟踪训练过程和结果,例如信息系数 (IC) 和回测。以下脚本是回测及其使用的策略的配置:

YAMLport_analysis_config: &port_analysis_config strategy: class: TopkDropoutStrategy module_path: qlib.contrib.strategy.strategy kwargs: topk: 50 n_drop: 5 signal: <PRED> backtest: limit_threshold: 0.095 account: 100000000 benchmark: *benchmark deal_price: close open_cost: 0.0005 close_cost: 0.0015 min_cost: 5有关策略和回测配置中每个字段的含义,用户可以查阅文档:策略和回测。

这是不同记录模板(如

SignalRecord和PortAnaRecord)的配置详细信息:YAMLrecord: - class: SignalRecord module_path: qlib.workflow.record_temp kwargs: {} - class: PortAnaRecord module_path: qlib.workflow.record_temp kwargs: config: *port_analysis_config有关 Qlib 中 Record 模块的更多信息,用户可以参阅相关文档:记录。

活动:0 -

-

数据层:数据框架与使用

简介

数据层提供了用户友好的 API 来管理和检索数据。它提供了高性能的数据基础设施。

它专为量化投资而设计。例如,用户可以轻松地使用数据层构建公式化因子 (alphas)。有关更多详细信息,请参阅构建公式化因子。

数据层的介绍包括以下几个部分:

-

数据准备

-

数据 API

-

数据加载器

-

数据处理器

-

数据集

-

缓存

-

数据和缓存文件结构

下面是 Qlib 数据工作流的一个典型示例:

-

用户下载数据并将其转换为 Qlib 格式(文件名后缀为 .bin)。在此步骤中,通常只有一些基本数据(例如 OHLCV)存储在磁盘上。

-

基于 Qlib 的表达式引擎创建一些基本特征(例如 “Ref($close, 60) / $close”,即过去 60 个交易日的收益)。表达式引擎中支持的运算符可以在这里找到。此步骤通常在 Qlib 的数据加载器中实现,它是数据处理器的一个组件。

-

如果用户需要更复杂的数据处理(例如数据归一化),数据处理器支持用户自定义的处理器来处理数据(一些预定义的处理器可以在这里找到)。这些处理器与表达式引擎中的运算符不同。它专为一些难以在表达式引擎中用运算符支持的复杂数据处理方法而设计。

-

最后,数据集负责从数据处理器处理过的数据中为特定模型准备数据集。

数据准备

Qlib 格式数据

我们专门设计了一种数据结构来管理金融数据,有关详细信息,请参阅 Qlib 论文中的文件存储设计部分。此类数据将以

.bin为文件名后缀存储(我们将称之为 .bin 文件、.bin 格式或 Qlib 格式)。.bin 文件专为金融数据的科学计算而设计。Qlib 提供了两个现成的数据集,可通过此链接访问:

数据集 美股市场 A股市场 Alpha360 √ √ Alpha158 √ √ 此外,Qlib 还提供了一个高频数据集。用户可以通过此链接运行高频数据集示例。

Qlib 格式数据集

Qlib 提供了一个 .bin 格式的现成数据集,用户可以使用

scripts/get_data.py脚本下载 A股数据集,如下所示。用户还可以使用 numpy 加载 .bin 文件来验证数据。价格和成交量数据看起来与实际成交价不同,因为它们是复权的(复权价格)。然后您可能会发现不同数据源的复权价格可能不同。这是因为不同的数据源在复权方式上可能有所不同。Qlib 在复权时将每只股票第一个交易日的价格归一化为 1。用户可以利用$factor获取原始交易价格(例如,$close / $factor获取原始收盘价)。以下是关于 Qlib 价格复权的一些讨论。

https://github.com/microsoft/qlib/issues/991#issuecomment-1075252402

# 下载日数据 python scripts/get_data.py qlib_data --target_dir ~/.qlib/qlib_data/cn_data --region cn # 下载1分钟数据 python scripts/get_data.py qlib_data --target_dir ~/.qlib/qlib_data/qlib_cn_1min --region cn --interval 1min除了 A股数据,Qlib 还包含一个美股数据集,可以通过以下命令下载:

python scripts/get_data.py qlib_data --target_dir ~/.qlib/qlib_data/us_data --region us运行上述命令后,用户可以在

~/.qlib/qlib_data/cn_data和~/.qlib/qlib_data/us_data目录中分别找到 Qlib 格式的 A股和美股数据。Qlib 还在

scripts/data_collector中提供了脚本,帮助用户抓取互联网上的最新数据并将其转换为 Qlib 格式。当使用该数据集初始化 Qlib 后,用户可以使用它构建和评估自己的模型。有关更多详细信息,请参阅初始化。

日频数据的自动更新

建议用户先手动更新一次数据(

--trading_date 2021-05-25),然后再设置为自动更新。更多信息请参阅:yahoo collector。

每个交易日自动更新数据到 "qlib" 目录 (Linux):

使用 crontab: crontab -e。

设置定时任务:

* * * * 1-5 python <script path> update_data_to_bin --qlib_data_1d_dir <user data dir>

脚本路径:scripts/data_collector/yahoo/collector.py

手动更新数据:

python scripts/data_collector/yahoo/collector.py update_data_to_bin --qlib_data_1d_dir <user data dir> --trading_date <start date> --end_date <end date>

trading_date: 交易日开始日期

end_date: 交易日结束日期(不包含)

将 CSV 格式转换为 Qlib 格式

Qlib 提供了

scripts/dump_bin.py脚本,可以将任何符合正确格式的 CSV 格式数据转换为 .bin 文件(Qlib 格式)。除了下载准备好的演示数据,用户还可以直接从 Collector 下载演示数据作为 CSV 格式的参考。以下是一些示例:

对于日频数据:

python scripts/get_data.py download_data --file_name csv_data_cn.zip --target_dir ~/.qlib/csv_data/cn_data

对于 1 分钟数据:

python scripts/data_collector/yahoo/collector.py download_data --source_dir ~/.qlib/stock_data/source/cn_1min --region CN --start 2021-05-20 --end 2021-05-23 --delay 0.1 --interval 1min --limit_nums 10

用户也可以提供自己的 CSV 格式数据。但是,CSV 数据必须满足以下标准:

-

CSV 文件以特定股票命名,或者 CSV 文件包含一个股票名称列。

-

以股票命名 CSV 文件:

SH600000.csv、AAPL.csv(不区分大小写)。 -

CSV 文件包含一个股票名称列。在转储数据时,用户必须指定列名。这是一个示例:

python scripts/dump_bin.py dump_all ... --symbol_field_name symbol

其中数据格式如下:

symbol,close

SH600000,120

-

-

CSV 文件必须包含一个日期列,并且在转储数据时,用户必须指定日期列名。这是一个示例:

python scripts/dump_bin.py dump_all ... --date_field_name date

其中数据格式如下:

symbol,date,close,open,volume

SH600000,2020-11-01,120,121,12300000

SH600000,2020-11-02,123,120,12300000

假设用户在目录

~/.qlib/csv_data/my_data中准备了他们的 CSV 格式数据,他们可以运行以下命令来开始转换。python scripts/dump_bin.py dump_all --csv_path ~/.qlib/csv_data/my_data --qlib_dir ~/.qlib/qlib_data/my_data --include_fields open,close,high,low,volume,factor对于转储数据到 .bin 文件时支持的其他参数,用户可以通过运行以下命令来获取信息:

python dump_bin.py dump_all --help

转换后,用户可以在

~/.qlib/qlib_data/my_data目录中找到他们的 Qlib 格式数据。注意

--include_fields的参数应与 CSV 文件的列名相对应。Qlib 提供的数据集的列名至少应包含open、close、high、low、volume和factor。-

open:复权开盘价 -

close:复权收盘价 -

high:复权最高价 -

low:复权最低价 -

volume:复权交易量 -

factor:复权因子。通常,factor = 复权价格 / 原始价格,复权价格参考:split adjusted。

在 Qlib 数据处理的约定中,如果股票停牌,

open、close、high、low、volume、money和factor将被设置为 NaN。如果您想使用无法通过 OCHLV 计算的自定义因子,如 PE、EPS 等,您可以将其与 OHCLV 一起添加到 CSV 文件中,然后将其转储为 Qlib 格式数据。检查数据健康状况

Qlib 提供了一个脚本来检查数据的健康状况。

主要检查点如下:

-

检查 DataFrame 中是否有任何数据缺失。

-

检查 OHLCV 列中是否有任何超出阈值的大幅阶跃变化。

-

检查 DataFrame 中是否缺少任何必需的列 (OLHCV)。

-

检查 DataFrame 中是否缺少

factor列。

您可以运行以下命令来检查数据是否健康。

对于日频数据:

python scripts/check_data_health.py check_data --qlib_dir ~/.qlib/qlib_data/cn_data

对于 1 分钟数据:

python scripts/check_data_health.py check_data --qlib_dir ~/.qlib/qlib_data/cn_data_1min --freq 1min

当然,您还可以添加一些参数来调整测试结果。

可用参数如下:

-

freq:数据频率。 -

large_step_threshold_price:允许的最大价格变化。 -

large_step_threshold_volume:允许的最大成交量变化。 -

missing_data_num:允许数据为空的最大值。

您可以运行以下命令来检查数据是否健康。

对于日频数据:

python scripts/check_data_health.py check_data --qlib_dir ~/.qlib/qlib_data/cn_data --missing_data_num 30055 --large_step_threshold_volume 94485 --large_step_threshold_price 20

对于 1 分钟数据:

python scripts/check_data_health.py check_data --qlib_dir ~/.qlib/qlib_data/cn_data --freq 1min --missing_data_num 35806 --large_step_threshold_volume 3205452000000 --large_step_threshold_price 0.91

股票池 (市场)

Qlib 将股票池定义为股票列表及其日期范围。可以按如下方式导入预定义的股票池(例如 csi300)。

python collector.py --index_name CSI300 --qlib_dir <user qlib data dir> --method parse_instruments多种股票模式

Qlib 现在为用户提供了两种不同的股票模式:A股模式和美股模式。这两种模式的一些不同设置如下:

区域 交易单位 涨跌幅限制阈值 A股 100 0.099 美股 1 None 交易单位定义了可用于交易的股票数量单位,涨跌幅限制阈值定义了股票涨跌百分比的界限。

如果用户在 A股模式下使用 Qlib,则需要 A股数据。用户可以按照以下步骤在 A股模式下使用 Qlib:

-

下载 Qlib 格式的 A股数据,请参阅 Qlib 格式数据集一节。

-

在 A股模式下初始化 Qlib。

假设用户将 Qlib 格式数据下载到 ~/.qlib/qlib_data/cn_data 目录中。用户只需按如下方式初始化 Qlib 即可。

Pythonfrom qlib.constant import REG_CN qlib.init(provider_uri='~/.qlib/qlib_data/cn_data', region=REG_CN)

如果用户在美股模式下使用 Qlib,则需要美股数据。Qlib 也提供了下载美股数据的脚本。用户可以按照以下步骤在美股模式下使用 Qlib:

-

下载 Qlib 格式的美股数据,请参阅 Qlib 格式数据集一节。

-

在美股模式下初始化 Qlib。

假设用户将 Qlib 格式数据准备在 ~/.qlib/qlib_data/us_data 目录中。用户只需按如下方式初始化 Qlib 即可。

Pythonfrom qlib.config import REG_US qlib.init(provider_uri='~/.qlib/qlib_data/us_data', region=REG_US)

注意

我们非常欢迎新的数据源的 PR!用户可以将抓取数据的代码作为 PR 提交,就像这里的示例一样。然后,我们将在我们的服务器上使用该代码创建数据缓存,其他用户可以直接使用。

数据 API

数据检索

用户可以使用

qlib.data中的 API 检索数据,请参阅数据检索。特征

Qlib 提供了

Feature和ExpressionOps以根据用户的需求获取特征。-

Feature:从数据提供者加载数据。用户可以获取$high、$low、$open、$close等特征,这些特征应与--include_fields的参数相对应,请参阅将 CSV 格式转换为 Qlib 格式一节。 -

ExpressionOps:ExpressionOps将使用运算符进行特征构建。要了解更多关于运算符的信息,请参阅运算符 API。此外,Qlib 支持用户定义自己的自定义运算符,tests/test_register_ops.py中给出了一个示例。

要了解更多关于特征的信息,请参阅特征 API。

过滤器

Qlib 提供了

NameDFilter和ExpressionDFilter来根据用户的需求过滤成分股。-

NameDFilter:动态名称过滤器。根据规范的名称格式过滤成分股。需要一个名称规则正则表达式。 -

ExpressionDFilter:动态表达式过滤器。根据某个表达式过滤成分股。需要一个表示某个特征字段的表达式规则。-

基本特征过滤器:

rule_expression = '$close/$open>5' -

横截面特征过滤器:

rule_expression = '$rank($close)<10' -

时间序列特征过滤器:

rule_expression = '$Ref($close, 3)>100'

-

下面是一个简单的示例,展示了如何在基本的 Qlib 工作流配置文件中使用过滤器:

YAMLfilter: &filter filter_type: ExpressionDFilter rule_expression: "Ref($close, -2) / Ref($close, -1) > 1" filter_start_time: 2010-01-01 filter_end_time: 2010-01-07 keep: False data_handler_config: &data_handler_config start_time: 2010-01-01 end_time: 2021-01-22 fit_start_time: 2010-01-01 fit_end_time: 2015-12-31 instruments: *market filter_pipe: [*filter]要了解更多关于过滤器的信息,请参阅过滤器 API。

参考

要了解更多关于数据 API 的信息,请参阅数据 API。

数据加载器

Qlib 中的数据加载器旨在从原始数据源加载原始数据。它将在数据处理器模块中加载和使用。

QlibDataLoader

Qlib 中的

QlibDataLoader类是一个这样的接口,它允许用户从 Qlib 数据源加载原始数据。StaticDataLoader

Qlib 中的

StaticDataLoader类是一个这样的接口,它允许用户从文件或作为提供的数据加载原始数据。接口

以下是

QlibDataLoader类的一些接口:class qlib.data.dataset.loader.DataLoaderDataLoader旨在从原始数据源加载原始数据。abstract load(instruments, start_time=None, end_time=None) -> DataFrame将数据作为

pd.DataFrame加载。数据示例(列的多级索引是可选的):

feature label

$close $volume Ref($close, 1) Mean($close, 3) $high-$low LABEL0

datetime instrument

2010-01-04 SH600000 81.807068 17145150.0 83.737389 83.016739 2.741058 0.0032

SH600004 13.313329 11800983.0 13.313329 13.317701 0.183632 0.0042

SH600005 37.796539 12231662.0 38.258602 37.919757 0.970325 0.0289

参数:

-

instruments(str或dict) – 可以是市场名称,也可以是InstrumentProvider生成的成分股配置文件。如果instruments的值为None,则表示不进行过滤。 -

start_time(str) – 时间范围的开始。 -

end_time(str) – 时间范围的结束。

返回:

从底层源加载的数据。

返回类型:

pd.DataFrame

引发:

KeyError – 如果不支持成分股过滤器,则引发 KeyError。

API

要了解更多关于数据加载器的信息,请参阅数据加载器 API。

数据处理器

Qlib 中的数据处理器模块旨在处理大多数模型将使用的常见数据处理方法。

用户可以通过

qrun在自动化工作流中使用数据处理器,有关更多详细信息,请参阅工作流:工作流管理。DataHandlerLP

除了在

qrun的自动化工作流中使用数据处理器外,数据处理器还可以作为一个独立的模块使用,用户可以通过它轻松地预处理数据(标准化、删除 NaN 等)和构建数据集。为了实现这一点,Qlib 提供了一个基类

qlib.data.dataset.DataHandlerLP。这个类的核心思想是:我们将拥有一些可学习的处理器(Processors),它们可以学习数据处理的参数(例如,zscore 归一化的参数)。当新数据到来时,这些训练过的处理器可以处理新数据,从而可以高效地处理实时数据。有关处理器的更多信息,将在下一小节中列出。接口

以下是

DataHandlerLP提供的一些重要接口:class qlib.data.dataset.handler.DataHandlerLP(instruments=None, start_time=None, end_time=None, data_loader: dict | str | DataLoader | None = None, infer_processors: List = [], learn_processors: List = [], shared_processors: List = [], process_type='append', drop_raw=False, **kwargs)带**(L)可学习 (P)处理器**的数据处理器。

此处理器将生成三部分

pd.DataFrame格式的数据。-

DK_R / self._data: 从加载器加载的原始数据 -

DK_I / self._infer: 为推断处理的数据 -

DK_L / self._learn: 为学习模型处理的数据

使用不同的处理器工作流进行学习和推断的动机是多方面的。以下是一些例子:

-

学习和推断的成分股范围可能不同。

-

某些样本的处理可能依赖于标签(例如,一些达到涨跌停的样本可能需要额外处理或被删除)。

-

这些处理器仅适用于学习阶段。

数据处理器提示:

为了减少内存开销:

drop_raw=True: 这将就地修改原始数据;

请注意,self._infer 或 self._learn 等处理过的数据与 Qlib 数据集中的 segments(如“train”和“test”)是不同的概念。

-

self._infer或self._learn等处理过的数据是使用不同处理器处理的底层数据。 -

Qlib 数据集中的 segments(如“train”和“test”)只是查询数据时的时间分段(“train”在时间上通常位于“test”之前)。

例如,您可以在“train”时间分段中查询由 infer_processors 处理的 data._infer。

__init__(instruments=None, start_time=None, end_time=None, data_loader: dict | str | DataLoader | None = None, infer_processors: List = [], learn_processors: List = [], shared_processors: List = [], process_type='append', drop_raw=False, **kwargs)参数:

-

infer_processors (list) –

用于生成推断数据的一系列处理器描述信息。

描述信息的示例:

-

类名和 kwargs:

JSON{ "class": "MinMaxNorm", "kwargs": { "fit_start_time": "20080101", "fit_end_time": "20121231" } }-

仅类名:

"DropnaFeature" -

处理器对象实例

-

-

learn_processors(list) – 类似于infer_processors,但用于生成模型学习数据。 -

process_type(str) –-

PTYPE_I = 'independent'-

self._infer将由infer_processors处理 -

self._learn将由learn_processors处理

-

-

PTYPE_A = 'append'-

self._infer将由infer_processors处理 -

self._learn将由infer_processors + learn_processors处理 -

(例如

self._infer由learn_processors处理)

-

-

-

drop_raw(bool) – 是否删除原始数据。

fit():不处理数据,仅拟合数据。

fit_process_data():拟合并处理数据。前一个处理器的输出将作为 fit 的输入。

process_data(with_fit: bool = False):处理数据。如有必要,运行 processor.fit。

符号:

(data) [processor]# self.process_type == DataHandlerLP.PTYPE_I 的数据处理流程 (self._data)-[shared_processors]-(_shared_df)-[learn_processors]-(_learn_df) \ -[infer_processors]-(_infer_df) # self.process_type == DataHandlerLP.PTYPE_A 的数据处理流程 (self._data)-[shared_processors]-(_shared_df)-[infer_processors]-(_infer_df)-[learn_processors]-(_learn_df)参数:

-

with_fit(bool) – 前一个处理器的输出将作为fit的输入。

config(processor_kwargs: dict | None = None, **kwargs)

数据配置。# 从数据源加载哪些数据。

此方法将在从数据集加载腌制(pickled)处理器时使用。数据将使用不同的时间范围进行初始化。

setup_data(init_type: str = 'fit_seq', **kwargs)

在多次运行初始化时设置数据。

参数:

-

init_type(str) – 上面列出的IT_*类型。 -

enable_cache (bool) –

默认值为 false:

如果 enable_cache == True:处理过的数据将保存在磁盘上,当下次调用 init 时,处理器将直接从磁盘加载缓存的数据。

fetch(selector: Timestamp | slice | str = slice(None, None, None), level: str | int = 'datetime', col_set='__all', data_key: Literal['raw', 'infer', 'learn'] = 'infer', squeeze: bool = False, proc_func: Callable | None = None) -> DataFrame

从底层数据源获取数据。

参数:

-

selector(Union[pd.Timestamp, slice, str]) – 描述如何按索引选择数据。 -

level(Union[str, int]) – 选择哪个索引级别的数据。 -

col_set(str) – 选择一组有意义的列(例如 features, columns)。 -

data_key(str) – 获取的数据:DK_*。 -

proc_func(Callable) – 请参阅DataHandler.fetch的文档。

返回类型:pd.DataFrame

引发:NotImplementedError –

get_cols(col_set='__all', data_key: Literal['raw', 'infer', 'learn'] = 'infer') -> list

获取列名。

参数:

-

col_set(str) – 选择一组有意义的列(例如 features, columns)。 -

data_key(DATA_KEY_TYPE) – 获取的数据:DK_*。

返回:

列名列表。

返回类型:

list

classmethod cast(handler: DataHandlerLP) -> DataHandlerLP

动机:

用户在他的自定义包中创建了一个数据处理器。然后,他想将处理过的处理器分享给其他用户,而无需引入包依赖和复杂的数据处理逻辑。这个类通过将类转换为 DataHandlerLP 并仅保留处理过的数据来实现这一点。

参数:

-

handler (DataHandlerLP) – DataHandlerLP 的子类。

返回:

转换后的处理过的数据。

返回类型:

DataHandlerLP

classmethod from_df(df: DataFrame) -> DataHandlerLP

动机:当用户想要快速获取一个数据处理器时。

创建的数据处理器将只有一个共享 DataFrame,不带处理器。创建处理器后,用户通常会想将其转储以供重用。这是一个典型的用例:

Pythonfrom qlib.data.dataset import DataHandlerLP dh = DataHandlerLP.from_df(df) dh.to_pickle(fname, dump_all=True)TODO: -

StaticDataLoader相当慢。它不必再次复制数据…如果用户想通过配置加载特征和标签,可以定义一个新的处理器并调用 qlib.contrib.data.handler.Alpha158 的静态方法 parse_config_to_fields。

此外,用户还可以将 qlib.contrib.data.processor.ConfigSectionProcessor 传递给新的处理器,它提供了一些用于通过配置定义的特征的预处理方法。

处理器

Qlib 中的处理器模块被设计为可学习的,它负责处理数据处理,例如归一化和删除空/NaN 特征/标签。

Qlib 提供了以下处理器:

-

DropnaProcessor:删除 N/A 特征的处理器。 -

DropnaLabel:删除 N/A 标签的处理器。 -

TanhProcess:使用tanh处理噪声数据的处理器。 -

ProcessInf:处理无穷大值的处理器,它将被替换为该列的平均值。 -

Fillna:处理 N/A 值的处理器,它将用 0 或其他给定数字填充 N/A 值。 -

MinMaxNorm:应用 Min-Max 归一化的处理器。 -

ZscoreNorm:应用 Z-score 归一化的处理器。 -

RobustZScoreNorm:应用鲁棒 Z-score 归一化的处理器。 -

CSZScoreNorm:应用横截面 Z-score 归一化的处理器。 -

CSRankNorm:应用横截面排名归一化的处理器。 -

CSZFillna:以横截面方式用该列的平均值填充 N/A 值的处理器。

用户还可以通过继承 Processor 的基类来创建自己的处理器。有关更多信息,请参阅所有处理器的实现(处理器链接)。

要了解更多关于处理器的信息,请参阅处理器 API。

示例

数据处理器可以通过修改配置文件与 qrun 一起运行,也可以作为一个独立的模块使用。

要了解更多关于如何与 qrun 一起运行数据处理器的信息,请参阅工作流:工作流管理。

Qlib 提供了已实现的数据处理器

Alpha158。以下示例展示了如何将Alpha158作为一个独立的模块运行。注意

用户需要先用

qlib.init初始化 Qlib,请参阅初始化。Pythonimport qlib from qlib.contrib.data.handler import Alpha158 data_handler_config = { "start_time": "2008-01-01", "end_time": "2020-08-01", "fit_start_time": "2008-01-01", "fit_end_time": "2014-12-31", "instruments": "csi300", } if __name__ == "__main__": qlib.init() h = Alpha158(**data_handler_config) # 获取数据的所有列 print(h.get_cols()) # 获取所有标签 print(h.fetch(col_set="label")) # 获取所有特征 print(h.fetch(col_set="feature"))注意

在 Alpha158 中,Qlib 使用的标签是

Ref($close, -2)/Ref($close, -1) - 1,这意味着从 T+1 到 T+2 的变化,而不是Ref($close, -1)/$close - 1。原因是在获取 A股的 T 日收盘价时,股票可以在 T+1 日买入,在 T+2 日卖出。API

要了解更多关于数据处理器的信息,请参阅数据处理器 API。

数据集

Qlib 中的数据集模块旨在为模型训练和推断准备数据。

该模块的动机是我们希望最大化不同模型处理适合其自身的数据的灵活性。该模块赋予模型以独特方式处理其数据的灵活性。例如,像 GBDT 这样的模型可能在包含

nan或None值的数据上运行良好,而像 MLP 这样的神经网络模型则会因此崩溃。如果用户的模型需要以不同的方式处理数据,用户可以实现自己的 Dataset 类。如果模型的数据处理不特殊,则可以直接使用

DatasetH。DatasetH类是带有数据处理器的数据集。这是该类最重要的接口:class qlib.data.dataset.__init__.DatasetH(handler: Dict | DataHandler, segments: Dict[str, Tuple], fetch_kwargs: Dict = {}, **kwargs)带数据处理器的数据集。

用户应尽量将数据预处理功能放入处理器中。只有以下数据处理功能应放在数据集中:

-

与特定模型相关的处理。

-

与数据拆分相关的处理。

__init__(handler: Dict | DataHandler, segments: Dict[str, Tuple], fetch_kwargs: Dict = {}, **kwargs)

设置底层数据。

参数:

-

handler (Union[dict, DataHandler]) –

处理器可以是:

-

DataHandler的实例 -

DataHandler的配置。请参阅数据处理器

-

-

segments (dict) –

描述如何分割数据。以下是一些示例:

-

'segments': {

'train': ("2008-01-01", "2014-12-31"),

'valid': ("2017-01-01", "2020-08-01",),

'test': ("2015-01-01", "2016-12-31",),

}

-

'segments': {

'insample': ("2008-01-01", "2014-12-31"),

'outsample': ("2017-01-01", "2020-08-01",),

}

-

-

config(handler_kwargs: dict | None = None, **kwargs)

初始化 DatasetH

参数:

-

handler_kwargs (dict) –

DataHandler 的配置,可以包括以下参数:

-

DataHandler.conf_data的参数,例如instruments、start_time和end_time。

-

-

kwargs (dict) –

DatasetH 的配置,例如:

-

segmentsdict-

segments的配置与self.__init__中的相同。

-

-

-

-

setup_data(handler_kwargs: dict | None = None, **kwargs)

设置数据。

参数:

-

handler_kwargs (dict) –

DataHandler 的初始化参数,可以包括以下参数:

-

init_type:Handler的初始化类型 -

enable_cache: 是否启用缓存

-

-

-

prepare(segments: List[str] | Tuple[str] | str | slice | Index, col_set='__all', data_key='infer', **kwargs) -> List[DataFrame] | DataFrame

为学习和推断准备数据。

参数:

-

segments (Union[List[Text], Tuple[Text], Text, slice]) –

描述要准备的数据的范围。以下是一些示例:

-

'train' -

['train', 'valid']

-

-

col_set (str) –

获取数据时将传递给 self.handler。TODO:使其自动化:

-

为测试数据选择

DK_I -

为训练数据选择

DK_L

-

-

data_key(str) – 要获取的数据:DK_*。默认是DK_I,表示获取用于推断的数据。 -

kwargs –

kwargs 可能包含的参数:

-

flt_colstr-

它只存在于 TSDatasetH 中,可用于添加一个数据列(True 或 False)来过滤数据。此参数仅在它是 TSDatasetH 的实例时受支持。

返回类型:Union[List[pd.DataFrame], pd.DataFrame]

引发:NotImplementedError –

-

-

-

API

要了解更多关于数据集的信息,请参阅数据集 API。

缓存

缓存是一个可选模块,通过将一些常用数据保存为缓存文件来帮助加速数据提供。Qlib 提供了一个

Memcache类来缓存内存中最常用的数据,一个可继承的ExpressionCache类,以及一个可继承的DatasetCache类。全局内存缓存

Memcache是一个全局内存缓存机制,由三个MemCacheUnit实例组成,用于缓存日历、成分股和特征。MemCache在cache.py中全局定义为H。用户可以使用H['c'],H['i'],H['f']来获取/设置内存缓存。class qlib.data.cache.MemCacheUnit(*args, **kwargs)

内存缓存单元。

__init__(*args, **kwargs)

property limited

内存缓存是否受限。

class qlib.data.cache.MemCache(mem_cache_size_limit=None, limit_type='length')

内存缓存。

__init__(mem_cache_size_limit=None, limit_type='length')

参数:

-

mem_cache_size_limit– 缓存的最大大小。 -

limit_type–length或sizeof;length(调用函数:len),size(调用函数:sys.getsizeof)。

表达式缓存

ExpressionCache是一个缓存机制,用于保存Mean($close, 5)等表达式。用户可以继承这个基类来定义自己的缓存机制,以保存表达式,步骤如下。-

重写

self._uri方法以定义如何生成缓存文件路径。 -

重写

self._expression方法以定义将缓存哪些数据以及如何缓存。

以下是接口的详细信息:

class qlib.data.cache.ExpressionCache(provider)

表达式缓存机制基类。

此类用于使用自定义的表达式缓存机制封装表达式提供者。

注意

重写 _uri 和 _expression 方法来创建您自己的表达式缓存机制。

expression(instrument, field, start_time, end_time, freq)

获取表达式数据。

注意

与表达式提供者中的 expression 方法接口相同。

update(cache_uri: str | Path, freq: str = 'day')

将表达式缓存更新到最新的日历。

重写此方法以定义如何根据用户自己的缓存机制更新表达式缓存。

参数:

-

cache_uri(str或Path) – 表达式缓存文件的完整 URI(包括目录路径)。 -

freq(str) –

返回:

0(更新成功)/ 1(无需更新)/ 2(更新失败)。

返回类型:int

Qlib 目前提供了已实现的磁盘缓存

DiskExpressionCache,它继承自ExpressionCache。表达式数据将存储在磁盘上。数据集缓存

DatasetCache是一个缓存机制,用于保存数据集。一个特定的数据集由股票池配置(或一系列成分股,尽管不推荐)、表达式列表或静态特征字段、所收集特征的开始时间和结束时间以及频率来规范。用户可以继承这个基类来定义自己的缓存机制,以保存数据集,步骤如下。-

重写

self._uri方法以定义如何生成缓存文件路径。 -

重写

self._expression方法以定义将缓存哪些数据以及如何缓存。

以下是接口的详细信息:

class qlib.data.cache.DatasetCache(provider)

数据集缓存机制基类。

此类用于使用自定义的数据集缓存机制封装数据集提供者。

注意

重写 _uri 和 _dataset 方法来创建您自己的数据集缓存机制。

dataset(instruments, fields, start_time=None, end_time=None, freq='day', disk_cache=1, inst_processors=[])

获取特征数据集。

注意

与数据集提供者中的 dataset 方法接口相同。

注意

服务器使用 redis_lock 来确保不会触发读写冲突,但客户端读取器不在考虑范围内。

update(cache_uri: str | Path, freq: str = 'day')

将数据集缓存更新到最新的日历。

重写此方法以定义如何根据用户自己的缓存机制更新数据集缓存。

参数:

-

cache_uri(str或Path) – 数据集缓存文件的完整 URI(包括目录路径)。 -

freq(str) –

返回:

0(更新成功)/ 1(无需更新)/ 2(更新失败)。

返回类型:int

static cache_to_origin_data(data, fields)

将缓存数据转换为原始数据。

参数:

-

data–pd.DataFrame,缓存数据。 -

fields – 特征字段。

返回:

pd.DataFrame。

static normalize_uri_args(instruments, fields, freq)

规范化 URI 参数。

Qlib 目前提供了已实现的磁盘缓存

DiskDatasetCache,它继承自DatasetCache。数据集数据将存储在磁盘上。

数据和缓存文件结构

我们专门设计了一种文件结构来管理数据和缓存,有关详细信息,请参阅 Qlib 论文中的文件存储设计部分。数据和缓存的文件结构如下。

-

data/-

[raw data]由数据提供者更新-

calendars/-

day.txt

-

-

instruments/-

all.txt -

csi500.txt -

...

-

-

features/-

sh600000/-

open.day.bin -

close.day.bin -

...

-

-

...

-

-

-

[cached data]原始数据更新时更新-

calculated features/-

sh600000/-

[hash(instrtument, field_expression, freq)]-

all-time expression -cache data file(全时间表达式缓存数据文件) -

.meta:一个辅助元文件,记录成分股名称、字段名称、频率和访问次数。

-

-

-

...

-

-

cache/-

[hash(stockpool_config, field_expression_list, freq)]-

all-time Dataset-cache data file(全时间数据集缓存数据文件) -

.meta:一个辅助元文件,记录股票池配置、字段名称和访问次数。 -

.index:一个辅助索引文件,记录所有日历的行索引。

-

-

...

-

-

-

活动:0 -

-

预测模型:模型训练与预测

简介

预测模型旨在为股票生成预测分数。用户可以通过

qrun在自动化工作流中使用预测模型,请参阅工作流:工作流管理。由于 Qlib 中的组件采用松散耦合的设计,预测模型也可以作为一个独立的模块使用。

基类与接口

Qlib 提供了一个基类

qlib.model.base.Model,所有模型都应继承自该类。该基类提供了以下接口:

class qlib.model.base.Model

可学习的模型

fit(dataset: Dataset, reweighter: Reweighter)

从基础模型中学习模型。

注意

学习到的模型的属性名称不应以 _ 开头。这样模型就可以被转储到磁盘。

以下代码示例展示了如何从数据集中检索

x_train、y_train和w_train:Python# get features and labels df_train, df_valid = dataset.prepare( ["train", "valid"], col_set=["feature", "label"], data_key=DataHandlerLP.DK_L) x_train, y_train = df_train["feature"], df_train["label"] x_valid, y_valid = df_valid["feature"], df_valid["label"] # get weights try: wdf_train, wdf_valid = dataset.prepare(["train", "valid"], col_set=["weight"], data_key=DataHandlerLP.DK_L) w_train, w_valid = wdf_train["weight"], wdf_valid["weight"] except KeyError as e: w_train = pd.DataFrame(np.ones_like(y_train.values), index=y_train.index) w_valid = pd.DataFrame(np.ones_like(y_valid.values), index=y_valid.index)参数:

-

dataset(Dataset) – 数据集将生成用于模型训练的处理过的数据。

abstract predict(dataset: Dataset, segment: str | slice = 'test') -> object

给定数据集进行预测。

参数:

-

dataset(Dataset) – 数据集将生成用于模型训练的处理过的数据集。 -

segment (Text or slice) – 数据集将使用此分段来准备数据。(默认为 test)

返回类型:

具有特定类型(例如 pandas.Series)的预测结果。

Qlib 还提供了一个基类

qlib.model.base.ModelFT,其中包含了微调模型的方法。对于

finetune等其他接口,请参阅模型 API。

示例

Qlib 的模型库 (Model Zoo) 包含 LightGBM、MLP、LSTM 等模型。这些模型被视为预测模型的基线。以下步骤展示了如何将

LightGBM作为独立模块运行。-

首先,使用

qlib.init初始化 Qlib,请参阅初始化。 -

运行以下代码以获取预测分数

pred_score。

Pythonfrom qlib.contrib.model.gbdt import LGBModel from qlib.contrib.data.handler import Alpha158 from qlib.utils import init_instance_by_config, flatten_dict from qlib.workflow import R from qlib.workflow.record_temp import SignalRecord, PortAnaRecord market = "csi300" benchmark = "SH000300" data_handler_config = { "start_time": "2008-01-01", "end_time": "2020-08-01", "fit_start_time": "2008-01-01", "fit_end_time": "2014-12-31", "instruments": market, } task = { "model": { "class": "LGBModel", "module_path": "qlib.contrib.model.gbdt", "kwargs": { "loss": "mse", "colsample_bytree": 0.8879, "learning_rate": 0.0421, "subsample": 0.8789, "lambda_l1": 205.6999, "lambda_l2": 580.9768, "max_depth": 8, "num_leaves": 210, "num_threads": 20, }, }, "dataset": { "class": "DatasetH", "module_path": "qlib.data.dataset", "kwargs": { "handler": { "class": "Alpha158", "module_path": "qlib.contrib.data.handler", "kwargs": data_handler_config, }, "segments": { "train": ("2008-01-01", "2014-12-31"), "valid": ("2015-01-01", "2016-12-31"), "test": ("2017-01-01", "2020-08-01"), }, }, }, } # 模型初始化 model = init_instance_by_config(task["model"]) dataset = init_instance_by_config(task["dataset"]) # 开始实验 with R.start(experiment_name="workflow"): # 训练 R.log_params(**flatten_dict(task)) model.fit(dataset) # 预测 recorder = R.get_recorder() sr = SignalRecord(model, dataset, recorder) sr.generate()注意

Alpha158 是 Qlib 提供的数据处理器,请参阅数据处理器。SignalRecord 是 Qlib 中的记录模板,请参阅工作流。

此外,上述示例已在

examples/train_backtest_analyze.ipynb中给出。从技术上讲,模型预测的含义取决于用户设计的标签设置。默认情况下,分数的含义通常是预测模型对成分股的评级。分数越高,成分股的利润潜力越大。

自定义模型

Qlib 支持自定义模型。如果用户有兴趣自定义自己的模型并将其集成到 Qlib 中,请参阅自定义模型集成。

API

请参阅模型 API。

活动:0 -

-

投资组合策略:投资组合管理

简介

投资组合策略旨在采用不同的投资组合策略,这意味着用户可以基于预测模型的预测分数采用不同的算法来生成投资组合。用户可以通过 Workflow 模块在自动化工作流中使用投资组合策略,请参阅工作流:工作流管理。

由于 Qlib 中的组件采用松散耦合的设计,投资组合策略也可以作为一个独立的模块使用。

Qlib 提供了几种已实现的投资组合策略。此外,Qlib 支持自定义策略,用户可以根据自己的需求自定义策略。

在用户指定模型(预测信号)和策略后,运行回测将帮助用户检查自定义模型(预测信号)/策略的性能。

基类与接口

BaseStrategy

Qlib 提供了一个基类

qlib.strategy.base.BaseStrategy。所有策略类都需要继承该基类并实现其接口。generate_trade_decision:

generate_trade_decision 是一个关键接口,它在每个交易时段生成交易决策。调用此方法的频率取决于执行器频率("time_per_step" 默认为 "day")。但交易频率可以由用户的实现决定。例如,如果用户希望每周交易,而执行器中的 time_per_step 是 "day",则用户可以每周返回非空的 TradeDecision(否则返回空,像这样)。

用户可以继承

BaseStrategy来自定义他们的策略类。WeightStrategyBase

Qlib 还提供了一个类

qlib.contrib.strategy.WeightStrategyBase,它是BaseStrategy的子类。WeightStrategyBase只关注目标头寸,并根据头寸自动生成订单列表。它提供了generate_target_weight_position接口。generate_target_weight_position:

根据当前头寸和交易日期生成目标头寸。输出的权重分布不考虑现金。

返回目标头寸。

注意

这里的目标头寸是指总资产的目标百分比。

WeightStrategyBase实现了generate_order_list接口,其处理过程如下。-

调用

generate_target_weight_position方法生成目标头寸。 -

从目标头寸生成股票的目标数量。

-

从目标数量生成订单列表。

用户可以继承

WeightStrategyBase并实现generate_target_weight_position接口来自定义他们的策略类,该策略类只关注目标头寸。

已实现的策略

Qlib 提供了一个名为

TopkDropoutStrategy的已实现的策略类。TopkDropoutStrategy

TopkDropoutStrategy是BaseStrategy的子类,并实现了generate_order_list接口,其过程如下。-

采用 Topk-Drop 算法计算每只股票的目标数量。

注意

Topk-Drop 算法有两个参数:

-

Topk:持有的股票数量。 -

Drop:每个交易日卖出的股票数量。

通常,当前持有的股票数量是 Topk,除了交易开始时期为零。对于每个交易日,设 d 是当前持有的股票中,按预测分数从高到低排名时排名 gt K 的股票数量。然后将卖出当前持有的 d 只预测分数最差的股票,并买入相同数量的未持有但预测分数最佳的股票。

通常,d = Drop,尤其是在候选股票池很大,K 很大且 Drop 很小的情况下。

在大多数情况下,TopkDrop 算法每天卖出和买入 Drop 只股票,这使得换手率为 2 * Drop / K。

下图说明了一个典型的场景。

-

-

从目标数量生成订单列表。

EnhancedIndexingStrategy

EnhancedIndexingStrategy 增强型指数化结合了主动管理和被动管理的艺术,旨在在控制风险敞口(又称跟踪误差)的同时,在投资组合回报方面跑赢基准指数(例如,标准普尔 500 指数)。

更多信息请参阅 qlib.contrib.strategy.signal_strategy.EnhancedIndexingStrategy 和 qlib.contrib.strategy.optimizer.enhanced_indexing.EnhancedIndexingOptimizer。

用法与示例

首先,用户可以创建一个模型来获取交易信号(在以下情况下变量名为

pred_score)。预测分数

预测分数是一个 pandas DataFrame。它的索引是 <datetime(pd.Timestamp), instrument(str)>,并且它必须包含一个 score 列。

预测样本如下所示。

datetime instrument score 2019-01-04 SH600000 -0.505488 2019-01-04 SZ002531 -0.320391 2019-01-04 SZ000999 0.583808 2019-01-04 SZ300569 0.819628 2019-01-04 SZ001696 -0.137140 ... ... ... 2019-04-30 SZ000996 -1.027618 2019-04-30 SH603127 0.225677 2019-04-30 SH603126 0.462443 2019-04-30 SH603133 -0.302460 2019-04-30 SZ300760 -0.126383 预测模型模块可以进行预测,请参阅预测模型:模型训练与预测。

通常,预测分数是模型的输出。但有些模型是从不同尺度的标签中学习的。因此,预测分数的尺度可能与您的预期(例如,成分股的收益)不同。

Qlib 没有添加一个步骤来将预测分数统一缩放到一个尺度,原因如下。

-

因为并非每个交易策略都关心尺度(例如,

TopkDropoutStrategy只关心排名)。因此,策略有责任重新缩放预测分数(例如,一些基于投资组合优化的策略可能需要有意义的尺度)。 -

模型可以灵活地定义目标、损失和数据处理。因此,我们不认为仅仅基于模型的输出来直接重新缩放它有一个万能的方法。如果您想将其重新缩放到一些有意义的值(例如,股票收益),一个直观的解决方案是为您模型的近期输出和您近期的目标值创建一个回归模型。

运行回测

在大多数情况下,用户可以使用

backtest_daily回测他们的投资组合管理策略。Pythonfrom pprint import pprint import qlib import pandas as pd from qlib.utils.time import Freq from qlib.utils import flatten_dict from qlib.contrib.evaluate import backtest_daily from qlib.contrib.evaluate import risk_analysis from qlib.contrib.strategy import TopkDropoutStrategy # init qlib qlib.init(provider_uri=<qlib data dir>) CSI300_BENCH = "SH000300" STRATEGY_CONFIG = { "topk": 50, "n_drop": 5, # pred_score, pd.Series "signal": pred_score, } strategy_obj = TopkDropoutStrategy(**STRATEGY_CONFIG) report_normal, positions_normal = backtest_daily( start_time="2017-01-01", end_time="2020-08-01", strategy=strategy_obj) analysis = dict() # default frequency will be daily (i.e. "day") analysis["excess_return_without_cost"] = risk_analysis(report_normal["return"] - report_normal["bench"]) analysis["excess_return_with_cost"] = risk_analysis(report_normal["return"] - report_normal["bench"] - report_normal["cost"]) analysis_df = pd.concat(analysis) # type: pd.DataFrame pprint(analysis_df)如果用户希望以更详细的方式控制他们的策略(例如,用户有一个更高级的执行器版本),用户可以遵循这个示例。

Pythonfrom pprint import pprint import qlib import pandas as pd from qlib.utils.time import Freq from qlib.utils import flatten_dict from qlib.backtest import backtest, executor from qlib.contrib.evaluate import risk_analysis from qlib.contrib.strategy import TopkDropoutStrategy # init qlib qlib.init(provider_uri=<qlib data dir>) CSI300_BENCH = "SH000300" # Benchmark 用于计算您的策略的超额收益。 # 它的数据格式将像**一个普通成分股**。 # 例如,您可以使用以下代码查询其数据 # `D.features(["SH000300"], ["$close"], start_time='2010-01-01', end_time='2017-12-31', freq='day')` # 它与参数 `market` 不同,`market` 表示一个股票池(例如,**一组**股票,如 csi300) # 例如,您可以使用以下代码查询股票市场的所有数据。 # `D.features(D.instruments(market='csi300'), ["$close"], start_time='2010-01-01', end_time='2017-12-31', freq='day')` FREQ = "day" STRATEGY_CONFIG = { "topk": 50, "n_drop": 5, # pred_score, pd.Series "signal": pred_score, } EXECUTOR_CONFIG = { "time_per_step": "day", "generate_portfolio_metrics": True, } backtest_config = { "start_time": "2017-01-01", "end_time": "2020-08-01", "account": 100000000, "benchmark": CSI300_BENCH, "exchange_kwargs": { "freq": FREQ, "limit_threshold": 0.095, "deal_price": "close", "open_cost": 0.0005, "close_cost": 0.0015, "min_cost": 5, }, } # 策略对象 strategy_obj = TopkDropoutStrategy(**STRATEGY_CONFIG) # 执行器对象 executor_obj = executor.SimulatorExecutor(**EXECUTOR_CONFIG) # 回测 portfolio_metric_dict, indicator_dict = backtest(executor=executor_obj, strategy=strategy_obj, **backtest_config) analysis_freq = "{0}{1}".format(*Freq.parse(FREQ)) # 回测信息 report_normal, positions_normal = portfolio_metric_dict.get(analysis_freq) # 分析 analysis = dict() analysis["excess_return_without_cost"] = risk_analysis( report_normal["return"] - report_normal["bench"], freq=analysis_freq) analysis["excess_return_with_cost"] = risk_analysis( report_normal["return"] - report_normal["bench"] - report_normal["cost"], freq=analysis_freq) analysis_df = pd.concat(analysis) # type: pd.DataFrame # 记录指标 analysis_dict = flatten_dict(analysis_df["risk"].unstack().T.to_dict()) # 打印结果 pprint(f"以下是基准收益({analysis_freq})的分析结果。") pprint(risk_analysis(report_normal["bench"], freq=analysis_freq)) pprint(f"以下是无成本超额收益({analysis_freq})的分析结果。") pprint(analysis["excess_return_without_cost"]) pprint(f"以下是有成本超额收益({analysis_freq})的分析结果。") pprint(analysis["excess_return_with_cost"])结果

回测结果采用以下形式:

risk

excess_return_without_cost mean 0.000605

std 0.005481

annualized_return 0.152373

information_ratio 1.751319

max_drawdown -0.059055

excess_return_with_cost mean 0.000410

std 0.005478

annualized_return 0.103265

information_ratio 1.187411

max_drawdown -0.075024

-

excess_return_without_cost-

mean:无成本的CAR(累计异常收益)的平均值。 -

std:无成本的CAR(累计异常收益)的标准差。 -

annualized_return:无成本的CAR(累计异常收益)的年化收益率。 -

information_ratio:无成本的信息比率。请参阅信息比率 – IR。 -

max_drawdown:无成本的CAR(累计异常收益)的最大回撤。请参阅最大回撤 (MDD)。

-

-

excess_return_with_cost-

mean:有成本的CAR(累计异常收益)系列的平均值。 -

std:有成本的CAR(累计异常收益)系列的标准差。 -

annualized_return:有成本的CAR(累计异常收益)的年化收益率。 -

information_ratio:有成本的信息比率。请参阅信息比率 – IR。 -

max_drawdown:有成本的CAR(累计异常收益)的最大回撤。请参阅最大回撤 (MDD)。

-

参考

要了解更多关于预测模型输出的预测分数

pred_score的信息,请参阅预测模型:模型训练与预测。活动:0 -

-

高频交易中的嵌套决策执行框架设计

简介

日内交易(例如投资组合管理)和盘中交易(例如订单执行)是量化投资中的两个热门话题,通常是分开研究的。

为了获得日内交易和盘中交易的联合交易表现,它们必须相互作用并联合运行回测。为了支持多个级别的联合回测策略,需要一个相应的框架。目前公开可用的高频交易框架都没有考虑多级别的联合交易,这使得上述回测不准确。

除了回测,不同级别的策略优化也不是独立的,它们会相互影响。例如,最佳投资组合管理策略可能会随着订单执行性能的变化而改变(例如,当我们改进订单执行策略时,换手率更高的投资组合可能会成为更好的选择)。为了实现整体良好的性能,有必要考虑不同级别策略之间的相互作用。

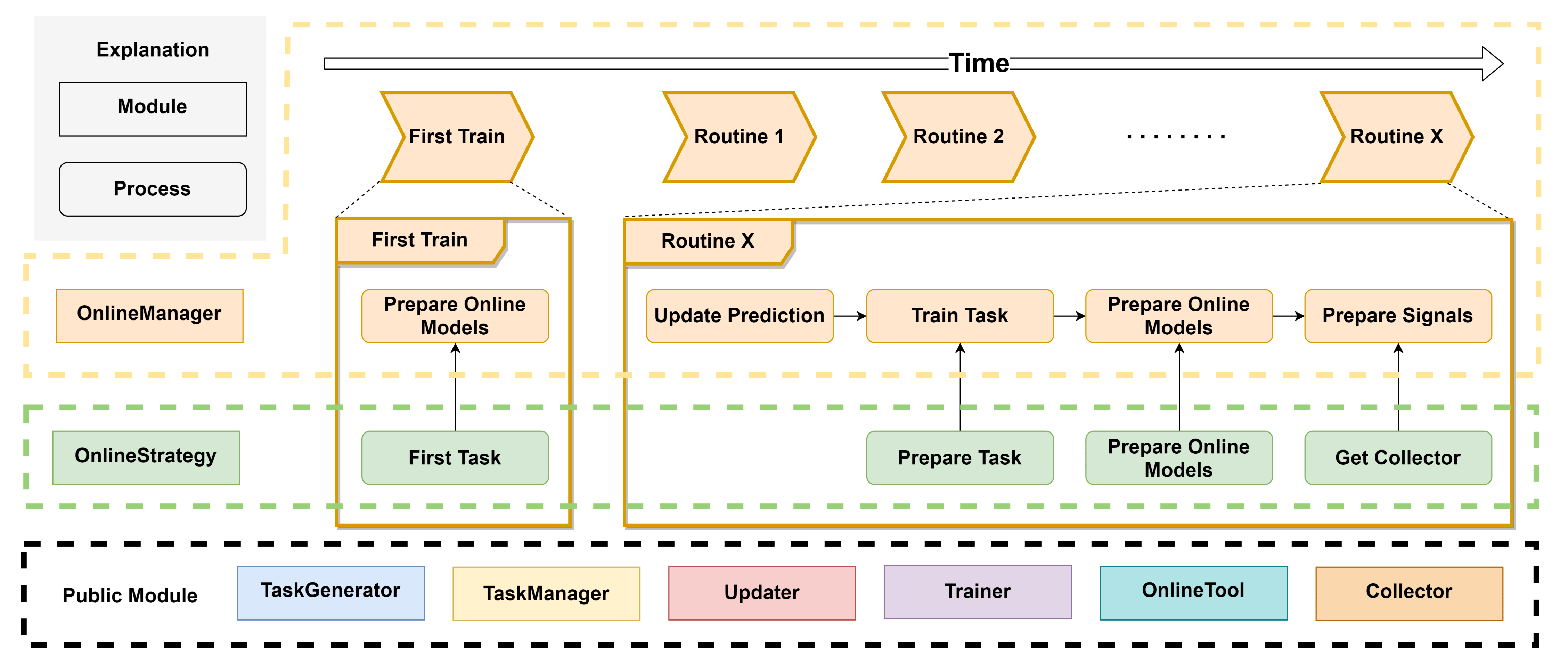

因此,为了解决上述各种问题,构建一个新的多级别交易框架变得很有必要。为此,我们设计了一个考虑策略相互作用的嵌套决策执行框架。

该框架的设计如上图中间的黄色部分所示。每个级别都由

Trading Agent和Execution Env组成。Trading Agent有其自己的数据处理模块 (Information Extractor)、预测模块 (Forecast Model) 和决策生成器 (Decision Generator)。交易算法根据Forecast Module输出的预测信号,通过Decision Generator生成决策,生成的决策被传递给Execution Env,后者返回执行结果。交易算法的频率、决策内容和执行环境可以由用户定制(例如,盘中交易、日频交易、周频交易),并且执行环境内部可以嵌套更细粒度的交易算法和执行环境(即图中的子工作流,例如,日频订单可以通过在日内拆分订单转换为更细粒度的决策)。嵌套决策执行框架的灵活性使用户可以轻松探索不同级别交易策略组合的效果,并打破不同级别交易算法之间的优化壁垒。

嵌套决策执行框架的优化可以在

QlibRL的支持下实现。要了解如何使用QlibRL的更多信息,请访问 API 参考:RL API。

示例

高频嵌套决策执行框架的示例可以在这里找到。

此外,除了上述示例,以下是一些关于 Qlib 中高频交易的其他相关工作。

-

使用高频数据进行预测

-

从非固定频率的高频数据中提取特征的示例。

-

一篇关于高频交易的论文。

活动:0 -

-

元控制器:元任务、元数据集与元模型

简介

元控制器为预测模型提供指导,其目的是学习一系列预测任务中的规律模式,并利用这些学习到的模式来指导未来的预测任务。用户可以基于 Meta Controller 模块实现自己的元模型实例。

元任务

元任务实例是元学习框架中的基本元素。它保存可供元模型使用的数据。多个元任务实例可能共享同一个

Data Handler,由元数据集控制。用户应该使用prepare_task_data()来获取可以直接输入元模型的数据。class qlib.model.meta.task.MetaTask(task: dict, meta_info: object, mode: str = 'full')

一个独立的元任务,一个元数据集包含一个元任务列表。它作为 MetaDatasetDS 中的一个组件。

数据处理方式不同:

-

训练和测试之间的处理输入可能不同。

-

训练时,训练任务中的

X、y、X_test、y_test是必需的(# PROC_MODE_FULL #),但在测试任务中不是必需的(# PROC_MODE_TEST #)。 -

当元模型可以转移到其他数据集时,只有

meta_info是必需的(# PROC_MODE_TRANSFER #)。

__init__(task: dict, meta_info: object, mode: str = 'full')

__init__ 函数负责:

-

存储任务。

-

存储原始输入数据。

-

处理元数据的输入数据。

参数:

-

task(dict) – 待元模型增强的任务。 -

meta_info(object) – 元模型的输入。

get_meta_input() -> object

返回处理过的 meta_info。

元数据集

元数据集控制元信息生成过程。它负责为训练元模型提供数据。用户应该使用

prepare_tasks来检索元任务实例列表。class qlib.model.meta.dataset.MetaTaskDataset(segments: Dict[str, Tuple] | float, *args, **kwargs)

一个在元级别获取数据的数据集。

元数据集负责:

-

输入任务(例如 Qlib 任务)并准备元任务。

-

元任务比普通任务包含更多信息(例如,元模型的输入数据)。

-

所学到的模式可以转移到其他元数据集。应支持以下情况:

-

在元数据集 A 上训练的元模型,然后应用于元数据集 B。

-

元数据集 A 和 B 之间共享一些模式,因此当元模型应用于元数据集 B 时,会使用元数据集 A 上的元输入。

-

__init__(segments: Dict[str, Tuple] | float, *args, **kwargs)

元数据集在初始化时维护一个元任务列表。

-

segments表示划分数据的方式。 -

MetaTaskDataset的__init__函数的职责是初始化任务。

prepare_tasks(segments: List[str] | str, *args, **kwargs) -> List[MetaTask]

准备每个元任务中的数据,并为训练做好准备。

以下代码示例展示了如何从元数据集中检索元任务列表:

Python# get the train segment and the test segment, both of them are lists train_meta_tasks, test_meta_tasks = meta_dataset.prepare_tasks(["train", "test"])参数:

-

segments (Union[List[Text], Tuple[Text], Text]) – 用于选择数据的信息。

返回:

一个元任务列表,其中包含用于训练元模型的每个元任务的已准备数据。对于多个分段 [seg1, seg2, ..., segN],返回的列表将是 [[seg1 中的任务], [seg2 中的任务], ..., [segN 中的任务]]。每个任务都是一个元任务。

返回类型:list

元模型

通用元模型

元模型实例是控制工作流的部分。元模型的用法包括:

-

用户使用

fit函数训练他们的元模型。 -

元模型实例通过

inference函数提供有用信息来指导工作流。

class qlib.model.meta.model.MetaModel

指导模型学习的元模型。

“指导”一词可以根据模型学习的阶段分为两类:

-

学习任务的定义:请参阅

MetaTaskModel的文档。 -

控制模型的学习过程:请参阅

MetaGuideModel的文档。

abstract fit(*args, **kwargs)

元模型的训练过程。

abstract inference(*args, **kwargs) -> object

元模型的推理过程。

返回:

一些用于指导模型学习的信息。

返回类型:object

元任务模型

此类元模型可能直接与任务定义交互。因此,元任务模型是它们要继承的类。它们通过修改基本任务定义来指导基本任务。

prepare_tasks函数可用于获取修改后的基本任务定义。class qlib.model.meta.model.MetaTaskModel

此类元模型处理基本任务定义。元模型在训练后为训练新的基本预测模型创建任务。prepare_tasks 直接修改任务定义。

fit(meta_dataset: MetaTaskDataset)

MetaTaskModel 应该从 meta_dataset 中获取已准备好的 MetaTask。然后,它将从元任务中学习知识。

inference(meta_dataset: MetaTaskDataset) -> List[dict]

MetaTaskModel 将对 meta_dataset 进行推理。MetaTaskModel 应该从 meta_dataset 中获取已准备好的 MetaTask。然后,它将创建带有 Qlib 格式的修改后的任务,这些任务可以由 Qlib 训练器执行。

返回:

一个修改后的任务定义列表。

返回类型:List[dict]

元指导模型

此类元模型参与基本预测模型的训练过程。元模型可以在基本预测模型的训练过程中指导它们,以提高其性能。

class qlib.model.meta.model.MetaGuideModel

此类元模型旨在指导基本模型的训练过程。元模型在基本预测模型的训练过程中与它们交互。

abstract fit(*args, **kwargs)

元模型的训练过程。

abstract inference(*args, **kwargs)

元模型的推理过程。

返回:

一些用于指导模型学习的信息。

返回类型:object

示例

Qlib 提供了一个名为 DDG-DA 的 Meta Model 模块实现,该模块可适应市场动态。

DDG-DA 包括四个步骤:

-

计算元信息并将其封装到

Meta Task实例中。所有元任务构成一个Meta Dataset实例。 -

基于元数据集的训练数据训练

DDG-DA。 -

对

DDG-DA进行推理以获取指导信息。 -

将指导信息应用于预测模型以提高其性能。

上述示例可以在

examples/benchmarks_dynamic/DDG-DA/workflow.py中找到。活动:0 -

-

Qlib 记录器:实验管理

简介

Qlib 包含一个名为 QlibRecorder 的实验管理系统,旨在帮助用户高效地处理实验和分析结果。

该系统由三个组件组成:

-

ExperimentManager:一个管理实验的类。

-

Experiment:一个实验类,每个实例负责一个单独的实验。

-

Recorder:一个记录器类,每个实例负责一个单独的运行。

以下是该系统结构的总体视图:

ExperimentManager - Experiment 1 - Recorder 1 - Recorder 2 - ... - Experiment 2 - Recorder 1 - Recorder 2 - ... - ...这个实验管理系统定义了一组接口并提供了一个具体的实现:MLflowExpManager,它基于机器学习平台 MLFlow。

如果用户将

ExpManager的实现设置为MLflowExpManager,他们可以使用mlflow ui命令来可视化和检查实验结果。有关更多信息,请参阅此处的相关文档。

Qlib 记录器

QlibRecorder 为用户提供了一个高级 API 来使用实验管理系统。接口被封装在 Qlib 中的变量

R中,用户可以直接使用R与系统交互。以下命令展示了如何在 Python 中导入R:Pythonfrom qlib.workflow import RQlibRecorder 包括几个用于在工作流中管理实验和记录器的常用 API。有关更多可用 API,请参阅下面关于实验管理器、实验和记录器的部分。

以下是 QlibRecorder 的可用接口:

class qlib.workflow.__init__.QlibRecorder(exp_manager: ExpManager)

一个帮助管理实验的全局系统。

__init__(exp_manager: ExpManager)start(*, experiment_id: str | None = None, experiment_name: str | None = None, recorder_id: str | None = None, recorder_name: str | None = None, uri: str | None = None, resume: bool = False)

启动实验的方法。此方法只能在 Python 的 with 语句中调用。以下是示例代码:

Python# start new experiment and recorder with R.start(experiment_name='test', recorder_name='recorder_1'): model.fit(dataset) R.log... ... # further operations # resume previous experiment and recorder with R.start(experiment_name='test', recorder_name='recorder_1', resume=True): # if users want to resume recorder, they have to specify the exact same name for experiment and recorder. ... # further operations参数:

-

experiment_id(str) – 要启动的实验 ID。 -

experiment_name(str) – 要启动的实验名称。 -

recorder_id(str) – 要在实验下启动的记录器 ID。 -

recorder_name(str) – 要在实验下启动的记录器名称。 -

uri(str) – 实验的跟踪 URI,所有工件/指标等都将存储在此处。默认 URI 在qlib.config中设置。请注意,此uri参数不会更改配置文件中定义的 URI。因此,下次用户在同一实验中调用此函数时,他们也必须指定相同值的此参数。否则,可能会出现不一致的 URI。 -

resume(bool) – 是否恢复给定实验下指定名称的记录器。

start_exp(*, experiment_id=None, experiment_name=None, recorder_id=None, recorder_name=None, uri=None, resume=False)

启动实验的底层方法。使用此方法时,应手动结束实验,并且记录器的状态可能无法正确处理。以下是示例代码:

PythonR.start_exp(experiment_name='test', recorder_name='recorder_1') ... # further operations R.end_exp('FINISHED') or R.end_exp(Recorder.STATUS_S)参数:

-

experiment_id(str) – 要启动的实验 ID。 -

experiment_name(str) – 要启动的实验名称。 -

recorder_id(str) – 要在实验下启动的记录器 ID。 -

recorder_name(str) – 要在实验下启动的记录器名称。 -

uri(str) – 实验的跟踪 URI,所有工件/指标等都将存储在此处。默认 URI 在qlib.config中设置。 -

resume (bool) – 是否恢复给定实验下指定名称的记录器。

返回类型:

一个已启动的实验实例。

end_exp(recorder_status='FINISHED')

手动结束实验的方法。它将结束当前活动的实验及其活动的记录器,并指定 status 类型。以下是此方法的示例代码:

PythonR.start_exp(experiment_name='test') ... # further operations R.end_exp('FINISHED') or R.end_exp(Recorder.STATUS_S)参数:

-

status(str) – 记录器的状态,可以是 SCHEDULED、RUNNING、FINISHED、FAILED。

search_records(experiment_ids, **kwargs)

获取符合搜索条件的记录的 pandas DataFrame。

此函数的参数不是固定的,它们会因 Qlib 中 ExpManager 的不同实现而异。Qlib 现在提供了 ExpManager 的 mlflow 实现,以下是使用 MLflowExpManager 的此方法的示例代码:

PythonR.log_metrics(m=2.50, step=0) records = R.search_records([experiment_id], order_by=["metrics.m DESC"])参数:

-

experiment_ids(list) – 实验 ID 列表。 -

filter_string(str) – 筛选查询字符串,默认为搜索所有运行。 -

run_view_type(int) – 枚举值 ACTIVE_ONLY、DELETED_ONLY 或 ALL 之一(例如在mlflow.entities.ViewType中)。 -

max_results(int) – 放入 DataFrame 的最大运行次数。 -

order_by (list) – 按列排序的列表(例如,“metrics.rmse”)。

返回:

一个 pandas.DataFrame 记录,其中每个指标、参数和标签分别扩展为名为 metrics.*、params.* 和 tags.* 的列。对于没有特定指标、参数或标签的记录,它们的值将分别为 (NumPy) Nan、None 或 None。

list_experiments()

列出所有现有实验的方法(已删除的除外)。

Pythonexps = R.list_experiments()返回类型:

一个存储的实验信息字典(名称 -> 实验)。

list_recorders(experiment_id=None, experiment_name=None)

列出具有给定 ID 或名称的实验的所有记录器的方法。

如果用户没有提供实验的 ID 或名称,此方法将尝试检索默认实验并列出默认实验的所有记录器。如果默认实验不存在,该方法将首先创建默认实验,然后在其下创建一个新的记录器。(有关默认实验的更多信息可以在此处找到)。

以下是示例代码:

Pythonrecorders = R.list_recorders(experiment_name='test')参数:

-

experiment_id(str) – 实验的 ID。 -

experiment_name (str) – 实验的名称。

返回类型:

一个存储的记录器信息字典(ID -> 记录器)。

get_exp(*, experiment_id=None, experiment_name=None, create: bool = True, start: bool = False) -> Experiment

使用给定 ID 或名称检索实验的方法。一旦将 create 参数设置为 True,如果找不到有效的实验,此方法将为您创建一个。否则,它只会检索特定的实验或引发错误。

如果 'create' 为 True:

-

如果活动实验存在:

-

未指定 ID 或名称,返回活动实验。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则使用给定 ID 或名称创建一个新实验。

-

-

如果活动实验不存在:

-

未指定 ID 或名称,创建默认实验,并将该实验设置为活动状态。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则使用给定名称或默认实验创建一个新实验。

否则,如果 'create' 为 False:

-

-

如果活动实验存在:

-

未指定 ID 或名称,返回活动实验。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则引发错误。

-

-

如果活动实验不存在:

-

未指定 ID 或名称。如果默认实验存在,则返回它,否则引发错误。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则引发错误。

以下是一些用例:

-

Python# Case 1 with R.start('test'): exp = R.get_exp() recorders = exp.list_recorders() # Case 2 with R.start('test'): exp = R.get_exp(experiment_name='test1') # Case 3 exp = R.get_exp() -> a default experiment. # Case 4 exp = R.get_exp(experiment_name='test') # Case 5 exp = R.get_exp(create=False) -> the default experiment if exists.参数:

-

experiment_id(str) – 实验的 ID。 -

experiment_name(str) – 实验的名称。 -

create(boolean) – 一个参数,用于确定如果实验之前未创建,该方法是否会自动根据用户的规范创建一个新实验。 -

start (bool) – 当 start 为 True 时,如果实验尚未启动(未激活),它将启动。它专为 R.log_params 自动启动实验而设计。

返回类型:

具有给定 ID 或名称的实验实例。

delete_exp(experiment_id=None, experiment_name=None)

删除具有给定 ID 或名称的实验的方法。必须至少提供 ID 或名称中的一个,否则会发生错误。

以下是示例代码:

PythonR.delete_exp(experiment_name='test')参数:

-

experiment_id(str) – 实验的 ID。 -

experiment_name(str) – 实验的名称。

get_uri()

检索当前实验管理器的 URI 的方法。

以下是示例代码:

Pythonuri = R.get_uri()返回类型:

当前实验管理器的 URI。

set_uri(uri: str | None)

重置当前实验管理器的默认 URI 的方法。

注意:

当 URI 指的是文件路径时,请使用绝对路径,而不是像 “~/mlruns/” 这样的字符串。后端不支持这样的字符串。

uri_context(uri: str)

暂时将 exp_manager 的 default_uri 设置为 uri。

注意:

-

请参阅 set_uri 中的注意。

参数:

-

uri(Text) – 临时 URI。

get_recorder(*, recorder_id=None, recorder_name=None, experiment_id=None, experiment_name=None) -> Recorder

检索记录器的方法。

-

如果活动记录器存在:

-

未指定 ID 或名称,返回活动记录器。

-

如果指定了 ID 或名称,则返回指定的记录器。

-

-

如果活动记录器不存在:

-

未指定 ID 或名称,引发错误。

-

如果指定了 ID 或名称,则必须提供相应的 experiment_name,返回指定的记录器。否则,引发错误。

记录器可用于进一步处理,例如 save_object、load_object、log_params、log_metrics 等。

以下是一些用例:

-

Python# Case 1 with R.start(experiment_name='test'): recorder = R.get_recorder() # Case 2 with R.start(experiment_name='test'): recorder = R.get_recorder(recorder_id='2e7a4efd66574fa49039e00ffaefa99d') # Case 3 recorder = R.get_recorder() -> Error # Case 4 recorder = R.get_recorder(recorder_id='2e7a4efd66574fa49039e00ffaefa99d') -> Error # Case 5 recorder = R.get_recorder(recorder_id='2e7a4efd66574fa49039e00ffaefa99d', experiment_name='test')用户可能会关心的一些问题:

-

问:如果多个记录器符合查询条件(例如,使用

experiment_name查询),它将返回哪个记录器? -

答:如果使用 mlflow 后端,将返回 start_time 最晚的记录器。因为 MLflow 的 search_runs 函数保证了这一点。

参数:

-

recorder_id(str) – 记录器的 ID。 -

recorder_name(str) – 记录器的名称。 -

experiment_name (str) – 实验的名称。

返回类型:

一个记录器实例。

delete_recorder(recorder_id=None, recorder_name=None)

删除具有给定 ID 或名称的记录器的方法。必须至少提供 ID 或名称中的一个,否则会发生错误。

以下是示例代码:

PythonR.delete_recorder(recorder_id='2e7a4efd66574fa49039e00ffaefa99d')参数:

-

recorder_id(str) – 实验的 ID。 -

recorder_name(str) – 实验的名称。

save_objects(local_path=None, artifact_path=None, **kwargs: Dict[str, Any])

将对象作为工件保存在实验中到 URI 的方法。它支持从本地文件/目录保存,或直接保存对象。用户可以使用有效的 Python 关键字参数来指定要保存的对象及其名称(名称:值)。

总而言之,此 API 旨在将对象保存到实验管理后端路径,

-

Qlib 提供两种方法来指定对象:

-

通过

**kwargs直接传入对象(例如R.save_objects(trained_model=model))。 -

传入对象的本地路径,即

local_path参数。

-

-

artifact_path 表示实验管理后端路径。

如果活动记录器存在:它将通过活动记录器保存对象。

如果活动记录器不存在:系统将创建一个默认实验和一个新的记录器,并在其下保存对象。

注意

如果想使用特定的记录器保存对象,建议先通过 get_recorder API 获取特定的记录器,然后使用该记录器保存对象。支持的参数与此方法相同。

以下是一些用例:

Python# Case 1 with R.start(experiment_name='test'): pred = model.predict(dataset) R.save_objects(**{"pred.pkl": pred}, artifact_path='prediction') rid = R.get_recorder().id ... R.get_recorder(recorder_id=rid).load_object("prediction/pred.pkl") # after saving objects, you can load the previous object with this api # Case 2 with R.start(experiment_name='test'): R.save_objects(local_path='results/pred.pkl', artifact_path="prediction") rid = R.get_recorder().id ... R.get_recorder(recorder_id=rid).load_object("prediction/pred.pkl") # after saving objects, you can load the previous object with this api参数:

-

local_path(str) – 如果提供,则将文件或目录保存到工件 URI。 -

artifact_path(str) – 要存储在 URI 中的工件的相对路径。 -

**kwargs(Dict[Text, Any]) – 要保存的对象。例如,{"pred.pkl": pred}。

load_object(name: str)

从 URI 中实验的工件中加载对象的方法。

log_params(**kwargs)

在实验期间记录参数的方法。除了使用 R,用户还可以在使用 get_recorder API 获取特定记录器后,记录到该记录器。

如果活动记录器存在:它将通过活动记录器记录参数。

如果活动记录器不存在:系统将创建一个默认实验和一个新的记录器,并在其下记录参数。

以下是一些用例:

Python# Case 1 with R.start('test'): R.log_params(learning_rate=0.01) # Case 2 R.log_params(learning_rate=0.01)参数:

-

argument(keyword) –name1=value1, name2=value2, ...。

log_metrics(step=None, **kwargs)

在实验期间记录指标的方法。除了使用 R,用户还可以在使用 get_recorder API 获取特定记录器后,记录到该记录器。

如果活动记录器存在:它将通过活动记录器记录指标。

如果活动记录器不存在:系统将创建一个默认实验和一个新的记录器,并在其下记录指标。

以下是一些用例:

Python# Case 1 with R.start('test'): R.log_metrics(train_loss=0.33, step=1) # Case 2 R.log_metrics(train_loss=0.33, step=1)参数:

-

argument(keyword) –name1=value1, name2=value2, ...。

log_artifact(local_path: str, artifact_path: str | None = None)

将本地文件或目录作为工件记录到当前活动的运行中。

如果活动记录器存在:它将通过活动记录器设置标签。

如果活动记录器不存在:系统将创建一个默认实验和一个新的记录器,并在其下设置标签。

参数:

-

local_path(str) – 要写入的文件路径。 -

artifact_path(Optional[str]) – 如果提供,则为要写入的artifact_uri中的目录。

download_artifact(path: str, dst_path: str | None = None) -> str

将工件文件或目录从运行下载到本地目录(如果适用),并返回其本地路径。

参数:

-

path(str) – 目标工件的相对源路径。 -

dst_path (Optional[str]) – 用于下载指定工件的本地文件系统目标目录的绝对路径。此目录必须已存在。如果未指定,工件将下载到本地文件系统上一个新创建的、具有唯一名称的目录中。

返回:

目标工件的本地路径。

返回类型:str

set_tags(**kwargs)

为记录器设置标签的方法。除了使用 R,用户还可以在使用 get_recorder API 获取特定记录器后,为该记录器设置标签。

如果活动记录器存在:它将通过活动记录器设置标签。

如果活动记录器不存在:系统将创建一个默认实验和一个新的记录器,并在其下设置标签。

以下是一些用例:

Python# Case 1 with R.start('test'): R.set_tags(release_version="2.2.0") # Case 2 R.set_tags(release_version="2.2.0")参数:

-

argument(keyword) –name1=value1, name2=value2, ...。

实验管理器 (Experiment Manager)

Qlib 中的

ExpManager模块负责管理不同的实验。ExpManager的大多数 API 与 QlibRecorder 类似,其中最重要的 API 是get_exp方法。用户可以直接参考上面的文档,获取有关如何使用get_exp方法的一些详细信息。class qlib.workflow.expm.ExpManager(uri: str, default_exp_name: str | None)

这是用于管理实验的 ExpManager 类。其 API 设计类似于 mlflow。(链接:https://mlflow.org/docs/latest/python_api/mlflow.html)

ExpManager 预期是一个单例(顺便说一下,我们可以有多个 URI 不同的 Experiment。用户可以从不同的 URI 获取不同的实验,然后比较它们的记录)。全局配置(即 C)也是一个单例。

因此,我们试图将它们对齐。它们共享同一个变量,称为 default uri。有关变量共享的详细信息,请参阅 ExpManager.default_uri。

当用户启动一个实验时,他们可能希望将 URI 设置为特定的 URI(在此期间它将覆盖 default uri),然后取消设置该特定 URI 并回退到默认 URI。ExpManager._active_exp_uri 就是那个特定 URI。

__init__(uri: str, default_exp_name: str | None)start_exp(*, experiment_id: str | None = None, experiment_name: str | None = None, recorder_id: str | None = None, recorder_name: str | None = None, uri: str | None = None, resume: bool = False, **kwargs) -> Experiment

启动一个实验。此方法首先获取或创建一个实验,然后将其设置为活动状态。

_active_exp_uri 的维护包含在 start_exp 中,其余实现应包含在子类中的 _end_exp 中。

参数:

-

experiment_id(str) – 活动实验的 ID。 -

experiment_name(str) – 活动实验的名称。 -

recorder_id(str) – 要启动的记录器 ID。 -

recorder_name(str) – 要启动的记录器名称。 -

uri(str) – 当前跟踪 URI。 -

resume (boolean) – 是否恢复实验和记录器。

返回类型:

一个活动实验。

end_exp(recorder_status: str = 'SCHEDULED', **kwargs)

结束一个活动实验。

_active_exp_uri 的维护包含在 end_exp 中,其余实现应包含在子类中的 _end_exp 中。

参数:

-

experiment_name(str) – 活动实验的名称。 -

recorder_status(str) – 实验中活动记录器的状态。

create_exp(experiment_name: str | None = None)

创建一个实验。

参数:

-

experiment_name (str) – 实验名称,必须是唯一的。

返回类型:

一个实验对象。

引发:

ExpAlreadyExistError –

search_records(experiment_ids=None, **kwargs)

获取符合实验搜索条件的记录的 pandas DataFrame。输入是用户想要应用的搜索条件。

返回:

一个 pandas.DataFrame 记录,其中每个指标、参数和标签分别扩展为名为 metrics.*、params.* 和 tags.* 的列。对于没有特定指标、参数或标签的记录,它们的值将分别为 (NumPy) Nan、None 或 None。

get_exp(*, experiment_id=None, experiment_name=None, create: bool = True, start: bool = False)

检索一个实验。此方法包括获取一个活动实验,以及获取或创建一个特定实验。

当用户指定实验 ID 和名称时,该方法将尝试返回特定实验。当用户未提供记录器 ID 或名称时,该方法将尝试返回当前活动实验。create 参数决定了如果实验之前未创建,该方法是否会自动根据用户的规范创建一个新实验。

如果 create 为 True:

-

如果活动实验存在:

-

未指定 ID 或名称,返回活动实验。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则使用给定 ID 或名称创建一个新实验。如果

start设置为True,则将实验设置为活动状态。

-

-

如果活动实验不存在:

-

未指定 ID 或名称,创建默认实验。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则使用给定 ID 或名称创建一个新实验。如果 start 设置为 True,则将实验设置为活动状态。

否则,如果 create 为 False:

-

-

如果活动实验存在:

-

未指定 ID 或名称,返回活动实验。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则引发错误。

-

-

如果活动实验不存在:

-

未指定 ID 或名称。如果默认实验存在,则返回它,否则引发错误。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则引发错误。

参数:

-

-

experiment_id(str) – 要返回的实验 ID。 -

experiment_name(str) – 要返回的实验名称。 -

create(boolean) – 如果实验之前未创建,则创建它。 -

start (boolean) – 如果创建了新实验,则启动它。

返回类型:

一个实验对象。

delete_exp(experiment_id=None, experiment_name=None)

删除一个实验。

参数:

-

experiment_id(str) – 实验 ID。 -

experiment_name(str) – 实验名称。

property default_uri

从 qlib.config.C 获取默认跟踪 URI。

property uri

获取默认跟踪 URI 或当前 URI。

返回类型:

跟踪 URI 字符串。

list_experiments()

列出所有现有实验。

返回类型:

一个存储的实验信息字典(名称 -> 实验)。

对于 create_exp、delete_exp 等其他接口,请参阅实验管理器 API。

实验 (Experiment)

Experiment类只负责一个单独的实验,它将处理与实验相关的任何操作。包括start、end实验等基本方法。此外,与记录器相关的方法也可用:此类方法包括get_recorder和list_recorders。class qlib.workflow.exp.Experiment(id, name)

这是每个正在运行的实验的 Experiment 类。其 API 设计类似于 mlflow。(链接:https://mlflow.org/docs/latest/python_api/mlflow.html)

__init__(id, name)start(*, recorder_id=None, recorder_name=None, resume=False)

启动实验并将其设置为活动状态。此方法还将启动一个新的记录器。

参数:

-

recorder_id(str) – 要创建的记录器 ID。 -

recorder_name(str) – 要创建的记录器名称。 -

resume (bool) – 是否恢复第一个记录器。

返回类型:

一个活动记录器。

end(recorder_status='SCHEDULED')

结束实验。

参数:

-

recorder_status(str) – 结束时要设置的记录器状态(SCHEDULED、RUNNING、FINISHED、FAILED)。

create_recorder(recorder_name=None)

为每个实验创建一个记录器。

参数:

-

recorder_name (str) – 要创建的记录器名称。

返回类型:

一个记录器对象。

search_records(**kwargs)

获取符合实验搜索条件的记录的 pandas DataFrame。输入是用户想要应用的搜索条件。

返回:

一个 pandas.DataFrame 记录,其中每个指标、参数和标签分别扩展为名为 metrics.*、params.* 和 tags.* 的列。对于没有特定指标、参数或标签的记录,它们的值将分别为 (NumPy) Nan、None 或 None。

delete_recorder(recorder_id)

为每个实验创建一个记录器。

参数:

-

recorder_id(str) – 要删除的记录器 ID。

get_recorder(recorder_id=None, recorder_name=None, create: bool = True, start: bool = False) -> Recorder

为用户检索记录器。当用户指定记录器 ID 和名称时,该方法将尝试返回特定记录器。当用户未提供记录器 ID 或名称时,该方法将尝试返回当前活动记录器。create 参数决定了如果记录器之前未创建,该方法是否会自动根据用户的规范创建一个新记录器。

如果 create 为 True:

-

如果活动记录器存在:

-

未指定 ID 或名称,返回活动记录器。

-

如果指定了 ID 或名称,则返回指定的记录器。如果找不到此类实验,则使用给定 ID 或名称创建一个新记录器。如果

start设置为True,则将记录器设置为活动状态。

-

-

如果活动记录器不存在:

-

未指定 ID 或名称,创建一个新的记录器。

-

如果指定了 ID 或名称,则返回指定的实验。如果找不到此类实验,则使用给定 ID 或名称创建一个新记录器。如果 start 设置为 True,则将记录器设置为活动状态。

否则,如果 create 为 False:

-

-

如果活动记录器存在:

-

未指定 ID 或名称,返回活动记录器。

-

如果指定了 ID 或名称,则返回指定的记录器。如果找不到此类实验,则引发错误。

-

-

如果活动记录器不存在:

-

未指定 ID 或名称,引发错误。

-

如果指定了 ID 或名称,则返回指定的记录器。如果找不到此类实验,则引发错误。

参数:

-

-

recorder_id(str) – 要删除的记录器 ID。 -

recorder_name(str) – 要删除的记录器名称。 -

create(boolean) – 如果记录器之前未创建,则创建它。 -

start (boolean) – 如果创建了新记录器,则启动它。

返回类型:

一个记录器对象。

list_recorders(rtype: Literal['dict', 'list'] = 'dict', **flt_kwargs) -> List[Recorder] | Dict[str, Recorder]

列出此实验的所有现有记录器。在调用此方法之前,请先获取实验实例。如果用户想使用 R.list_recorders() 方法,请参阅 QlibRecorder 中的相关 API 文档。

-

flt_kwargsdict-

按条件筛选记录器,例如 list_recorders(status=Recorder.STATUS_FI)

返回:

-

-

如果

rtype== "dict":-

一个存储的记录器信息字典(ID -> 记录器)。

-

-

如果

rtype== "list":-

一个 Recorder 列表。

返回类型:

返回类型取决于 rtype。

对于 search_records、delete_recorder 等其他接口,请参阅实验 API。

Qlib 还提供了一个默认的 Experiment,当用户使用 log_metrics 或 get_exp 等 API 时,在某些情况下会创建和使用它。如果使用默认的 Experiment,在运行 Qlib 时会有相关的日志信息。用户可以在 Qlib 的配置文件中或在 Qlib 的初始化期间更改默认 Experiment 的名称,该名称设置为“Experiment”。

-

记录器 (Recorder)

Recorder类负责一个单独的记录器。它将处理一些详细的操作,例如一次运行的log_metrics、log_params。它旨在帮助用户轻松跟踪在一次运行中生成的结果和事物。以下是一些未包含在 QlibRecorder 中的重要 API:

class qlib.workflow.recorder.Recorder(experiment_id, name)

这是用于记录实验的 Recorder 类。其 API 设计类似于 mlflow。(链接:https://mlflow.org/docs/latest/python_api/mlflow.html)

记录器的状态可以是 SCHEDULED、RUNNING、FINISHED、FAILED。

__init__(experiment_id, name)save_objects(local_path=None, artifact_path=None, **kwargs)

将预测文件或模型检查点等对象保存到工件 URI。用户可以通过关键字参数(名称:值)保存对象。

请参阅 qlib.workflow:R.save_objects 的文档。

参数:

-

local_path(str) – 如果提供,则将文件或目录保存到工件 URI。 -

artifact_path=None(str) – 要存储在 URI 中的工件的相对路径。

load_object(name)

加载预测文件或模型检查点等对象。

参数:

-

name (str) – 要加载的文件名。

返回类型:

保存的对象。

start_run()

启动或恢复记录器。返回值可以用作 with 块中的上下文管理器;否则,您必须调用 end_run() 来终止当前运行。(请参阅 mlflow 中的 ActiveRun 类)

返回类型:

一个活动运行对象(例如 mlflow.ActiveRun 对象)。

end_run()

结束一个活动记录器。

log_params(**kwargs)

为当前运行记录一批参数。

参数:

-

arguments(keyword) – 要记录为参数的键值对。

log_metrics(step=None, **kwargs)

为当前运行记录多个指标。

参数:

-

arguments(keyword) – 要记录为指标的键值对。

log_artifact(local_path: str, artifact_path: str | None = None)

将本地文件或目录作为工件记录到当前活动的运行中。

参数:

-

local_path(str) – 要写入的文件路径。 -

artifact_path(Optional[str]) – 如果提供,则为要写入的artifact_uri中的目录。

set_tags(**kwargs)

为当前运行记录一批标签。

参数:

-

arguments(keyword) – 要记录为标签的键值对。

delete_tags(*keys)

从运行中删除一些标签。

参数:

-

keys(series of strs of the keys) – 要删除的所有标签名称。

list_artifacts(artifact_path: str | None = None)

列出记录器的所有工件。

参数:

-

artifact_path (str) – 要存储在 URI 中的工件的相对路径。

返回类型:

一个存储的工件信息列表(名称、路径等)。

download_artifact(path: str, dst_path: str | None = None) -> str

将工件文件或目录从运行下载到本地目录(如果适用),并返回其本地路径。

参数:

-

path(str) – 目标工件的相对源路径。 -

dst_path (Optional[str]) – 用于下载指定工件的本地文件系统目标目录的绝对路径。此目录必须已存在。如果未指定,工件将下载到本地文件系统上一个新创建的、具有唯一名称的目录中。

返回:

目标工件的本地路径。

返回类型:str

list_metrics()

列出记录器的所有指标。

返回类型:

一个存储的指标字典。

list_params()

列出记录器的所有参数。

返回类型:

一个存储的参数字典。

list_tags()

列出记录器的所有标签。

返回类型:

一个存储的标签字典。

对于 save_objects、load_object 等其他接口,请参阅记录器 API。

记录模板 (Record Template)

RecordTemp类是一个能够以特定格式生成实验结果(例如 IC 和回测)的类。我们提供了三种不同的Record Template类:-

SignalRecord:此类生成模型的预测结果。

-

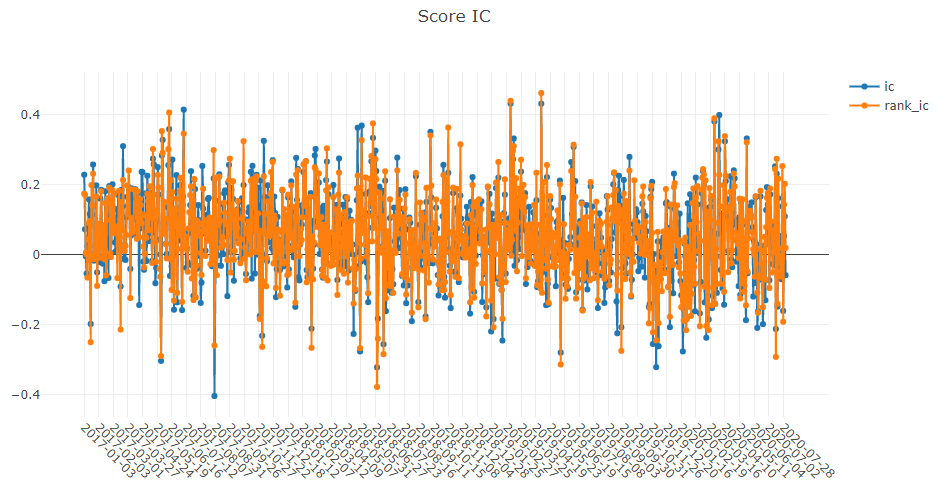

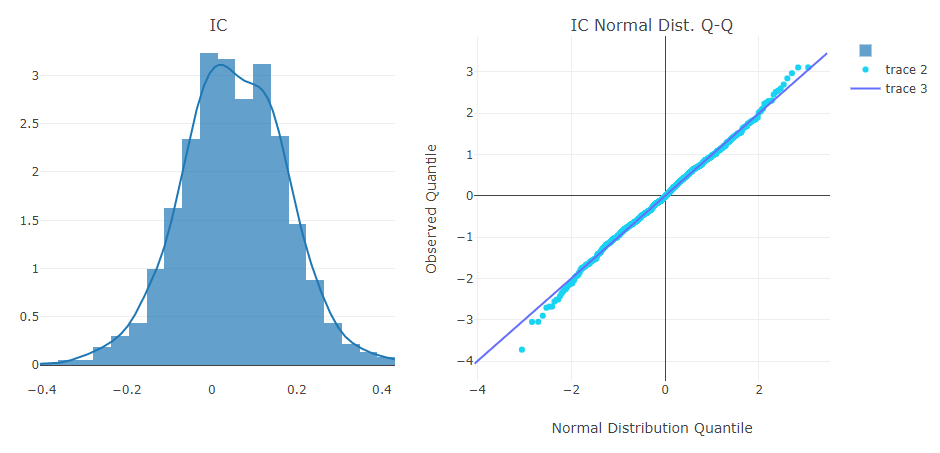

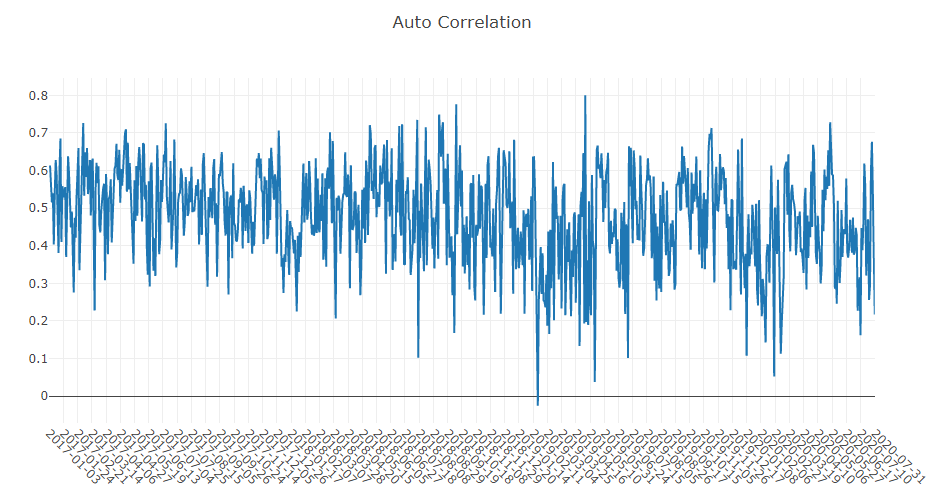

SigAnaRecord:此类生成模型的 IC、ICIR、Rank IC 和 Rank ICIR。

以下是 SigAnaRecord 中所做的一个简单示例,如果用户想用自己的预测和标签计算 IC、Rank IC、多空收益,可以参考:

Pythonfrom qlib.contrib.eva.alpha import calc_ic, calc_long_short_return ic, ric = calc_ic(pred.iloc[:, 0], label.iloc[:, 0]) long_short_r, long_avg_r = calc_long_short_return(pred.iloc[:, 0], label.iloc[:, 0]) -

PortAnaRecord:此类生成回测的结果。有关回测以及可用策略的详细信息,用户可以参阅策略和回测。

以下是 PortAnaRecord 中所做的一个简单示例,如果用户想基于自己的预测和标签进行回测,可以参考:

Pythonfrom qlib.contrib.strategy.strategy import TopkDropoutStrategy from qlib.contrib.evaluate import ( backtest as normal_backtest, risk_analysis, ) # backtest STRATEGY_CONFIG = { "topk": 50, "n_drop": 5, } BACKTEST_CONFIG = { "limit_threshold": 0.095, "account": 100000000, "benchmark": BENCHMARK, "deal_price": "close", "open_cost": 0.0005, "close_cost": 0.0015, "min_cost": 5, } strategy = TopkDropoutStrategy(**STRATEGY_CONFIG) report_normal, positions_normal = normal_backtest(pred_score, strategy=strategy, **BACKTEST_CONFIG) # analysis analysis = dict() analysis["excess_return_without_cost"] = risk_analysis(report_normal["return"] - report_normal["bench"]) analysis["excess_return_with_cost"] = risk_analysis(report_normal["return"] - report_normal["bench"] - report_normal["cost"]) analysis_df = pd.concat(analysis) # type: pd.DataFrame print(analysis_df)

有关 API 的更多信息,请参阅记录模板 API。

已知限制

Python 对象是基于 pickle 保存的,当转储对象和加载对象的环境不同时,可能会导致问题。

活动:0 -

-

分析:评估与结果分析

简介

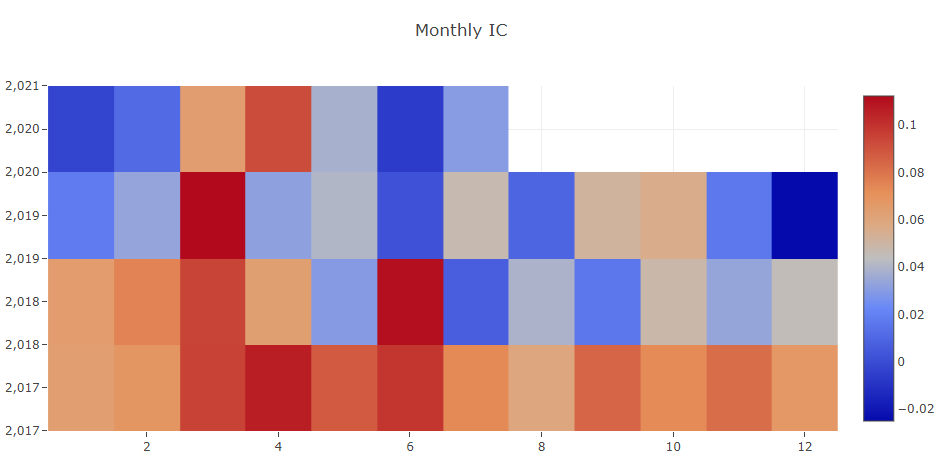

分析旨在展示日内交易的图形化报告,帮助用户直观地评估和分析投资组合。以下是一些可供查看的图表:

-

analysis_position-

report_graph -

score_ic_graph -

cumulative_return_graph -

risk_analysis_graph -

rank_label_graph

-

-

analysis_model-

model_performance_graph

-

Qlib 中所有累积利润指标(例如,收益、最大回撤)都通过求和计算。这避免了指标或图表随时间呈指数级倾斜。

图形化报告

用户可以运行以下代码来获取所有支持的报告。

Python>> import qlib.contrib.report as qcr >> print(qcr.GRAPH_NAME_LIST) ['analysis_position.report_graph', 'analysis_position.score_ic_graph', 'analysis_position.cumulative_return_graph', 'analysis_position.risk_analysis_graph', 'analysis_position.rank_label_graph', 'analysis_model.model_performance_graph']注意

有关更多详细信息,请参阅函数文档:类似于 help(qcr.analysis_position.report_graph)。

用法与示例

analysis_position.report的用法API

图形结果

注意

-

X 轴:交易日

-

Y 轴:

-

cum bench:基准的累计收益系列。 -

cum return wo cost:无成本投资组合的累计收益系列。 -