机器学习Python教程

Section outline

-

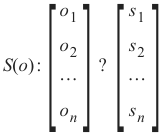

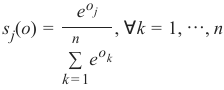

分类器 (Classifier)

分类器是指一个能将未标记实例映射到类别的程序或函数。

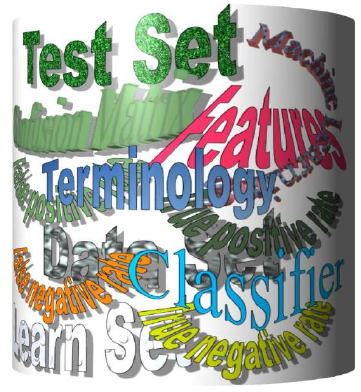

混淆矩阵 (Confusion Matrix)

混淆矩阵,也称为列联表或误差矩阵,用于可视化分类器的性能。

矩阵的列表示预测类别的实例,而行表示实际类别的实例。(注意:这也可以反过来。)

在二元分类的情况下,该表有 2 行 2 列。

示例:

这意味着分类器正确预测了 42 个男性实例,错误地将 8 个男性实例预测为女性。它正确预测了 32 个女性实例。有 18 个实例被错误地预测为男性而非女性。

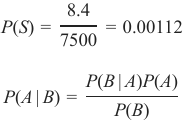

准确率 (Accuracy / Error Rate)

准确率是一个统计度量,定义为分类器做出的正确预测数除以分类器做出的预测总数。

我们上一个例子中的分类器正确预测了 42 个男性实例和 32 个女性实例。因此,准确率可以计算为:

准确率 = (42 + 32) / (42 + 8 + 18 + 32) = 0.72

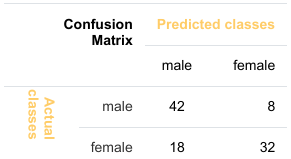

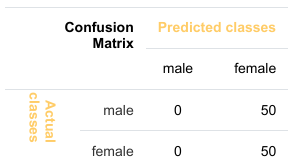

让我们假设我们有一个分类器,它总是预测“女性”。在这种情况下,我们的准确率为 50%。

我们将演示所谓的准确率悖论。

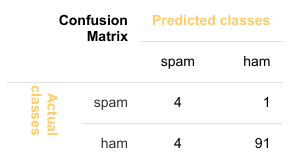

一个垃圾邮件识别分类器由以下混淆矩阵描述:

该分类器的准确率为 (4 + 91) / 100,即 95%。

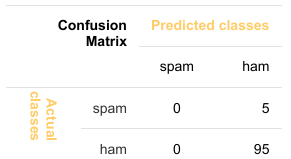

以下分类器仅预测“非垃圾邮件”,并且具有相同的准确率。

这个分类器的准确率是 95%,尽管它完全无法识别任何垃圾邮件。

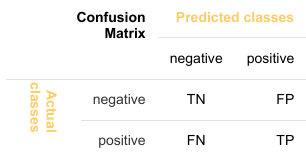

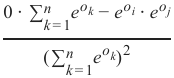

精确率 (Precision) 和 召回率 (Recall)

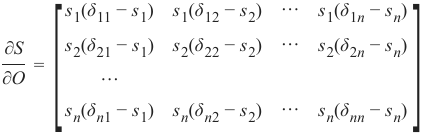

-

准确率 (Accuracy):

-

精确率 (Precision):

-

召回率 (Recall):

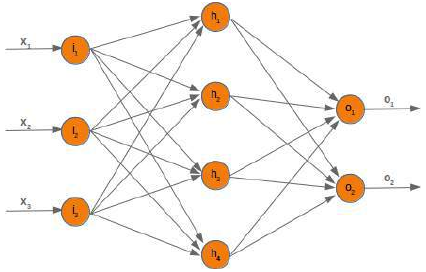

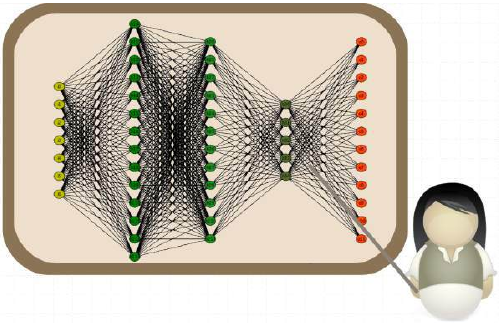

监督学习 (Supervised Learning)

机器学习程序被赋予输入数据和相应的标签。这意味着学习数据必须事先由人工标记。

无监督学习 (Unsupervised Learning)

没有向学习算法提供标签。算法必须自行找出输入数据的聚类。

强化学习 (Reinforcement Learning)

计算机程序与其环境动态交互。这意味着程序会收到正向和/或负向反馈以提高其性能。

CLASSIFIERA program or a function which maps from unlabeled instances to classes is called a classifier.CONFUSION MATRIXA confusion matrix, also called a contingeny table or error matrix, is used to visualize the performance of aclassifier.The columns of the matrix represent the instances of the predicted classes and the rows represent the instancesof the actual class. (Note: It can be the other way around as well.)In the case of binary classification the table has 2 rows and 2 columns.Example:3ConfusionMatrixPredictedmaleclassesfemalecl a sA c male428tsueaslfemale1832This means that the classifier correctly predicted a male person in 42 cases and it wrongly predicted 8 maleinstances as female. It correctly predicted 32 instances as female. 18 cases had been wrongly predicted as maleinstead of female.ACCURACY (ERROR RATE)Accuracy is a statistical measure which is defined as the quotient of correct predictions made by a classifierdivided by the sum of predictions made by the classifier.The classifier in our previous example predicted correctly predicted 42 male instances and 32 female instance.Therefore, the accuracy can be calculated by:accuracy = (42 + 32) / (42 + 8 + 18 + 32)which is 0.72Let's assume we have a classifier, which always predicts "female". We have an accuracy of 50 % in this case.ConfusionMatrixPredictedmaleclassesfemalecl a sA c male050stueaslfemale050We will demonstrate the so-called accuracy paradox.A spam recogition classifier is described by the following confusion matrix:4ConfusionMatrixPredictedspamclasseshamcl a sA c spam41tsueaslham491The accuracy of this classifier is (4 + 91) / 100, i.e. 95 %.The following classifier predicts solely "ham" and has the same accuracy.ConfusionMatrixPredictedspamclasseshamcl a sA c spam05tsueaslham095The accuracy of this classifier is 95%, even though it is not capable of recognizing any spam at all.PRECISION AND RECALLConfusionMatrixPredictednegativeclassespositivecl a sA c negativeTNFPtsueaslpositiveFNTPAccuracy: (TN + TP) / (TN + TP + FN + FP)Precision: TP / (TP + FP)5Recall: TP / (TP + FN)SUPERVISED LEARNINGThe machine learning program is both given the input data and the corresponding labelling. This means thatthe learn data has to be labelled by a human being beforehand.UNSUPERVISED LEARNINGNo labels are provided to the learning algorithm. The algorithm has to figure out the a clustering of the inputdata.REINFORCEMENT LEARNINGA computer program dynamically interacts with its environment. This means that the program receivespositive and/or negative feedback to improve it performance. -

-

机器学习简介:数据、经验与评估

机器学习的核心在于让模型适应数据。因此,首先我们需要了解数据如何被表示,以便计算机能够理解。

机器学习的核心在于让模型适应数据。因此,首先我们需要了解数据如何被表示,以便计算机能够理解。在本章开头,我们引用了汤姆·米切尔 (Tom Mitchell) 对机器学习的定义:“一个设计良好的学习问题:如果一个计算机程序在任务 T 上的表现,由性能度量 P 来衡量,通过经验 E 得到提升,那么就称该程序从经验 E 中学习。”数据是机器学习的“原材料”,机器学习正是从数据中学习。在米切尔的定义中,“数据”隐藏在“经验 E”和“性能度量 P”这两个术语背后。如前所述,我们需要带标签的数据来训练和测试我们的算法。

然而,在开始训练分类器之前,我们强烈建议您熟悉您的数据。Numpy 提供了理想的数据结构来表示您的数据,而 Matplotlib 则为数据可视化提供了强大的功能。

接下来,我们将使用 sklearn 模块中的数据来演示如何完成这些操作。

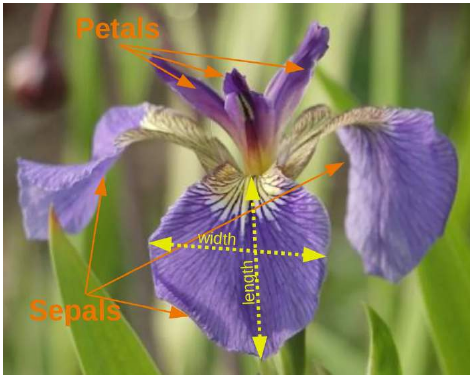

Iris 数据集:机器学习界的“Hello World”

您看过的第一个程序是什么?我敢打赌,很可能是一个用某种编程语言输出“Hello World”的程序。我大概率是对的。几乎所有编程入门书籍或教程都以这样的程序开始。这个传统可以追溯到 1968 年布莱恩·柯尼汉 (Brian Kernighan) 和丹尼斯·里奇 (Dennis Ritchie) 合著的《C 语言程序设计》一书!

同样,您在机器学习入门教程中看到的第一个数据集极有可能是“Iris 数据集”。Iris 数据集包含了来自 3 种不同鸢尾花(Iris)的 150 个样本的测量数据:

-

Setosa(山鸢尾)

-

Versicolor(变色鸢尾)

-

Virginica(维吉尼亚鸢尾)

Iris 数据集因其简单性而经常被使用。这个数据集包含在 scikit-learn 中,但在深入研究 Iris 数据集之前,我们先来看看 scikit-learn 中可用的其他数据集。

Machine learning is about adapting

models to data. For this reason we begin

by showing how data can be represented

in order to be understood by the computer.

At the beginning of this chapter we quoted

Tom Mitchell's definition of machine

learning: "Well posed Learning Problem:

A computer program is said to learn from

experience E with respect to some task T

and some performance measure P, if its

performance on T, as measured by P,

improves with experience E." Data is the

"raw material" for machine learning. It

learns from data. In Mitchell's definition,

"data" is hidden behind the terms

"experience E" and "performance measure

P". As mentioned earlier, we need labeled

data to learn and test our algorithm.

However, it is recommended that you

familiarize yourself with your data before

you begin training your classifier.

Numpy offers ideal data structures to

represent your data and Matplotlib offers great possibilities for visualizing your data.

In the following, we want to show how to do this using the data in the sklearn module.

IRIS DATASET, "HELLO WORLD" OF MACHINE LEARNING

What was the first program you saw? I bet it might have been a program giving out "Hello World" in some

programming language. Most likely I'm right. Almost every introductory book or tutorial on programming

starts with such a program. It's a tradition that goes back to the 1968 book "The C Programming Language" by

Brian Kernighan and Dennis Ritchie!

The likelihood that the first dataset you will see in an introductory tutorial on machine learning will be the

"Iris dataset" is similarly high. The Iris dataset contains the measurements of 150 iris flowers from 3 different

species:

••Iris-Setosa,

Iris-Versicolor,and

15

IrisIrisIris• Iris-Virginica.

Setosa

Versicolor

Virginica

16

The iris dataset is often used for its simplicity. This dataset is contained in scikit-learn, but before we have a

deeper look into the Iris dataset we will look at the other datasets available in scikit-learn. -

-

例如,scikit-learn 提供了关于这些鸢尾花物种的非常直接的数据集。该数据集包含以下内容:

-

Iris 数据集的特征(Features):

-

萼片长度(单位:厘米)

-

萼片宽度(单位:厘米)

-

花瓣长度(单位:厘米)

-

花瓣宽度(单位:厘米)

-

-

要预测的目标类别(Target classes):

-

鸢尾花-Setosa

-

鸢尾花-Versicolor

-

鸢尾花-Virginica

-

scikit-learn 内嵌了一份 Iris CSV 文件,并提供了一个辅助函数来将其加载到 numpy 数组中:

Pythonfrom sklearn.datasets import load_iris iris = load_iris()生成的数据集是一个 Bunch 对象:

Pythontype(iris)输出:

sklearn.utils.Bunch您可以使用

keys()方法查看此数据类型可用的内容:Pythoniris.keys()输出:

dict_keys(['data', 'target', 'target_names', 'DESCR', 'feature_names', 'filename'])Bunch 对象类似于字典,但它还允许以属性方式访问键:

Pythonprint(iris["target_names"]) print(iris.target_names)输出:

['setosa' 'versicolor' 'virginica'] ['setosa' 'versicolor' 'virginica']每个样本花的特征存储在数据集的

data属性中:Pythonn_samples, n_features = iris.data.shape print('样本数量:', n_samples) print('特征数量:', n_features) # 第一个样本(第一朵花)的萼片长度、萼片宽度、花瓣长度和花瓣宽度 print(iris.data[0])输出:

样本数量: 150 特征数量: 4 [5.1 3.5 1.4 0.2]每朵花的特征都存储在数据集的

data属性中。让我们看一些样本:Python# 索引为 12, 26, 89 和 114 的花 iris.data[[12, 26, 89, 114]]输出:

array([[4.8, 3. , 1.4, 0.1], [5. , 3.4, 1.6, 0.4], [5.5, 2.5, 4. , 1.3], [5.8, 2.8, 5.1, 2.4]])关于每个样本类别的信息,即标签,存储在数据集的

target属性中:Pythonprint(iris.data.shape) print(iris.target.shape)输出:

(150, 4) (150,)Pythonprint(iris.target)输出:

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2]通过使用 NumPy 的

bincount函数,我们可以看到该数据集中的类别分布均匀——每个物种有 50 朵花:Pythonimport numpy as np np.bincount(iris.target)输出:

array([50, 50, 50])-

类别 0: 鸢尾花-Setosa

-

类别 1: 鸢尾花-Versicolor

-

类别 2: 鸢尾花-Virginica

这些类别名称存储在最后一个属性,即

target_names中:Pythonprint(iris.target_names)输出:

['setosa' 'versicolor' 'virginica']我们 Iris 数据集中每个样本类别的信息存储在数据集的

target属性中:Pythonprint(iris.target)输出:

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2]除了数据本身的形状,我们还可以检查标签(即

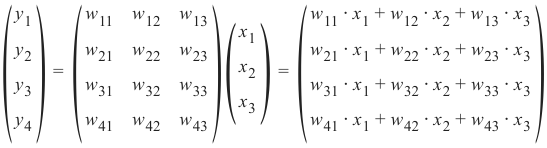

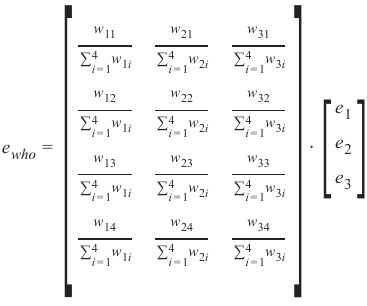

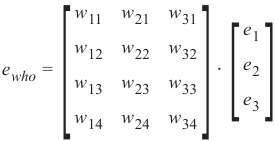

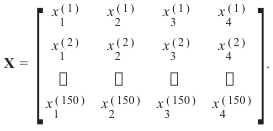

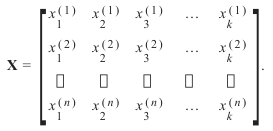

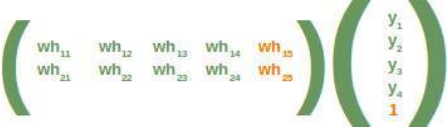

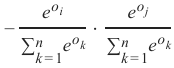

target.shape)的形状:每个花样本是数据数组中的一行,列(特征)表示以厘米为单位的花的测量值。例如,我们可以用以下格式表示这个由150个样本和4个特征组成的虹膜数据集,一个二维数组或矩阵r150 × 4:

上标表示第i行,下标表示第j个特征。一般来说,我们有n行k列:

Python

Pythonprint(iris.data.shape) print(iris.target.shape)输出:

(150, 4) (150,)NumPy 的

bincount函数可以计算非负整数数组中每个值的出现次数。我们可以用它来检查数据集中类别的分布:Pythonimport numpy as np np.bincount(iris.target)输出:

array([50, 50, 50])我们可以看到这些类别是均匀分布的——每个物种有 50 朵花,即:

-

类别 0: 鸢尾花-Setosa

-

类别 1: 鸢尾花-Versicolor

-

类别 2: 鸢尾花-Virginica

这些类别名称存储在最后一个属性,即

target_names中:Pythonprint(iris.target_names)输出:

['setosa' 'versicolor' 'virginica']

For example, scikit-learn has a very straightforward set of data on these iris species. The data consist of the

following:

• Features in the Iris dataset:

1.

2.

3.

4.

sepal length in cm

sepal width in cm

petal length in cm

petal width in cm

• Target classes to predict:

1.2.3.IrisIrisIrisSetosa

Versicolour

Virginica

scikit-learnarrays:

embeds a copy of the iris CSV file along with a helper function to load it into numpy

18

from sklearn.datasets import load_iris

iris = load_iris()

The resulting dataset is a Bunch object:

type(iris)

Output:sklearn.utils.Bunch

You can see what's available for this data type by using the method keys() :

iris.keys()

Output:dict_keys(['data', 'target', 'target_names', 'DESCR', 'featur

e_names', 'filename'])

A Bunch object is similar to a dicitionary, but it additionally allows accessing the keys in an attribute style:

print(iris["target_names"])

print(iris.target_names)

['setosa' 'versicolor' 'virginica']

['setosa' 'versicolor' 'virginica']

The features of each sample flower are stored in the data attribute of the dataset:

n_samples, n_features = iris.data.shape

print('Number of samples:', n_samples)

print('Number of features:', n_features)

# the sepal length, sepal width, petal length and petal width of t

he first sample (first flower)

print(iris.data[0])

Number of samples: 150

Number of features: 4

[5.1 3.5 1.4 0.2]

The feautures of each flower are stored in the data attribute of the data set. Let's take a look at some of the

samples:

# Flowers with the indices 12, 26, 89, and 114

iris.data[[12, 26, 89, 114]]

19

Output:array([[4.8, 3. , 1.4, 0.1],

[5. , 3.4, 1.6, 0.4],

[5.5, 2.5, 4. , 1.3],

[5.8, 2.8, 5.1, 2.4]])

The information about the class of each sample, i.e. the labels, is stored in the "target" attribute of the data set:

print(iris.data.shape)

print(iris.target.shape)

(150, 4)

(150,)

print(iris.target)

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2

2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2

2 2]

import numpy as np

np.bincount(iris.target)

Output:array([50, 50, 50])

Using NumPy's bincount function (above) we can see that the classes in this dataset are evenly distributed -

there are 50 flowers of each species, with

•••class 0: Iris Setosa

class 1: Iris Versicolor

class 2: Iris Virginica

These class names are stored in the last attribute, namely target_names :

print(iris.target_names)

['setosa' 'versicolor' 'virginica']

20

The information about the class of each sample of our Iris dataset is stored in the target attribute of the

dataset:

print(iris.target)

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2

2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2

2 2]

Beside of the shape of the data, we can also check the shape of the labels, i.e. the target.shape :

Each flower sample is one row in the data array, and the columns (features) represent the flower measurements

in centimeters. For instance, we can represent this Iris dataset, consisting of 150 samples and 4 features, a

2-dimensional array or matrix R 150 × 4 in the following format:

X =

[

x x x 1

(1

1

150 (2)

(1)

)

xx x 2

(2

2

(1)

(2)

150 )

xx x 3

( 3

3

(1)

150 (2)

)

x x x 4

(4

4

150 (2)

(1)

)

]

.

The superscript denotes the ith row, and the subscript denotes the jth feature, respectively.

Generally, we have n rows and k columns:

X =

[

x x x 1

1

1

(2)

(1)

( n )

x x x2

2

2

(2)

( (1)

n )

x x x 3

3

3

(1)

(2)

( n )

...

...

...

x x x k

k

k

( (2)

(1)

n )

]

.

print(iris.data.shape)

21

print(iris.target.shape)

(150, 4)

(150,)

bincount of NumPy counts the number of occurrences of each value in an array of non-negative integers.

We can use this to check the distribution of the classes in the dataset:

import numpy as np

np.bincount(iris.target)

Output:array([50, 50, 50])

We can see that the classes are distributed uniformly - there are 50 flowers from each species, i.e.

•••class 0: Iris-Setosa

class 1: Iris-Versicolor

class 2: Iris-Virginica

These class names are stored in the last attribute, namely target_names :

print(iris.target_names)

['setosa' 'versicolor' 'virginica'] -

-

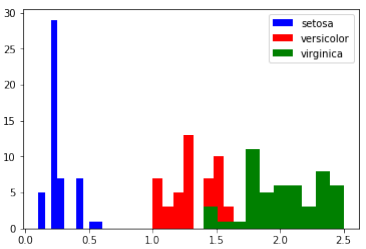

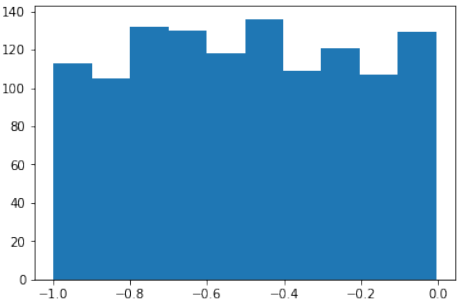

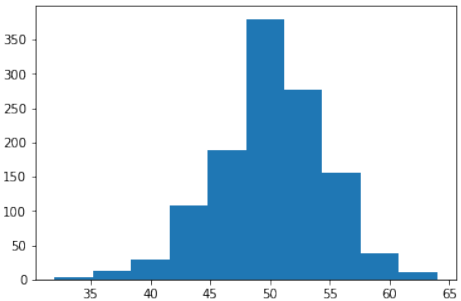

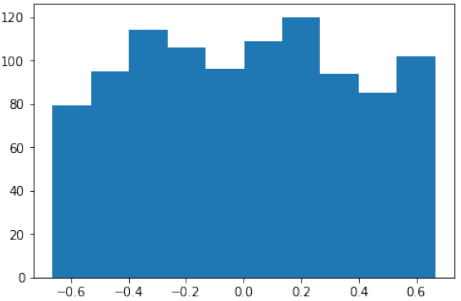

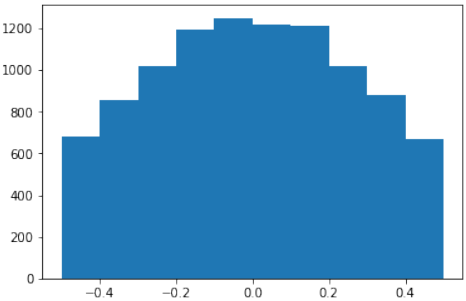

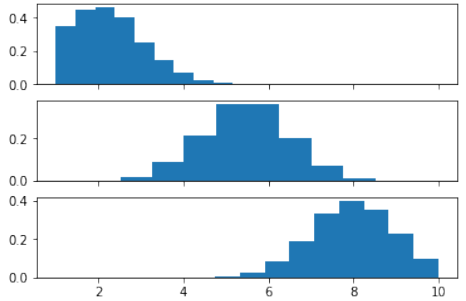

特征数据是四维的,但我们可以通过简单的直方图或散点图一次性可视化其中的一到两个维度。

Pythonfrom sklearn.datasets import load_iris iris = load_iris() # 打印 target 为 1 的前 5 个样本数据 print(iris.data[iris.target==1][:5]) # 打印 target 为 1 的前 5 个样本的第 0 个特征 print(iris.data[iris.target==1, 0][:5])输出:

[[7. 3.2 4.7 1.4] [6.4 3.2 4.5 1.5] [6.9 3.1 4.9 1.5] [5.5 2.3 4. 1.3] [6.5 2.8 4.6 1.5]] [7. 6.4 6.9 5.5 6.5]

特征直方图

我们可以使用直方图来可视化单个特征的分布,并按类别进行区分。

Pythonimport matplotlib.pyplot as plt fig, ax = plt.subplots() x_index = 3 # 选择要可视化的特征索引 (例如:3 代表花瓣宽度) colors = ['blue', 'red', 'green'] # 遍历每个类别并绘制直方图 for label, color in zip(range(len(iris.target_names)), colors): ax.hist(iris.data[iris.target==label, x_index], label=iris.target_names[label], color=color) ax.set_xlabel(iris.feature_names[x_index]) # 设置 x 轴标签为特征名称 ax.legend(loc='upper right') # 显示图例 fig.show()

练习

请查看其他特征(即花瓣长度、萼片宽度和萼片长度)的直方图。

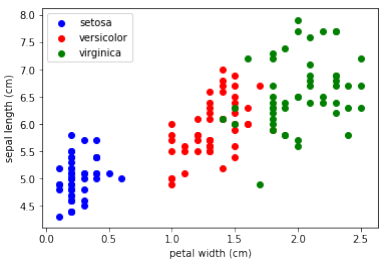

两个特征的散点图

散点图可以同时展示两个特征在同一张图中的关系:

Pythonimport matplotlib.pyplot as plt fig, ax = plt.subplots() x_index = 3 # x 轴特征索引 (例如:3 代表花瓣宽度) y_index = 0 # y 轴特征索引 (例如:0 代表萼片长度) colors = ['blue', 'red', 'green'] # 遍历每个类别并绘制散点图 for label, color in zip(range(len(iris.target_names)), colors): ax.scatter(iris.data[iris.target==label, x_index], iris.data[iris.target==label, y_index], label=iris.target_names[label], c=color) ax.set_xlabel(iris.feature_names[x_index]) # 设置 x 轴标签 ax.set_ylabel(iris.feature_names[y_index]) # 设置 y 轴标签 ax.legend(loc='upper left') # 显示图例 plt.show()

练习

在上面的脚本中改变

x_index和y_index,找到一个能够最大程度地区分这三个类别的两个参数组合。

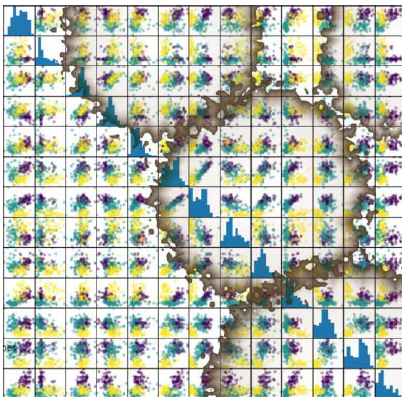

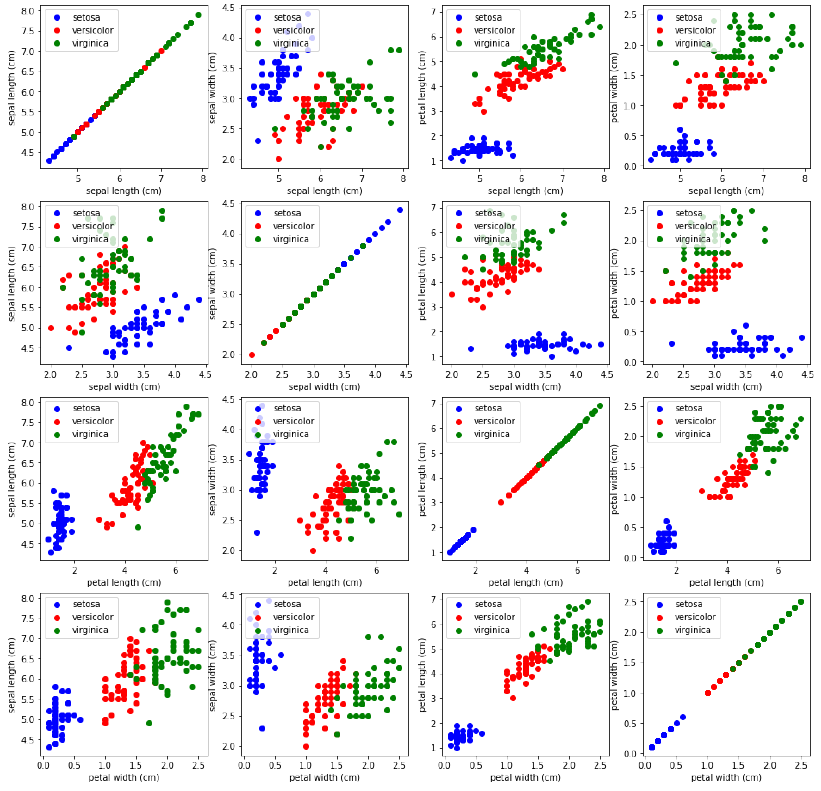

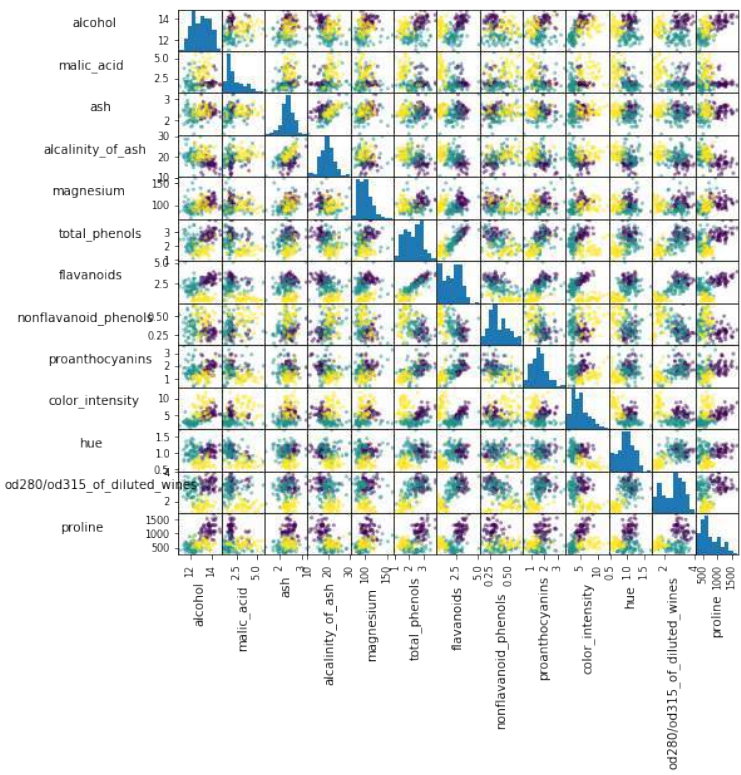

泛化

我们现在将所有特征组合在一个综合图中进行展示:

Pythonimport matplotlib.pyplot as plt n = len(iris.feature_names) # 特征数量 fig, ax = plt.subplots(n, n, figsize=(16, 16)) # 创建 n x n 的子图网格 colors = ['blue', 'red', 'green'] # 遍历所有特征组合 for x in range(n): for y in range(n): xname = iris.feature_names[x] yname = iris.feature_names[y] # 遍历每个类别并绘制散点图 for color_ind in range(len(iris.target_names)): ax[x, y].scatter(iris.data[iris.target==color_ind, x], iris.data[iris.target==color_ind, y], label=iris.target_names[color_ind], c=colors[color_ind]) ax[x, y].set_xlabel(xname) # 设置 x 轴标签 ax[x, y].set_ylabel(yname) # 设置 y 轴标签 ax[x, y].legend(loc='upper left') # 显示图例 plt.show()

The feauture data is four dimensional, but we can visualize one or two of the dimensions at a time using a

simple histogram or scatter-plot.

from sklearn.datasets import load_iris

iris = load_iris()

print(iris.data[iris.target==1][:5])

print(iris.data[iris.target==1, 0][:5])

[[7. 3.2 4.7 1.4]

[6.4 3.2 4.5 1.5]

[6.9 3.1 4.9 1.5]

[5.5 2.3 4. 1.3]

[6.5 2.8 4.6 1.5]]

[7. 6.4 6.9 5.5 6.5]

HISTOGRAMS OF THE FEATURES

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

x_index = 3

colors = ['blue', 'red', 'green']

for label, color in zip(range(len(iris.target_names)), colors):

ax.hist(iris.data[iris.target==label, x_index],

label=iris.target_names[label],

color=color)

ax.set_xlabel(iris.feature_names[x_index])

ax.legend(loc='upper right')

fig.show()

23

EXERCISE

Look at the histograms of the other features, i.e. petal length, sepal widt and sepal length.

SCATTERPLOT WITH TWO FEATURES

The appearance diagram shows two features in one diagram:

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

x_index = 3

y_index = 0

colors = ['blue', 'red', 'green']

for label, color in zip(range(len(iris.target_names)), colors):

ax.scatter(iris.data[iris.target==label, x_index],

iris.data[iris.target==label, y_index],

label=iris.target_names[label],

c=color)

ax.set_xlabel(iris.feature_names[x_index])

ax.set_ylabel(iris.feature_names[y_index])

ax.legend(loc='upper left')

plt.show()

24

EXERCISE

Change x_index and y_index in the above script

Change x_index and y_index in the above script and find a combination of two parameters which maximally

separate the three classes.

GENERALIZATION

We will now look at all feature combinations in one combined diagram:

import matplotlib.pyplot as plt

n = len(iris.feature_names)

fig, ax = plt.subplots(n, n, figsize=(16, 16))

colors = ['blue', 'red', 'green']

for x in range:

for y in range:

xname = iris.feature_names[x]

yname = iris.feature_names[y]

for color_ind in range(len(iris.target_names)):

ax[x, y].scatter(iris.data[iris.target==color_ind,

x],

iris.data[iris.target==color_ind, y],

label=iris.target_names[color_ind],

c=colors[color_ind])

25

ax[x, y].set_xlabel(xname)

ax[x, y].set_ylabel(yname)

ax[x, y].legend(loc='upper left')

plt.show() -

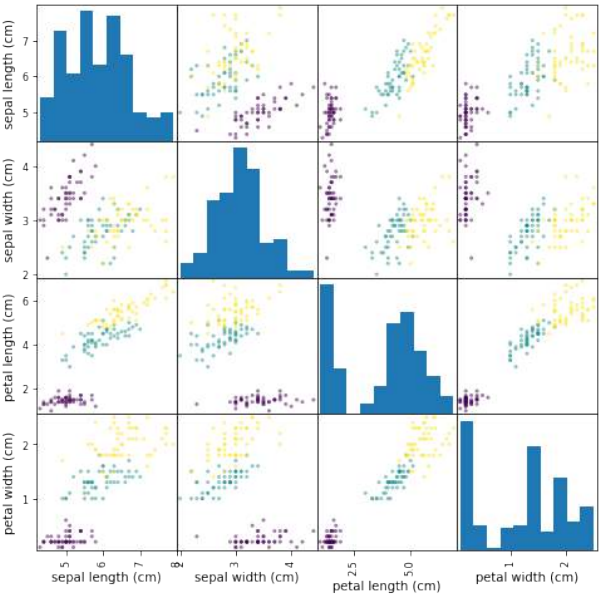

我们也可以不手动操作,而是使用 pandas 模块提供的散点图矩阵。

散点图矩阵可以显示数据集中所有特征之间的散点图,以及每个特征的分布直方图。

Pythonimport pandas as pd import matplotlib.pyplot as plt # 导入 matplotlib 以便显示图表 # 将 Iris 数据转换为 Pandas DataFrame iris_df = pd.DataFrame(iris.data, columns=iris.feature_names) # 生成散点图矩阵 pd.plotting.scatter_matrix(iris_df, c=iris.target, # 根据目标类别着色 figsize=(8, 8) # 设置图表大小 ) plt.show() # 显示图表

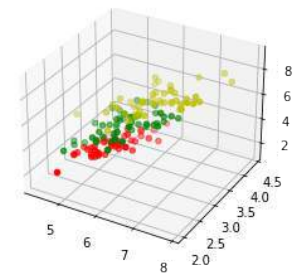

3D 可视化

为了更全面地理解数据,我们可以尝试进行三维可视化。

Pythonimport matplotlib.pyplot as plt from sklearn.datasets import load_iris from mpl_toolkits.mplot3d import Axes3D # 导入 3D 绘图工具 iris = load_iris() X = [] for iclass in range(3): X.append([[], [], []]) # 为每个类别初始化三个空列表,分别用于存储 x, y, z 坐标 # 遍历 Iris 数据集,根据类别将数据分配到 X 中 for i in range(len(iris.data)): if iris.target[i] == iclass: X[iclass][0].append(iris.data[i][0]) # 萼片长度作为 x 轴 X[iclass][1].append(iris.data[i][1]) # 萼片宽度作为 y 轴 X[iclass][2].append(sum(iris.data[i][2:])) # 花瓣长度和花瓣宽度之和作为 z 轴 colours = ("r", "g", "y") # 定义不同类别的颜色 (红、绿、黄) fig = plt.figure() ax = fig.add_subplot(111, projection='3d') # 创建一个 3D 子图 # 为每个类别绘制散点图 for iclass in range(3): ax.scatter(X[iclass][0], X[iclass][1], X[iclass][2], c=colours[iclass]) plt.show() # 显示 3D 散点图

Instead of doing it manually we can also use the scatterplot matrix provided by the pandas module.

Scatterplot matrices show scatter plots between all features in the data set, as well as histograms to show the

distribution of each feature.

import pandas as pd

iris_df = pd.DataFrame(iris.data, columns=iris.feature_names)

pd.plotting.scatter_matrix(iris_df,

c=iris.target,

figsize=(8, 8)

);

27

3-DIMENSIONAL VISUALIZATION

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

from mpl_toolkits.mplot3d import Axes3D

iris = load_iris()

X = []

for iclass in range(3):

X.append([[], [], []])

for i in range(len(iris.data)):

if iris.target[i] == iclass:

X[iclass][0].append(iris.data[i][0])

X[iclass][1].append(iris.data[i][1])

X[iclass][2].append(sum(iris.data[i][2:]))

colours = ("r", "g", "y")

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

for iclass in range(3):

ax.scatter(X[iclass][0], X[iclass][1], X[iclass][2], c=colour

s[iclass])

plt.show() -

Scikit-learn 提供了大量的数据集,用于测试学习算法。它们主要分为三种类型:

Scikit-learn 提供了大量的数据集,用于测试学习算法。它们主要分为三种类型:-

打包数据 (Packaged Data):这些小型数据集与 scikit-learn 安装包一同提供,可以使用

sklearn.datasets.load_*工具进行加载。 -

可下载数据 (Downloadable Data):这些大型数据集可供下载,scikit-learn 提供了简化下载过程的工具。这些工具可以在

sklearn.datasets.fetch_*中找到。 -

生成数据 (Generated Data):有几种数据集是基于随机种子从模型中生成的。这些可以在

sklearn.datasets.make_*中获取。

您可以使用 IPython 的 Tab 补全功能来探索可用的数据集加载器、抓取器和生成器。在从

sklearn导入datasets子模块后,输入:datasets.load_<TAB>或

datasets.fetch_<TAB>或

datasets.make_<TAB>即可查看可用函数的列表。

数据和标签的结构

Scikit-learn 中的数据在大多数情况下都保存为二维的 Numpy 数组,其形状为

(n, m)。许多算法也接受相同形状的scipy.sparse矩阵。-

n(n_samples):样本数量。每个样本都是一个需要处理(例如分类)的项。一个样本可以是一篇文档、一张图片、一段声音、一段视频、一个天文物体、数据库或 CSV 文件中的一行,或者任何您可以用一组固定的定量特征来描述的事物。 -

m(n_features):特征数量,即可以定量描述每个项的独特属性的数量。特征通常是实数值,但在某些情况下也可以是布尔值或离散值。

Pythonfrom sklearn import datasets请注意:这些数据集中的许多都相当大,可能需要很长时间才能下载!

Scikit-learn makes available a host of

datasets for testing learning algorithms.

They come in three flavors:

•Packaged Data: these small

datasets are packaged with

the scikit-learn installation,

and can be downloaded

using the tools in

••sklearn.datasets.load_*

Downloadable Data: these larger datasets are available for download, and scikit-learn includes

tools which streamline this process. These tools can be found in

sklearn.datasets.fetch_*

Generated Data: there are several datasets which are generated from models based on a random

seed. These are available in the sklearn.datasets.make_*

You can explore the available dataset loaders, fetchers, and generators using IPython's tab-completion

functionality. After importing the datasets submodule from sklearn , type

datasets.load_<TAB>

or

datasets.fetch_<TAB>

or

datasets.make_<TAB>

to see a list of available functions.

STRUCTURE OF DATA AND LABELS

Data in scikit-learn is in most cases saved as two-dimensional Numpy arrays with the shapealgorithms also accept scipy.sparse matrices of the same shape.

(n, m) . Many

29

••n: (n_samples) The number of samples: each sample is an item to process (e.g. classify). A

sample can be a document, a picture, a sound, a video, an astronomical object, a row in database

or CSV file, or whatever you can describe with a fixed set of quantitative traits.

m: (n_features) The number of features or distinct traits that can be used to describe each item in

a quantitative manner. Features are generally real-valued, but may be Boolean or discrete-valued

in some cases.

from sklearn import datasets

Be warned: many of these datasets are quite large, and can take a long time to download! -

-

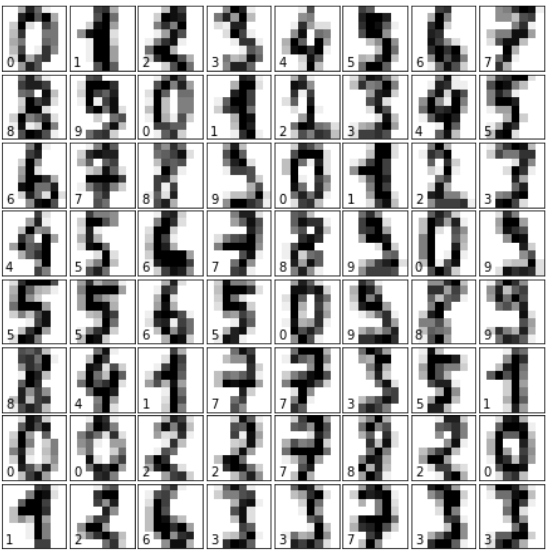

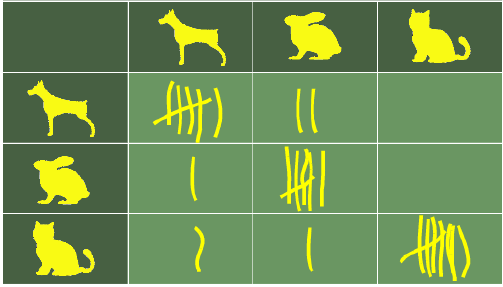

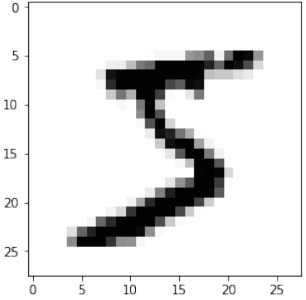

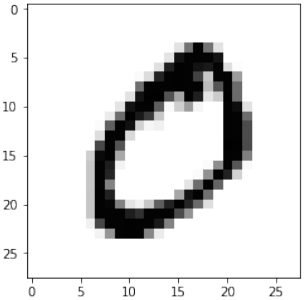

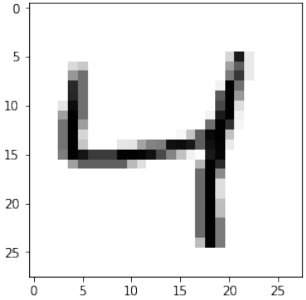

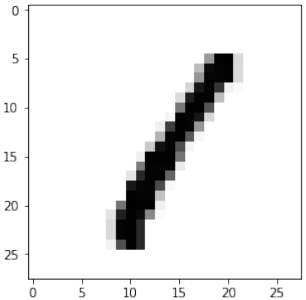

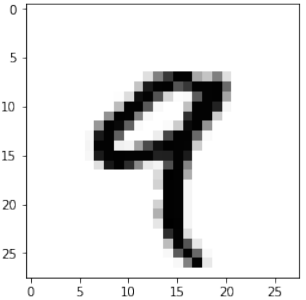

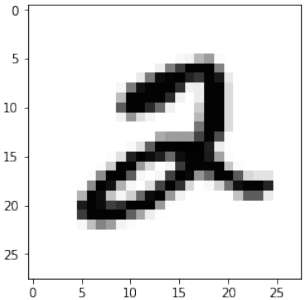

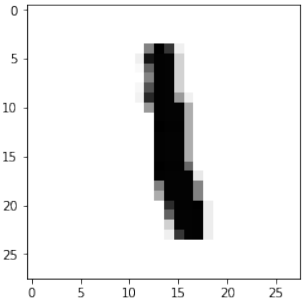

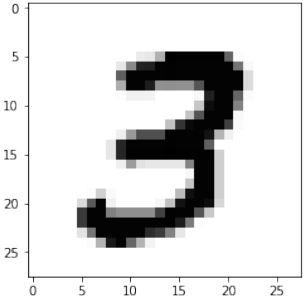

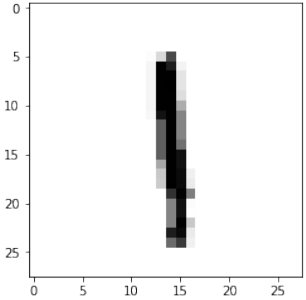

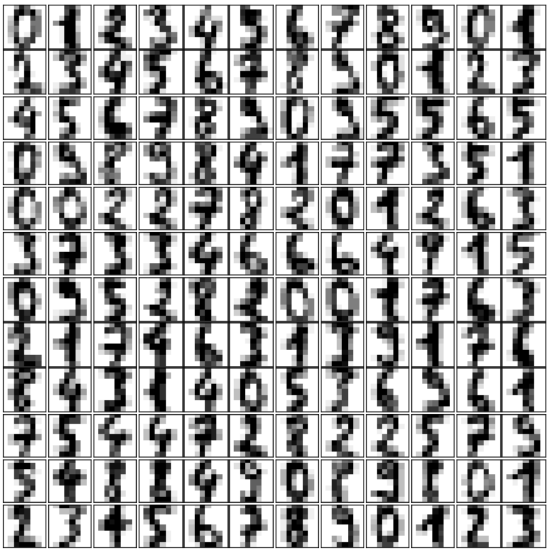

我们将更深入地研究这些数据集中的一个。我们来看一下数字数据集 (digits data set)。我们先加载它:

Pythonfrom sklearn.datasets import load_digits digits = load_digits()同样,我们可以通过查看 "keys" 来获取可用属性的概览:

Pythondigits.keys()输出:

dict_keys(['data', 'target', 'target_names', 'images', 'DESCR'])我们来看看项目和特征的数量:

Pythonn_samples, n_features = digits.data.shape print((n_samples, n_features))输出:

(1797, 64)Pythonprint(digits.data[0]) print(digits.target)输出:

[ 0. 0. 5. 13. 9. 1. 0. 0. 0. 0. 13. 15. 10. 15. 5. 0. 0. 3. 15. 2. 0. 11. 8. 0. 0. 4. 12. 0. 0. 8. 8. 0. 0. 5. 16. 8. 0. 0. 9. 8. 0. 0. 4. 11. 0. 1. 12. 7. 0. 0. 2. 14. 5. 0. 0. 0. 0. 6. 13. 10. 0. 0. 0.] [0 1 2 ... 8 9 8]数据也可以通过

digits.images获取。这是以 8 行 8 列形式表示的图像的原始数据。通过 "data",一张图像对应一个长度为 64 的一维 Numpy 数组;而 "images" 表示则包含形状为 (8, 8) 的二维 Numpy 数组。

Pythonprint("一个项目的形状: ", digits.data[0].shape) print("一个项目的数据类型: ", type(digits.data[0])) print("一个项目的形状: ", digits.images[0].shape) print("一个项目的数据类型: ", type(digits.images[0]))输出:

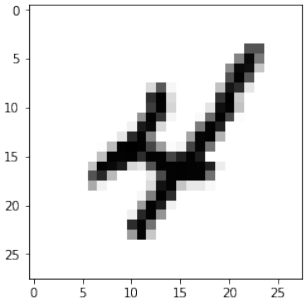

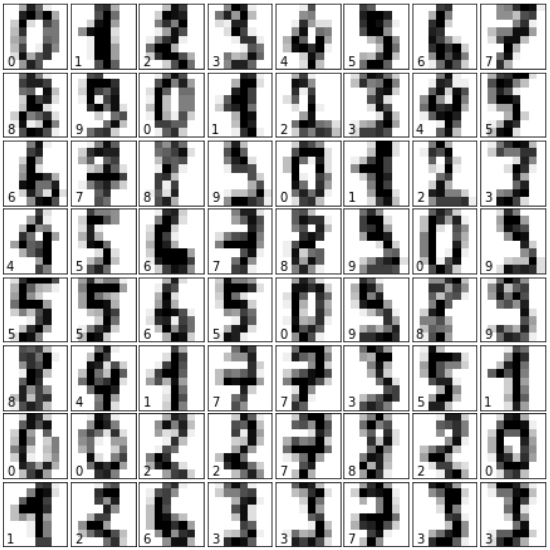

一个项目的形状: (64,) 一个项目的数据类型: <class 'numpy.ndarray'> 一个项目的形状: (8, 8) 一个项目的数据类型: <class 'numpy.ndarray'>让我们将数据可视化。这比我们上面使用的简单散点图稍微复杂一些,但我们可以很快完成。

Pythonimport matplotlib.pyplot as plt # 设置图表 fig = plt.figure(figsize=(6, 6)) # 图表大小(英寸) fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.05, wspace=0.05) # 绘制数字:每个图像都是 8x8 像素 for i in range(64): ax = fig.add_subplot(8, 8, i + 1, xticks=[], yticks=[]) ax.imshow(digits.images[i], cmap=plt.cm.binary, interpolation='nearest') # 用目标值标记图像 ax.text(0, 7, str(digits.target[i])) plt.show()

练习

练习 1

sklearn 中包含一个“葡萄酒数据集 (wine data set)”。

-

找到并加载此数据集。

-

您能找到它的描述吗?

-

类别的名称是什么?

-

特征是什么?

-

数据和带标签的数据在哪里?

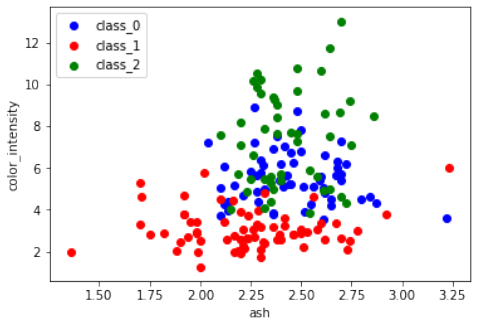

练习 2

创建葡萄酒数据集中特征

ash和color_intensity的散点图。练习 3

创建葡萄酒数据集特征的散点矩阵。

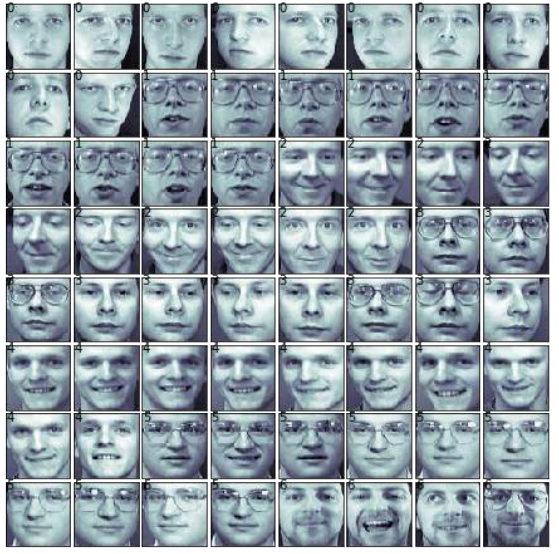

练习 4

获取 Olivetti 人脸数据集并可视化这些人脸。

解决方案

练习 1 解决方案

加载“葡萄酒数据集”:

Pythonfrom sklearn import datasets wine = datasets.load_wine()描述可以通过 "DESCR" 访问:

Pythonprint(wine.DESCR)类别的名称和特征可以通过以下方式获取:

Pythonprint(wine.target_names) print(wine.feature_names)输出:

['class_0' 'class_1' 'class_2'] ['alcohol', 'malic_acid', 'ash', 'alcalinity_of_ash', 'magnesium', 'total_phenols', 'flavanoids', 'nonflavanoid_phenols', 'proanthocyanins', 'color_intensity', 'hue', 'od280/od315_of_diluted_wines', 'proline']数据和带标签的数据:

Pythondata = wine.data labelled_data = wine.target练习 2 解决方案

Pythonfrom sklearn import datasets import matplotlib.pyplot as plt wine = datasets.load_wine() features = 'ash', 'color_intensity' features_index = [wine.feature_names.index(features[0]), wine.feature_names.index(features[1])] colors = ['blue', 'red', 'green'] for label, color in zip(range(len(wine.target_names)), colors): plt.scatter(wine.data[wine.target==label, features_index[0]], wine.data[wine.target==label, features_index[1]], label=wine.target_names[label], c=color) plt.xlabel(features[0]) plt.ylabel(features[1]) plt.legend(loc='upper left') plt.show()

练习 3 解决方案

Pythonimport pandas as pd from sklearn import datasets import matplotlib.pyplot as plt # 导入 matplotlib 以便显示图表 wine = datasets.load_wine() def rotate_labels(df, axes): """ 改变标签输出的旋转角度, y 轴标签水平,x 轴标签垂直 """ n = len(df.columns) for x in range(n): for y in range(n): # 获取子图的轴 ax = axes[x, y] # 使 x 轴名称垂直 ax.xaxis.label.set_rotation(90) # 使 y 轴名称水平 ax.yaxis.label.set_rotation(0) # 确保 y 轴名称在绘图区域之外 ax.yaxis.labelpad = 50 wine_df = pd.DataFrame(wine.data, columns=wine.feature_names) axs = pd.plotting.scatter_matrix(wine_df, c=wine.target, figsize=(8, 8), ) rotate_labels(wine_df, axs) plt.show() # 显示图表

练习 4 解决方案

Pythonfrom sklearn.datasets import fetch_olivetti_faces import numpy as np import matplotlib.pyplot as plt # 获取人脸数据 faces = fetch_olivetti_faces() faces.keys()输出:

dict_keys(['data', 'images', 'target', 'DESCR'])Pythonn_samples, n_features = faces.data.shape print((n_samples, n_features))输出:

(400, 4096)Pythonnp.sqrt(4096)输出:

64.0Pythonfaces.images.shape输出:

(400, 64, 64)Pythonfaces.data.shape输出:

(400, 4096)Pythonprint(np.all(faces.images.reshape((400, 4096)) == faces.data))输出:

TruePython# 设置图表 fig = plt.figure(figsize=(6, 6)) # 图表大小(英寸) fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.05, wspace=0.05) # 绘制人脸:每个图像是 64x64 像素 for i in range(64): ax = fig.add_subplot(8, 8, i + 1, xticks=[], yticks=[]) ax.imshow(faces.images[i], cmap=plt.cm.bone, interpolation='nearest') # 用目标值标记图像 ax.text(0, 7, str(faces.target[i])) plt.show()

更多数据集

sklearn 提供了许多其他数据集。如果您还需要更多,可以在维基百科的“机器学习研究数据集列表”中找到更多有用的信息。

We will have a closer look at one of these datasets. We look at the digits data set. We will load it first:

from sklearn.datasets import load_digits

digits = load_digits()

Again, we can get an overview of the available attributes by looking at the "keys":

digits.keys()

Output:dict_keys(['data', 'target', 'target_names', 'images', 'DESC

R'])

Let's have a look at the number of items and features:

n_samples, n_features = digits.data.shape

print((n_samples, n_features))

(1797, 64)

print(digits.data[0])

print(digits.target)

[ 0. 0. 5. 13. 9. 1. 0.

0.

0.

0. 13. 15. 10. 15.

5.

0.

0. 3.

15. 2. 0. 11. 8. 0. 0.

4. 12.

0.

0.

8.

8.

0.

0.

5.

8. 0.

0. 9. 8. 0. 0. 4. 11.

0.

1. 12.

7.

0.

0.

2. 14.

5. 1

0. 12.

0. 0. 0. 0. 6. 13. 10.

0.

0.

0.]

[0 1 2 ... 8 9 8]

The data is also available at digits.images. This is the raw data of the images in the form of 8 lines and 8

columns.

With "data" an image corresponds to a one-dimensional Numpy array with the length 64, and "images"

representation contains 2-dimensional numpy arrays with the shape (8, 8)

print("Shape of an item: ", digits.data[0].shape)

print("Data type of an item: ", type(digits.data[0]))

print("Shape of an item: ", digits.images[0].shape)

31

print("Data tpye of an item: ", type(digits.images[0]))

Shape of an item: (64,)

Data type of an item: <class 'numpy.ndarray'>

Shape of an item: (8, 8)

Data tpye of an item: <class 'numpy.ndarray'>

Let's visualize the data. It's little bit more involved than the simple scatter-plot we used above, but we can do it

rather quickly.

# set up the figure

fig = plt.figure(figsize=(6, 6)) # figure size in inches

fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.0

5, wspace=0.05)

# plot the digits: each image is 8x8 pixels

for i in range(64):

ax = fig.add_subplot(8, 8, i + 1, xticks=[], yticks=[])

ax.imshow(digits.images[i], cmap=plt.cm.binary, interpolatio

n='nearest')

# label the image with the target value

ax.text(0, 7, str(digits.target[i]))

32

EXERCISES

EXERCISE 1

sklearn contains a "wine data set".

•

•

•

•

•

Find and load this data set

Can you find a description?

What are the names of the classes?

What are the features?

Where is the data and the labeled data?

EXERCISE 2:

Create a scatter plot of the features ash and color_intensity of the wine data set.

33

EXERCISE 3:

Create a scatter matrix of the features of the wine dataset.

EXERCISE 4:

Fetch the Olivetti faces dataset and visualize the faces.

SOLUTIONS

SOLUTION TO EXERCISE 1

Loading the "wine data set":

from sklearn import datasets

wine = datasets.load_wine()

The description can be accessed via "DESCR":

In [ ]:

print(wine.DESCR)

The names of the classes and the features can be retrieved like this:

print(wine.target_names)

print(wine.feature_names)

['class_0' 'class_1' 'class_2']

['alcohol', 'malic_acid', 'ash', 'alcalinity_of_ash', 'magnesiu

m', 'total_phenols', 'flavanoids', 'nonflavanoid_phenols', 'proant

hocyanins', 'color_intensity', 'hue', 'od280/od315_of_diluted_wine

s', 'proline']

data = wine.data

labelled_data = wine.target

SOLUTION TO EXERCISE 2:

from sklearn import datasets

import matplotlib.pyplot as plt

34

wine = datasets.load_wine()

features = 'ash', 'color_intensity'

features_index = [wine.feature_names.index(features[0]),

wine.feature_names.index(features[1])]

colors = ['blue', 'red', 'green']

for label, color in zip(range(len(wine.target_names)), colors):

plt.scatter(wine.data[wine.target==label, features_index[0]],

wine.data[wine.target==label, features_index[1]],

label=wine.target_names[label],

c=color)

plt.xlabel(features[0])

plt.ylabel(features[1])

plt.legend(loc='upper left')

plt.show()

SOLUTION TO EXERCISE 3:

import pandas as pd

from sklearn import datasets

wine = datasets.load_wine()

def rotate_labels(df, axes):

""" changing the rotation of the label output,

y labels horizontal and x labels vertical """

35

n = len(df.columns)

for x in range:

for y in range:

# to get the axis of subplots

ax = axs[x, y]

# to make x axis name vertical

ax.xaxis.label.set_rotation(90)

# to make y axis name horizontal

ax.yaxis.label.set_rotation(0)

# to make sure y axis names are outside the plot area

ax.yaxis.labelpad = 50

wine_df = pd.DataFrame(wine.data, columns=wine.feature_names)

axs = pd.plotting.scatter_matrix(wine_df,

c=wine.target,

figsize=(8, 8),

);

rotate_labels(wine_df, axs)

36

SOLUTION TO EXERCISE 4

from sklearn.datasets import fetch_olivetti_faces

# fetch the faces data

faces = fetch_olivetti_faces()

faces.keys()

Output:dict_keys(['data', 'images', 'target', 'DESCR'])

37

n_samples, n_features = faces.data.shape

print((n_samples, n_features))

(400, 4096)

np.sqrt(4096)

Output:64.0

faces.images.shape

Output400, 64, 64)

faces.data.shape

Output400, 4096)

print(np.all(faces.images.reshape((400, 4096)) == faces.data))

True

# set up the figure

fig = plt.figure(figsize=(6, 6)) # figure size in inches

fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.0

5, wspace=0.05)

# plot the digits: each image is 8x8 pixels

for i in range(64):

ax = fig.add_subplot(8, 8, i + 1, xticks=[], yticks=[])

ax.imshow(faces.images[i], cmap=plt.cm.bone, interpolation='ne

arest')

# label the image with the target value

ax.text(0, 7, str(faces.target[i]))

38

FURTHER DATASETS

sklearn has many more datasets available. If you still need more, you will find more on this nice List of

datasets for machine-learning research at Wikipedia. -

-

现在我们将演示如何再次读取数据并将其重新分为数据和标签:

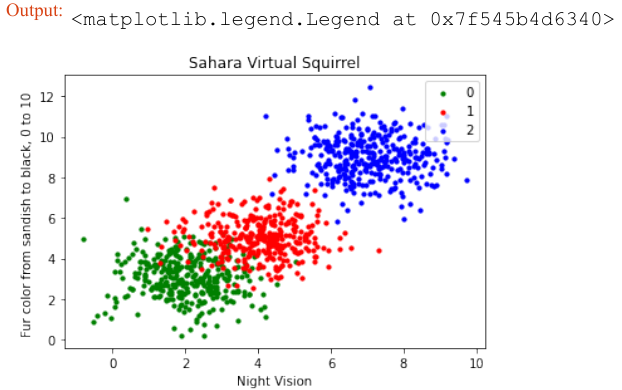

Pythonimport numpy as np # 从文件中加载数据 file_data = np.loadtxt("squirrels.txt") # 分离数据(所有列,除了最后一列) data = file_data[:, :-1] # 分离标签(最后一列) labels = file_data[:, 2:] # 将标签从二维列向量重新形状为一维数组 labels = labels.reshape((labels.shape[0]))我们将数据文件命名为

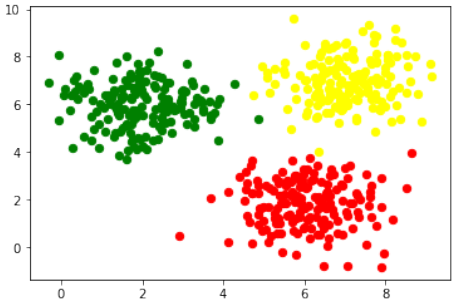

squirrels.txt,因为我们想象着一种生活在撒哈拉沙漠中的奇特动物。X 值代表这些动物的夜视能力,Y 值对应着毛皮的颜色,从沙色到黑色。我们有三种松鼠:0、1 和 2。(请注意,我们的松鼠是虚构的,与撒哈拉沙漠中真实的松鼠无关!)Pythonimport matplotlib.pyplot as plt colours = ('green', 'red', 'blue', 'magenta', 'yellow', 'cyan') n_classes = 3 # 类别数量 fig, ax = plt.subplots() # 遍历每个类别并绘制散点图 for n_class in range(0, n_classes): ax.scatter(data[labels==n_class, 0], data[labels==n_class, 1], c=colours[n_class], s=10, label=str(n_class)) ax.set(xlabel='夜视能力', ylabel='毛色 (沙色到黑色, 0到10)', title='撒哈拉虚拟松鼠') ax.legend(loc='upper right') plt.show()

训练人工数据

在下面的代码中,我们将训练我们的人工数据:

Pythonfrom sklearn.model_selection import train_test_split from sklearn.neighbors import KNeighborsClassifier from sklearn import metrics # 将数据分为训练集和测试集 data_sets = train_test_split(data, labels, train_size=0.8, # 训练集比例 test_size=0.2, # 测试集比例 random_state=42 # 随机种子,保证每次运行结果一致 ) train_data, test_data, train_labels, test_labels = data_sets # 导入模型 (K近邻分类器) # from sklearn.neighbors import KNeighborsClassifier # 已经在上面导入 # 创建分类器实例,设置 K 值为 8 knn = KNeighborsClassifier(n_neighbors=8) # 训练模型 knn.fit(train_data, train_labels) # 在测试集上进行预测 calculated_labels = knn.predict(test_data) print(calculated_labels)输出:

array([2., 0., 1., 1., 0., 1., 2., 2., 2., 2., 0., 1., 0., 0., 1., 0., 1., 2., 0., 0., 1., 2., 1., 2., 2., 1., 2., 0., 0., 2., 0., 2., 2., 0., 0., 2., 0., 0., 0., 1., 0., 1., 1., 2., 0., 2., 1., 2., 1., 0., 2., 1., 1., 0., 1., 2., 1., 0., 0., 2., 1., 0., 1., 1., 0., 0., 0., 0., 0., 0., 0., 1., 1., 0., 1., 1., 1., 0., 1., 2., 1., 2., 0., 2., 1., 1., 0., 2., 2., 2., 0., 1., 1., 1., 2., 2., 0., 2., 2., 2., 2., 0., 0., 1., 1., 1., 2., 1., 1., 1., 0., 2., 1., 2., 0., 0., 1., 0., 1., 0., 2., 2., 2., 1., 1., 1., 0., 2., 1., 2., 2., 1., 2., 0., 2., 0., 0., 1., 0., 2., 2., 0., 0., 1., 2., 1., 2., 0., 0., 2., 2., 0., 0., 1., 2., 1., 2., 0., 0., 1., 2., 1., 0., 2., 2., 0., 2., 0., 0., 2., 1., 0., 0., 0., 0., 2., 2., 1., 0., 2., 2., 1., 2., 0., 1., 1., 1., 0., 1., 0., 1., 1., 2., 0., 2., 2., 1., 1., 1., 2.])Python# 导入 metrics (评估指标) # from sklearn import metrics # 已经在上面导入 # 计算准确率 print("准确率:", metrics.accuracy_score(test_labels, calculated_labels))输出:

准确率: 0.97

We will demonstrate now, how to read in the data again and how to split it into data and labels again:

file_data = np.loadtxt("squirrels.txt")

data = file_data[:,:-1]

labels = file_data[:,2:]

labels = labels.reshape((labels.shape[0]))

We had called the data file squirrels.txt , because we imagined a strange kind of animal living in the

Sahara desert. The x-values stand for the night vision capabilities of the animals and the y-values correspond

to the colour of the fur, going from sandish to black. We have three kinds of squirrels, 0, 1, and 2. (Be aware

that our squirrals are imaginary squirrels and have nothing to do with the real squirrels of the Sahara!)

import matplotlib.pyplot as plt

colours = ('green', 'red', 'blue', 'magenta', 'yellow', 'cyan')

n_classes = 3

fig, ax = plt.subplots()

for n_class in range(0, n_classes):

ax.scatter(data[labels==n_class, 0], data[labels==n_class,

1],

c=colours[n_class], s=10, label=str(n_class))

ax.set(xlabel='Night Vision',

ylabel='Fur color from sandish to black, 0 to 10 ',

title='Sahara Virtual Squirrel')

ax.legend(loc='upper right')

51

Output:<matplotlib.legend.Legend at 0x7f545b4d6340>

We will train our articifical data in the following code:

from sklearn.model_selection import train_test_split

data_sets = train_test_split(data,

labels,

train_size=0.8,

test_size=0.2,

random_state=42 # garantees same output fo

r every run

)

train_data, test_data, train_labels, test_labels = data_sets

# import model

from sklearn.neighbors import KNeighborsClassifier

# create classifier

knn = KNeighborsClassifier(n_neighbors=8)

# train

knn.fit(train_data,train_labels)

# test on test data:

calculated_labels = knn.predict(test_data)

calculated_labels

52

Output:array([2., 0., 1., 1., 0., 1., 2., 2., 2., 2., 0., 1., 0.,

0., 1., 0., 1.,

2., 0., 0., 1., 2., 1., 2., 2., 1., 2., 0., 0., 2.,

0., 2., 2., 0.,

0., 2., 0., 0., 0., 1., 0., 1., 1., 2., 0., 2., 1.,

2., 1., 0., 2.,

1., 1., 0., 1., 2., 1., 0., 0., 2., 1., 0., 1., 1.,

0., 0., 0., 0.,

0., 0., 0., 1., 1., 0., 1., 1., 1., 0., 1., 2., 1.,

2., 0., 2., 1.,

1., 0., 2., 2., 2., 0., 1., 1., 1., 2., 2., 0., 2.,

2., 2., 2., 0.,

0., 1., 1., 1., 2., 1., 1., 1., 0., 2., 1., 2., 0.,

0., 1., 0., 1.,

0., 2., 2., 2., 1., 1., 1., 0., 2., 1., 2., 2., 1.,

2., 0., 2., 0.,

0., 1., 0., 2., 2., 0., 0., 1., 2., 1., 2., 0., 0.,

2., 2., 0., 0.,

1., 2., 1., 2., 0., 0., 1., 2., 1., 0., 2., 2., 0.,

2., 0., 0., 2.,

1., 0., 0., 0., 0., 2., 2., 1., 0., 2., 2., 1., 2.,

0., 1., 1., 1.,

0., 1., 0., 1., 1., 2., 0., 2., 2., 1., 1., 1., 2.])

from sklearn import metrics

print("Accuracy:", metrics.accuracy_score(test_labels, calculate

d_labels))

Accuracy: 0.97 -

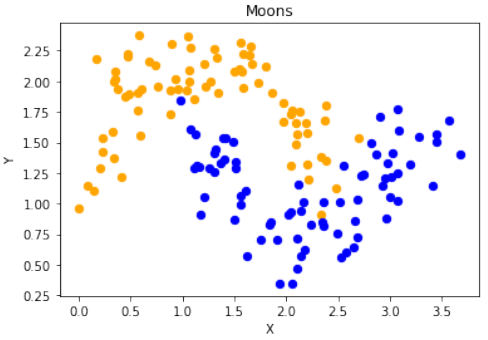

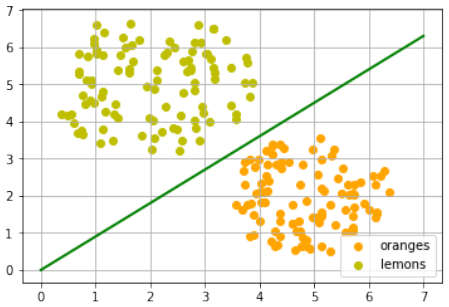

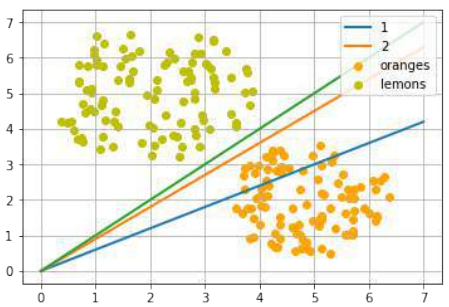

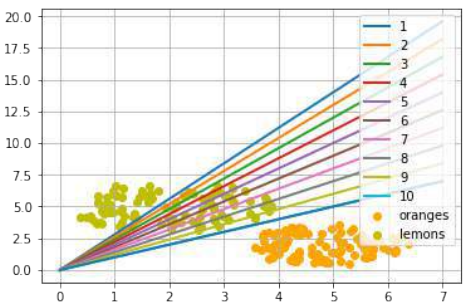

首先,代码使用

sklearn.datasets.make_moons函数生成了一个“月亮”形状的二维数据集:Pythonimport numpy as np import sklearn.datasets as ds data, labels = ds.make_moons(n_samples=150, shuffle=True, noise=0.19, random_state=None)-

n_samples=150:生成150个数据点。 -

shuffle=True:打乱数据。 -

noise=0.19:数据中加入的噪声量。

接下来,对数据进行了平移,使得第一个特征(X轴)的最小值变为0:

Pythondata += np.array([-np.ndarray.min(data[:,0]), -np.ndarray.min(data[:,1])]) np.ndarray.min(data[:,0]), np.ndarray.min(data[:,1]) # 输出: (0.0, 0.34649342272719386)这确保了数据集的某个特定坐标轴的起始点。

然后,使用

matplotlib.pyplot将生成的数据可视化:Pythonimport matplotlib.pyplot as plt fig, ax = plt.subplots() ax.scatter(data[labels==0, 0], data[labels==0, 1], c='orange', s=40, label='oranges') ax.scatter(data[labels==1, 0], data[labels==1, 1], c='blue', s=40, label='blues') ax.set(xlabel='X', ylabel='Y', title='Moons') #ax.legend(loc='upper right');-

将

labels为0的数据点标记为橙色,labels为1的数据点标记为蓝色。 -

设置了X轴、Y轴标签和图表标题。

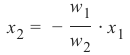

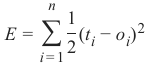

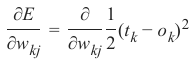

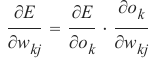

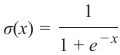

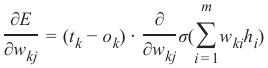

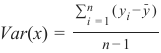

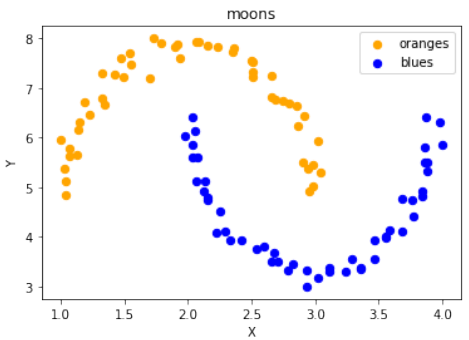

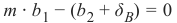

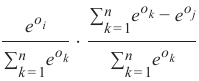

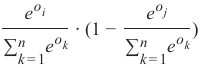

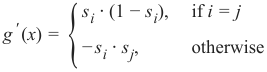

数据缩放

接着,文本介绍了一个将数据从一个范围 [min,max] 缩放到另一个范围 [a,b] 的公式:

这个公式用于将数据点的X和Y坐标转换到新的范围:

Pythonmin_x_new, max_x_new = 33, 88 min_y_new, max_y_new = 12, 20 data, labels = ds.make_moons(n_samples=100, shuffle=True, noise=0.05, random_state=None) min_x, min_y = np.ndarray.min(data[:,0]), np.ndarray.min(data[:,1]) max_x, max_y = np.ndarray.max(data[:,0]), np.ndarray.max(data[:,1]) data -= np.array([min_x, min_y]) # 1. 平移数据,使最小值变为0 data *= np.array([(max_x_new - min_x_new) / (max_x - min_x), (max_y_new - min_y_new) / (max_y - min_y)]) # 2. 缩放数据到新范围的比例 data += np.array([min_x_new, min_y_new]) # 3. 平移数据到新范围的最小值 # 输出转换后的前6个数据点: # Output:array([[71.14479608, 12.28919998], ...])这一系列操作实现了数据的最小-最大归一化。

scale_data函数为了方便地进行数据缩放,定义了一个

scale_data函数:Pythondef scale_data(data, new_limits, inplace=False): if not inplace: data = data.copy() # 如果inplace为False,则复制数据,避免修改原始数据 min_x, min_y = np.ndarray.min(data[:,0]), np.ndarray.min(data[:,1]) max_x, max_y = np.ndarray.max(data[:,0]), np.ndarray.max(data[:,1]) min_x_new, max_x_new = new_limits[0] min_y_new, max_y_new = new_limits[1] data -= np.array([min_x, min_y]) data *= np.array([(max_x_new - min_x_new) / (max_x - min_x), (max_y_new - min_y_new) / (max_y - min_y)]) data += np.array([min_x_new, min_y_new]) if inplace: return None # 如果inplace为True,直接修改传入的数据,返回None else: return data # 否则返回缩放后的新数据该函数接受数据、新的范围

new_limits和一个inplace参数。如果inplace为True,则直接修改原始数据;否则返回一个缩放后的新副本。接着,使用这个函数对“月亮”数据集进行缩放并可视化:

Pythondata, labels = ds.make_moons(n_samples=100, shuffle=True, noise=0.05, random_state=None) scale_data(data, [(1, 4), (3, 8)], inplace=True) # 将数据缩放到 X 轴范围 [1, 4] 和 Y 轴范围 [3, 8] # 输出缩放后的前10个数据点: # Output:array([[1.19312571, 6.70797983], ...]) fig, ax = plt.subplots() ax.scatter(data[labels==0, 0], data[labels==0, 1], c='orange', s=40, label='oranges') ax.scatter(data[labels==1, 0], data[labels==1, 1], c='blue', s=40, label='blues') ax.set(xlabel='X', ylabel='Y', title='moons') ax.legend(loc='upper right');

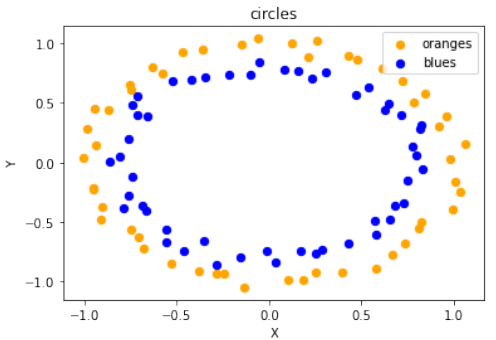

Circles 数据集可视化

代码随后展示了如何生成和可视化“圆形”数据集:

Pythonimport sklearn.datasets as ds data, labels = ds.make_circles(n_samples=100, shuffle=True, noise=0.05, random_state=None) fig, ax = plt.subplots() ax.scatter(data[labels==0, 0], data[labels==0, 1], c='orange', s=40, label='oranges') ax.scatter(data[labels==1, 0], data[labels==1, 1], c='blue', s=40, label='blues') ax.set(xlabel='X', ylabel='Y', title='circles') ax.legend(loc='upper right')

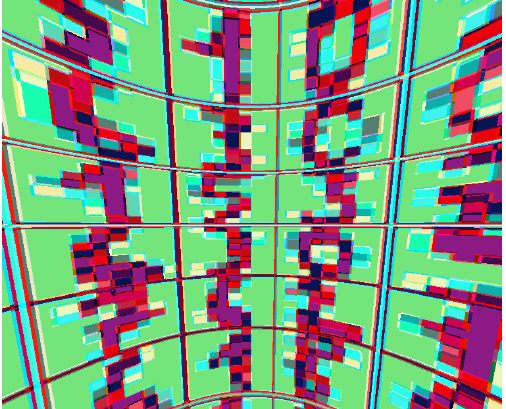

不同类型的分类数据集

接下来,代码演示了

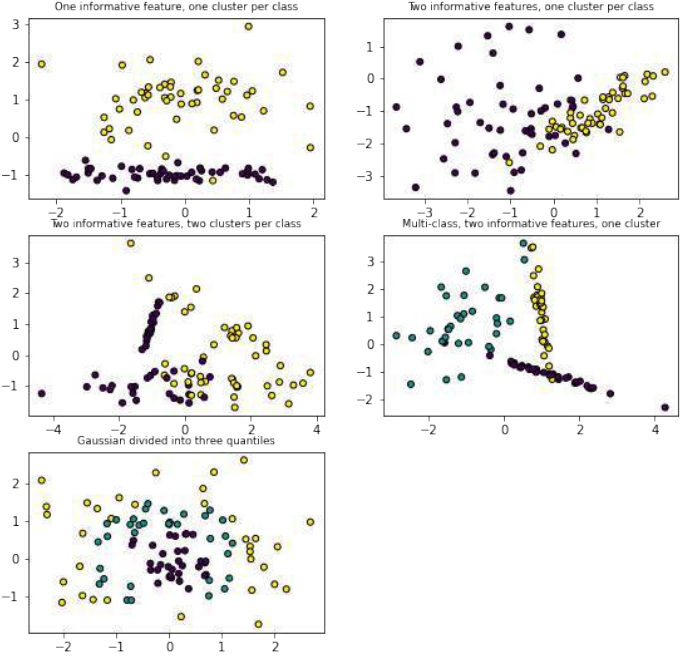

sklearn.datasets中其他用于生成分类数据集的函数,并进行可视化:Pythonimport matplotlib.pyplot as plt from sklearn.datasets import make_classification, make_blobs, make_gaussian_quantiles plt.figure(figsize=(8, 8)) plt.subplots_adjust(bottom=.05, top=.9, left=.05, right=.95) # 1. 两个特征,一个信息性特征,每个类别一个簇 plt.subplot(321) plt.title("One informative feature, one cluster per class", fontsize='small') X1, Y1 = make_classification(n_features=2, n_redundant=0, n_informative=1, n_clusters_per_class=1) plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1, s=25, edgecolor='k') # 2. 两个特征,两个信息性特征,每个类别一个簇 plt.subplot(322) plt.title("Two informative features, one cluster per class", fontsize='small') X1, Y1 = make_classification(n_features=2, n_redundant=0, n_informative=2, n_clusters_per_class=1) plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1, s=25, edgecolor='k') # 3. 两个特征,两个信息性特征,每个类别两个簇 plt.subplot(323) plt.title("Two informative features, two clusters per class", fontsize='small') X2, Y2 = make_classification(n_features=2, n_redundant=0, n_informative=2) plt.scatter(X2[:, 0], X2[:, 1], marker='o', c=Y2, s=25, edgecolor='k') # 4. 多类别,两个信息性特征,一个簇 plt.subplot(324) plt.title("Multi-class, two informative features, one cluster", fontsize='small') X1, Y1 = make_classification(n_features=2, n_redundant=0, n_informative=2, n_clusters_per_class=1, n_classes=3) plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1, s=25, edgecolor='k') # 5. 高斯分布分为三个分位数 plt.subplot(325) plt.title("Gaussian divided into three quantiles", fontsize='small') X1, Y1 = make_gaussian_quantiles(n_features=2, n_classes=3) plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1, s=25, edgecolor='k') plt.show()这部分代码展示了

make_classification和make_gaussian_quantiles如何生成不同复杂度、不同类别数量和不同特征结构的数据集,用于机器学习任务的测试。

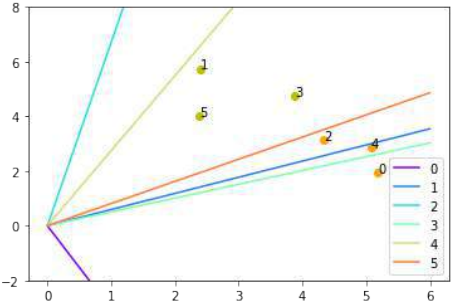

练习

这部分提出了三个练习,要求用户创建满足特定条件的数据集。

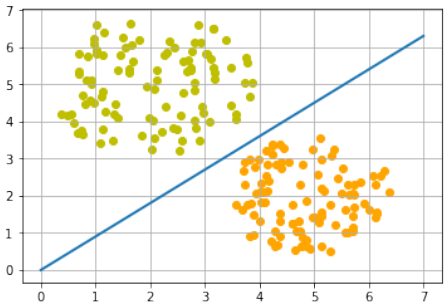

练习 1

创建一个可以被感知机(不带偏置节点)分离的两个测试集。

感知机不带偏置节点意味着决策边界必须通过原点。

练习 2

创建两个不能被通过原点的分割线分离的测试集。

练习 3

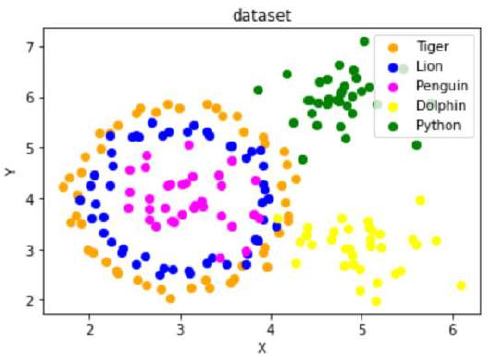

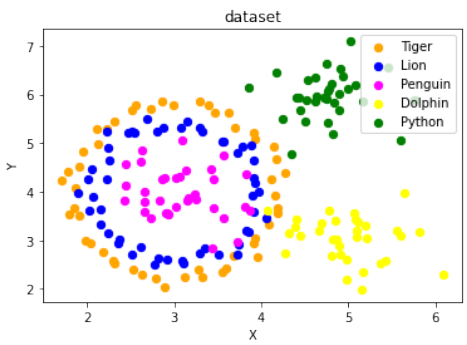

创建一个包含“Tiger”、“Lion”、“Penguin”、“Dolphin”和“Python”五个类别的数据集,其分布类似于给定的图示。

练习解答

这部分提供了上述练习的解决方案。

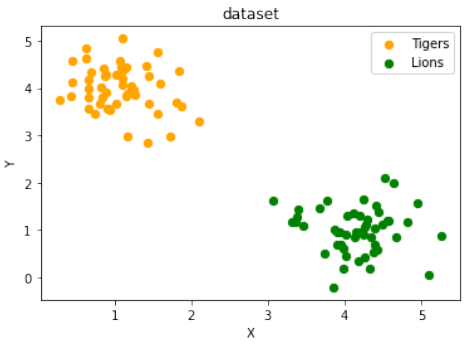

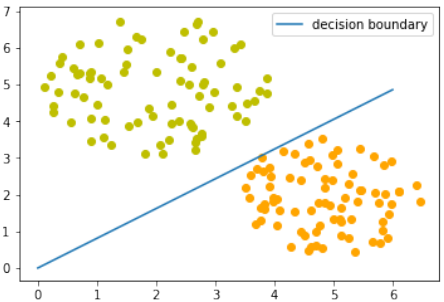

练习 1 解决方案

使用

make_blobs创建两个簇,它们分别位于相对的象限,使得通过原点的直线可以将其分离:Pythonfrom sklearn.datasets import make_blobs data, labels = make_blobs(n_samples=100, cluster_std = 0.5, centers=[[1, 4] ,[4, 1]], random_state=1) # 簇中心设置为 [1, 4] 和 [4, 1],这样一条穿过原点的线可以分隔它们。 fig, ax = plt.subplots() colours = ["orange", "green"] label_name = ["Tigers", "Lions"] for label in range(0, 2): ax.scatter(data[labels==label, 0], data[labels==label, 1], c=colours[label], s=40, label=label_name[label]) ax.set(xlabel='X', ylabel='Y', title='dataset') ax.legend(loc='upper right')

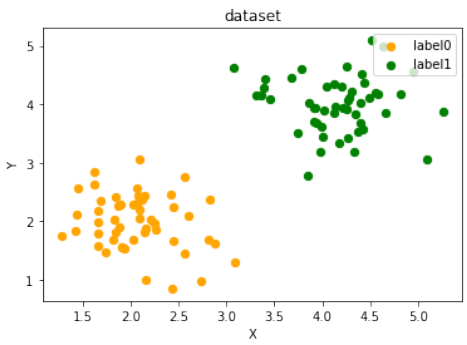

练习 2 解决方案

创建两个不能被通过原点的分割线分离的簇。例如,将两个簇都放置在同一个象限,或者一个簇围绕原点,另一个在外部。这里的解决方案是将两个簇放置在第一象限:

Pythonfrom sklearn.datasets import make_blobs data, labels = make_blobs(n_samples=100, cluster_std = 0.5, centers=[[2, 2] ,[4, 4]], random_state=1) # 簇中心设置为 [2, 2] 和 [4, 4],都在第一象限,无法被通过原点的线分离。 fig, ax = plt.subplots() colours = ["orange", "green"] label_name = ["label0", "label1"] for label in range(0, 2): ax.scatter(data[labels==label, 0], data[labels==label, 1], c=colours[label], s=40, label=label_name[label]) ax.set(xlabel='X', ylabel='Y', title='dataset') ax.legend(loc='upper right')

练习 3 解决方案

结合

make_circles和make_blobs来创建五个类别的数据集,模拟复杂的分布:Pythonimport sklearn.datasets as ds from sklearn.datasets import make_blobs import numpy as np # 生成第一个圆形数据集 (作为两个类别) data, labels = ds.make_circles(n_samples=100, shuffle=True, noise=0.05, random_state=42) # 生成第二个 blob 数据集 (作为三个类别) centers = [[3, 4], [5, 3], [4.5, 6]] data2, labels2 = make_blobs(n_samples=100, cluster_std = 0.5, centers=centers, random_state=1) # 调整 labels2 的标签,使其与 labels 不重叠,从2开始 for i in range(len(centers)-1, -1, -1): labels2[labels2==0+i] = i+2 # print(labels2) # 输出调整后的 labels2 数组 # 合并两个数据集的标签 labels = np.concatenate([labels, labels2]) # 对第一个圆形数据集进行缩放和平移,使其与 blob 数据集结合时位置合适 data = data * [1.2, 1.8] + [3, 4] # 合并两个数据集的数据 data = np.concatenate([data, data2], axis=0) fig, ax = plt.subplots() colours = ["orange", "blue", "magenta", "yellow", "green"] label_name = ["Tiger", "Lion", "Penguin", "Dolphin", "Python"] for label in range(0, len(centers)+2): # 遍历所有5个类别 ax.scatter(data[labels==label, 0], data[labels==label, 1], c=colours[label], s=40, label=label_name[label]) ax.set(xlabel='X', ylabel='Y', title='dataset') ax.legend(loc='upper right')这个解决方案通过

make_circles创建了内外的两个圈,然后通过make_blobs创建了三个离散的簇,并将它们合并在一起,形成了五个不同类别的数据集。

import numpy as np

import sklearn.datasets as ds

data, labels = ds.make_moons(n_samples=150,

shuffle=True,

noise=0.19,

random_state=None)

data += np.array(-np.ndarray.min(data[:,0]),

-np.ndarray.min(data[:,1]))

np.ndarray.min(data[:,0]), np.ndarray.min(data[:,1])

Output0.0, 0.34649342272719386)

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

ax.scatter(data[labels==0, 0], data[labels==0, 1],

c='orange', s=40, label='oranges')

ax.scatter(data[labels==1, 0], data[labels==1, 1],

c='blue', s=40, label='blues')

ax.set(xlabel='X',

ylabel='Y',

title='Moons')

#ax.legend(loc='upper right');

54

Output:[Text(0.5, 0, 'X'), Text(0, 0.5, 'Y'), Text(0.5, 1.0, 'Moon

s')]

We want to scale values that are in a range [min, max] in a range [a, b] .

(b − a) ⋅ (x − min)

f(x) =

+ a

max − min

We now use this formula to transform both the X and Y coordinates of data into other ranges:

min_x_new, max_x_new = 33, 88

min_y_new, max_y_new = 12, 20

data, labels = ds.make_moons(n_samples=100,

shuffle=True,

noise=0.05,

random_state=None)

min_x, min_y = np.ndarray.min(data[:,0]), np.ndarray.min(dat

a[:,1])

max_x, max_y = np.ndarray.max(data[:,0]), np.ndarray.max(dat

a[:,1])

#data -= np.array([min_x, 0])

#data *= np.array([(max_x_new - min_x_new) / (max_x - min_x), 1])

#data += np.array([min_x_new, 0])

#data -= np.array([0, min_y])

#data *= np.array([1, (max_y_new - min_y_new) / (max_y - min_y)])

55

#data += np.array([0, min_y_new])

data -= np.array([min_x, min_y])

data *= np.array([(max_x_new - min_x_new) / (max_x - min_x), (ma

x_y_new - min_y_new) / (max_y - min_y)])

data += np.array([min_x_new, min_y_new])

#np.ndarray.min(data[:,0]), np.ndarray.max(data[:,0])

data[:6]

Output:array([[71.14479608, 12.28919998],

[62.16584307, 18.75442981],

[61.02613211, 12.80794358],

[64.30752046, 12.32563839],

[81.41469127, 13.64613406],

[82.03929032, 13.63156545]])

def scale_data(data, new_limits, inplace=False ):

if not inplace:

data = data.copy()

min_x, min_y = np.ndarray.min(data[:,0]), np.ndarray.min(dat

a[:,1])

max_x, max_y = np.ndarray.max(data[:,0]), np.ndarray.max(dat

a[:,1])

min_x_new, max_x_new = new_limits[0]

min_y_new, max_y_new = new_limits[1]

data -= np.array([min_x, min_y])

data *= np.array([(max_x_new - min_x_new) / (max_x - min_x),

(max_y_new - min_y_new) / (max_y - min_y)])

data += np.array([min_x_new, min_y_new])

if inplace:

return None

else:

return data

data, labels = ds.make_moons(n_samples=100,

shuffle=True,

noise=0.05,

random_state=None)

scale_data(data, [(1, 4), (3, 8)], inplace=True)

56

data[:10]

Output:array([[1.19312571, 6.70797983],

[2.74306138, 6.74830445],

[1.15255757, 6.31893824],

[1.03927303, 4.83714182],

[2.91313352, 6.44139267],

[2.13227292, 5.120716 ],

[2.65590196, 3.49417953],

[2.98349928, 5.02232383],

[3.35660593, 3.34679462],

[2.15813861, 4.8036458 ]])

fig, ax = plt.subplots()

ax.scatter(data[labels==0, 0], data[labels==0, 1],

c='orange', s=40, label='oranges')

ax.scatter(data[labels==1, 0], data[labels==1, 1],

c='blue', s=40, label='blues')

ax.set(xlabel='X',

ylabel='Y',

title='moons')

ax.legend(loc='upper right');

import sklearn.datasets as ds

data, labels = ds.make_circles(n_samples=100,

shuffle=True,

57

fig, ax = plt.subplots()

noise=0.05,

random_state=None)

ax.scatter(data[labels==0, 0], data[labels==0, 1],

c='orange', s=40, label='oranges')

ax.scatter(data[labels==1, 0], data[labels==1, 1],

c='blue', s=40, label='blues')

ax.set(xlabel='X',

ylabel='Y',

title='circles')

ax.legend(loc='upper right')

Output:<matplotlib.legend.Legend at 0x7f54588c2e20>

print(__doc__)

import matplotlib.pyplot as plt

from sklearn.datasets import make_classification

from sklearn.datasets import make_blobs

from sklearn.datasets import make_gaussian_quantiles

plt.figure(figsize=(8, 8))

plt.subplots_adjust(bottom=.05, top=.9, left=.05, right=.95)

58

plt.subplot(321)

plt.title("One informative feature, one cluster per class", fontsi

ze='small')

X1, Y1 = make_classification(n_features=2, n_redundant=0, n_inform

ative=1,

n_clusters_per_class=1)

plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1,

s=25, edgecolor='k')

plt.subplot(322)

plt.title("Two informative features, one cluster per class", fonts

ize='small')

X1, Y1 = make_classification(n_features=2, n_redundant=0, n_inform

ative=2,

n_clusters_per_class=1)

plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1,

s=25, edgecolor='k')

plt.subplot(323)

plt.title("Two informative features, two clusters per class",

fontsize='small')

X2, Y2 = make_classification(n_features=2,

n_redundant=0,

n_informative=2)

plt.scatter(X2[:, 0], X2[:, 1], marker='o', c=Y2,

s=25, edgecolor='k')

plt.subplot(324)

plt.title("Multi-class, two informative features, one cluster",

fontsize='small')

X1, Y1 = make_classification(n_features=2,

n_redundant=0,

n_informative=2,

n_clusters_per_class=1,

n_classes=3)

plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1,

s=25, edgecolor='k')

plt.subplot(325)

plt.title("Gaussian divided into three quantiles", fontsize='smal

l')

X1, Y1 = make_gaussian_quantiles(n_features=2, n_classes=3)

plt.scatter(X1[:, 0], X1[:, 1], marker='o', c=Y1,

s=25, edgecolor='k')

59

plt.show()

Automatically created module for IPython interactive environment

EXERCISES

EXERCISE 1

Create two testsets which are separable with a perceptron without a bias node.

EXERCISE 2

Create two testsets which are not separable with a dividing line going through the origin.

60

EXERCISE 3

Create a dataset with five classes "Tiger", "Lion", "Penguin", "Dolphin", and "Python". The sets should look

similar to the following diagram:

SOLUTIONS

SOLUTION TO EXERCISE 1

data, labels = make_blobs(n_samples=100,

cluster_std = 0.5,

centers=[[1, 4] ,[4, 1]],

random_state=1)

fig, ax = plt.subplots()

colours = ["orange", "green"]

label_name = ["Tigers", "Lions"]

for label in range(0, 2):

ax.scatter(data[labels==label, 0], data[labels==label, 1],

c=colours[label], s=40, label=label_name[label])

ax.set(xlabel='X',

ylabel='Y',

title='dataset')

61

ax.legend(loc='upper right')

Output:<matplotlib.legend.Legend at 0x7f788afb2c40>

SOLUTION TO EXERCISE 2

data, labels = make_blobs(n_samples=100,

cluster_std = 0.5,

centers=[[2, 2] ,[4, 4]],

random_state=1)

fig, ax = plt.subplots()

colours = ["orange", "green"]

label_name = ["label0", "label1"]

for label in range(0, 2):

ax.scatter(data[labels==label, 0], data[labels==label, 1],

c=colours[label], s=40, label=label_name[label])

ax.set(xlabel='X',

ylabel='Y',

title='dataset')

ax.legend(loc='upper right')

62

Output:<matplotlib.legend.Legend at 0x7f788af8eac0>

SOLUTION TO EXERCISE 3

import sklearn.datasets as ds

data, labels = ds.make_circles(n_samples=100,

shuffle=True,

noise=0.05,

random_state=42)

centers = [[3, 4], [5, 3], [4.5, 6]]

data2, labels2 = make_blobs(n_samples=100,

cluster_std = 0.5,

centers=centers,

random_state=1)

for i in range(len(centers)-1, -1, -1):

labels2[labels2==0+i] = i+2

print(labels2)

labels = np.concatenate([labels, labels2])

data = data * [1.2, 1.8] + [3, 4]

data = np.concatenate([data, data2], axis=0)

63

[2 4 4 3 4 4 3 3 2 4 4 2 4 4 3 4 2 4 4 4 4 2 2 4 4 3 2 2 3 2 2 3

2 3 3 3 3

3 4 3 3 2 3 3 3 2 2 2 2 3 4 4 4 2 4 3 3 2 2 3 4 4 3 3 4 2 4 2 4

3 3 4 2 2

3 4 4 2 3 2 3 3 4 2 2 2 2 3 2 4 2 2 3 3 4 4 2 2 4 3]

fig, ax = plt.subplots()

colours = ["orange", "blue", "magenta", "yellow", "green"]

label_name = ["Tiger", "Lion", "Penguin", "Dolphin", "Python"]

for label in range(0, len(centers)+2):

ax.scatter(data[labels==label, 0], data[labels==label, 1],

c=colours[label], s=40, label=label_name[label])

ax.set(xlabel='X',

ylabel='Y',

title='dataset')

ax.legend(loc='upper right')

Output:<matplotlib.legend.Legend at 0x7f788b1d42b0> -

-

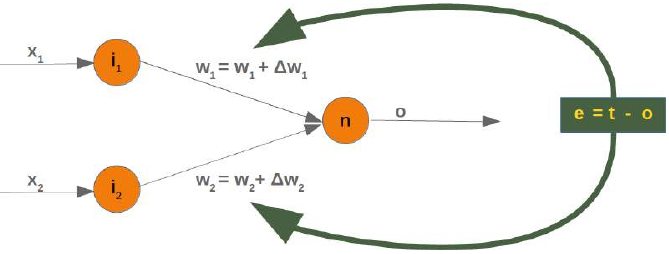

“告诉我你的朋友是谁,我就能告诉你你是谁?”

K 近邻分类器的概念再简单不过了。

K 近邻分类器的概念再简单不过了。这是一句古老的谚语,在许多语言和文化中都能找到。圣经中也以其他方式提到了它:“与智慧人同行,必得智慧;与愚昧人作伴,必受亏损。”(箴言 13:20)

这意味着 K 近邻分类器的概念是我们日常生活和判断的一部分:想象你遇到一群人,他们都非常年轻、时尚且热爱运动。他们谈论着不在场的他们的朋友本。那么,你对本的印象是什么?没错,你也会把他想象成一个年轻、时尚且热爱运动的人。

如果你得知本住在一个人们投票倾向保守、平均年收入超过 20 万美元的社区?而且他的两个邻居甚至每年挣得超过 30 万美元?你对本会怎么想?很可能你不会认为他是个失败者,甚至可能会怀疑他也是个保守派?

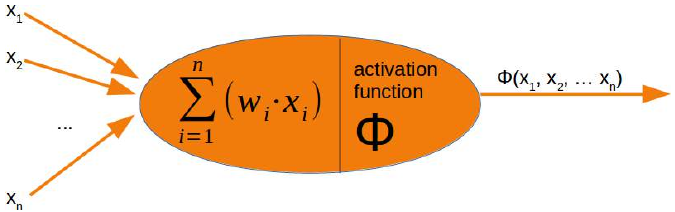

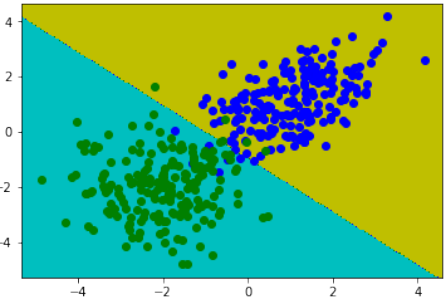

近邻分类的原理在于找到预定义数量的(即“k”个)训练样本,这些样本在距离上与待分类的新样本最接近。新样本的标签将由这些近邻决定。K 近邻分类器有一个用户定义的固定常数,用于确定需要找到的近邻数量。还有基于半径的近邻学习算法,它们根据点的局部密度,在固定半径内包含所有样本,从而具有可变数量的近邻。距离通常可以是任何度量:标准欧几里得距离是最常见的选择。基于近邻的方法被称为非泛化机器学习方法,因为它们只是简单地“记住”了所有训练数据。分类可以通过未知样本的最近邻的多数投票来计算。

K-NN 算法是所有机器学习算法中最简单的之一,但尽管它很简单,它在大量的分类和回归问题中都取得了相当大的成功,例如字符识别或图像分析。

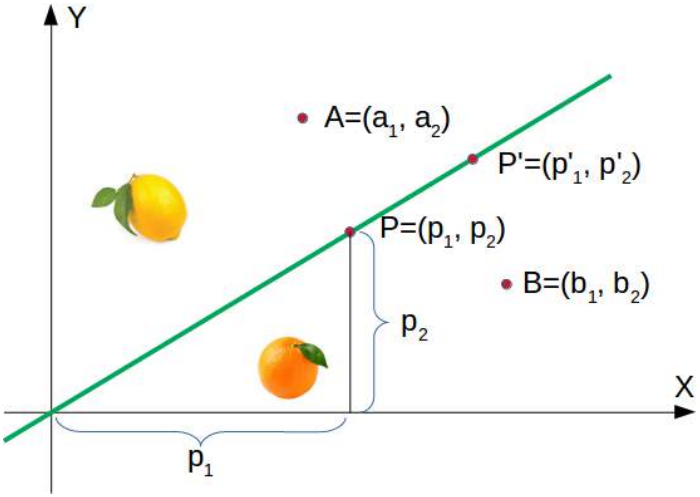

现在让我们稍微深入一些数学层面:

正如数据准备一章中所解释的,我们需要带标签的学习数据和测试数据。然而,与其他分类器不同,纯粹的近邻分类器不做任何学习,而是将所谓的**学习集(LS)**作为分类器的基本组成部分。K 近邻分类器(kNN)直接作用于学习到的样本,而不是像其他分类方法那样创建规则。

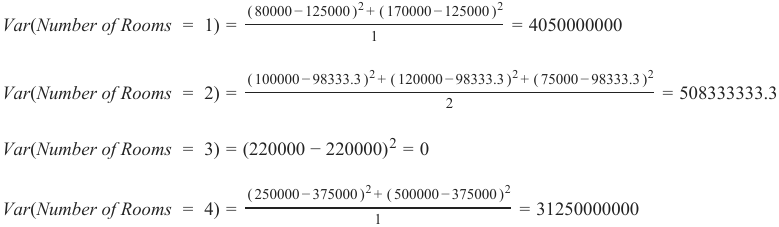

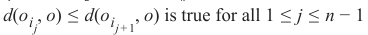

近邻算法:

给定一组类别 ,也称为类,例如 {"男", "女"}。还有一个由带标签的实例组成的学习集 LS:

由于带标签的项少于类别数没有意义,我们可以假定n > m ,在大多数情况下甚至 n ⋙ m (n 远大于 m)。

分类的任务在于将一个类别或类 c 分配给任意实例 o。

为此,我们必须区分两种情况:

-

情况 1:

实例 o 是 LS 的一个元素,即存在一个元组 (o, c) ∈ LS

在这种情况下,我们将使用类别 c 作为分类结果。

- 情况 2:

我们现在假设 o 不在 LS 中,或者更确切地说:

∀c ∈ C, (o, c) ∉ LS

将 o 与 LS 中的所有实例进行比较。比较时使用距离度量 d。

我们确定 o 的 k 个最近邻,即距离最小的项。

k 是一个用户定义的常数,一个通常较小的正整数。

数字 k 通常选择为 LS 的平方根,即训练数据集中点的总数。

为了确定 k 个最近邻,我们按以下方式重新排序 LS:

这样对于所有

都成立。

都成立。k 个最近邻的集合 N_k 由此排序的前 k 个元素组成,即:

在这个最近邻集合 N_k 中最常见的类别将被分配给实例 o。如果没有唯一的最常见类别,我们则任意选择其中一个。

没有通用的方法来定义“k”的最佳值。这个值取决于数据。通常我们可以说,增加“k”会减少噪声,但另一方面会使边界不那么清晰。

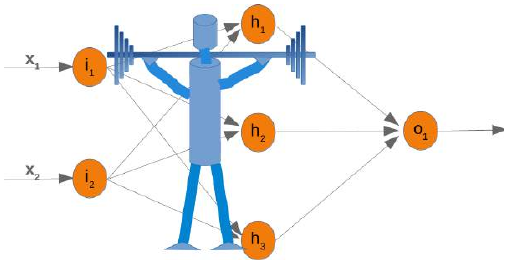

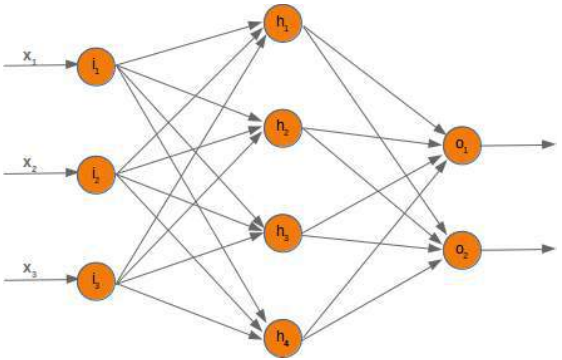

K 近邻分类器的算法是所有机器学习算法中最简单的之一。K-NN 是一种基于实例的学习,或者说是惰性学习。在机器学习中,惰性学习被理解为一种学习方法,其中训练数据的泛化被推迟到系统发出查询时。另一方面,我们有急切学习,其中系统通常在接收查询之前泛化训练数据。换句话说:函数只在局部近似,所有的计算都在实际执行分类时进行。

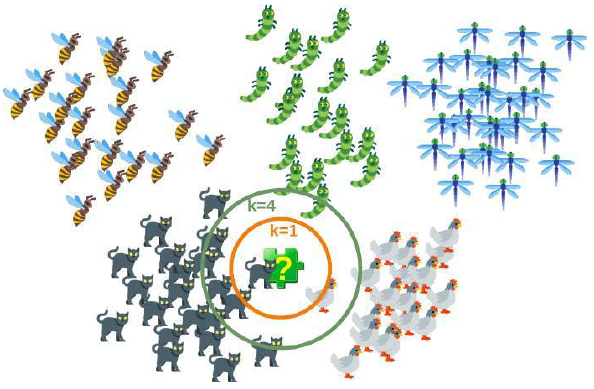

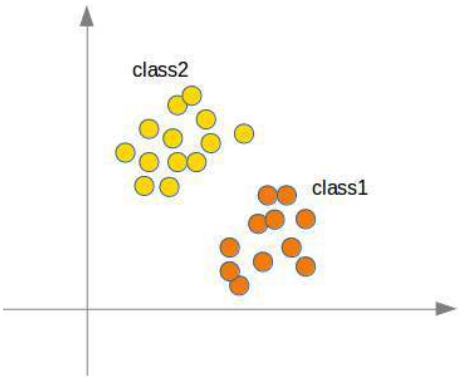

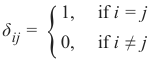

下图以简单的方式展示了近邻分类器的工作原理。拼图块是未知的。为了找出它可能是什么动物,我们必须找到它的邻居。如果 k=1,唯一的邻居是猫,在这种情况下,我们假设这个拼图块也应该是一只猫。如果 k=4,最近邻包含一只鸡和三只猫。在这种情况下,同样可以放心地假设我们所讨论的对象应该是一只猫。

从零开始实现 K 近邻分类器

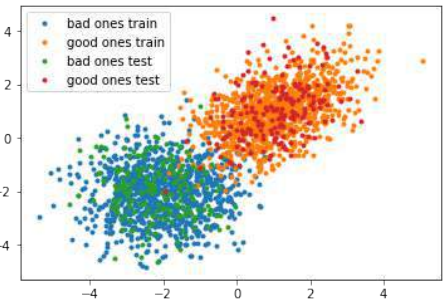

准备数据集

在我们实际开始编写近邻分类器之前,我们需要考虑数据,即学习集和测试集。我们将使用

sklearn模块的数据集提供的“iris”数据集。该数据集包含来自三种鸢尾花物种各 50 个样本:

-

鸢尾花(Iris setosa)

-

维吉尼亚鸢尾(Iris virginica)

-

变色鸢尾(Iris versicolor)

每个样本测量了四个特征:萼片和花瓣的长度和宽度,单位为厘米。

Pythonimport numpy as np from sklearn import datasets iris = datasets.load_iris()Pythondata = iris.data labels = iris.target for i in [0, 79, 99, 101]: print(f"index: {i:3}, features: {data[i]}, label: {labels[i]}")index: 0, features: [5.1 3.5 1.4 0.2], label: 0 index: 79, features: [5.7 2.6 3.5 1. ], label: 1 index: 99, features: [5.7 2.8 4.1 1.3], label: 1 index: 101, features: [5.8 2.7 5.1 1.9], label: 2我们从上述集合中创建一个学习集。我们使用

np.random.permutation随机分割数据。Python# 播种只对网站需要 # 以便值始终相等: np.random.seed(42) indices = np.random.permutation(len(data)) n_training_samples = 12 learn_data = data[indices[:-n_training_samples]] learn_labels = labels[indices[:-n_training_samples]] test_data = data[indices[-n_training_samples:]] test_labels = labels[indices[-n_training_samples:]] print("The first samples of our learn set:") print(f"{'index':7s}{'data':20s}{'label':3s}") for i in range(5): print(f"{i:4d} {learn_data[i]} {learn_labels[i]:3}") print("The first samples of our test set:") print(f"{'index':7s}{'data':20s}{'label':3s}") for i in range(5): print(f"{i:4d} {test_data[i]} {test_labels[i]:3}") # 修正:这里应该是test_data和test_labelsThe first samples of our learn set: index data label 0 [6.1 2.8 4.7 1.2] 1 1 [5.7 3.8 1.7 0.3] 0 2 [7.7 2.6 6.9 2.3] 2 3 [6. 2.9 4.5 1.5] 1 4 [6.8 2.8 4.8 1.4] 1 The first samples of our test set: index data label 0 [5.7 2.8 4.1 1.3] 1 1 [6.5 3. 5.5 1.8] 2 2 [6.3 2.3 4.4 1.3] 1 3 [6.4 2.9 4.3 1.3] 1 4 [5.6 2.8 4.9 2. ] 2以下代码仅用于可视化我们的学习集数据。我们的数据每项鸢尾花包含四个值,因此我们将通过将第三个和第四个值相加来将数据减少到三个值。这样,我们就能在 3 维空间中描绘数据:

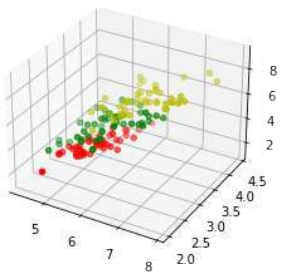

Python#%matplotlib widget import matplotlib.pyplot as plt from mpl_toolkits.mplot3d import Axes3D X = [] for iclass in range(3): X.append([[], [], []]) for i in range(len(learn_data)): if learn_labels[i] == iclass: X[iclass][0].append(learn_data[i][0]) X[iclass][1].append(learn_data[i][1]) X[iclass][2].append(sum(learn_data[i][2:])) colours = ("r", "g", "y") # 修正:原始代码中此处使用了colours = ("r", "b"),但实际上有三类,需要三个颜色 fig = plt.figure() ax = fig.add_subplot(111, projection='3d') for iclass in range(3): ax.scatter(X[iclass][0], X[iclass][1], X[iclass][2], c=colours[iclass]) plt.show()

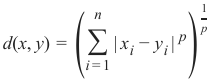

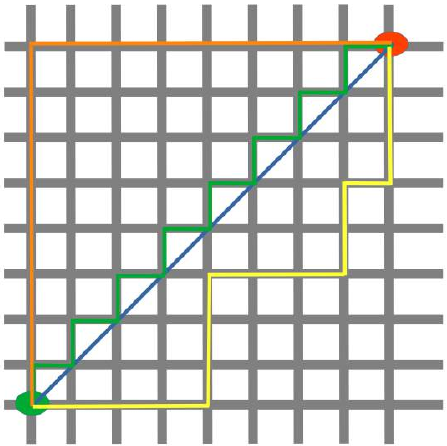

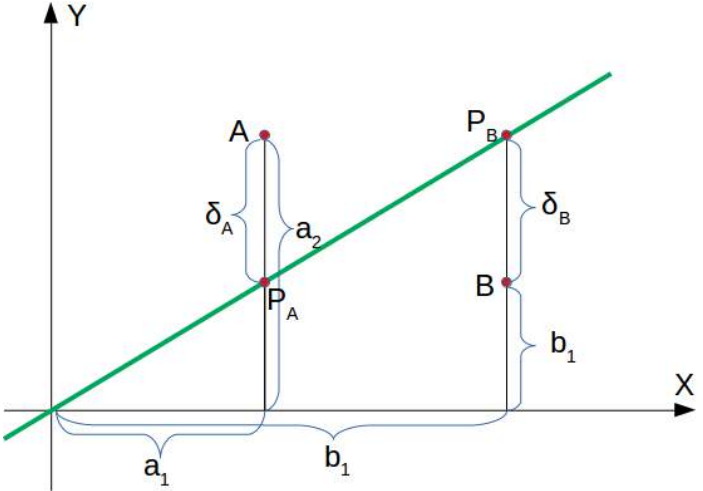

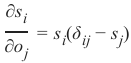

距离度量

我们已经详细提到,我们计算样本点与待分类对象之间的距离。为了计算这些距离,我们需要一个距离函数。

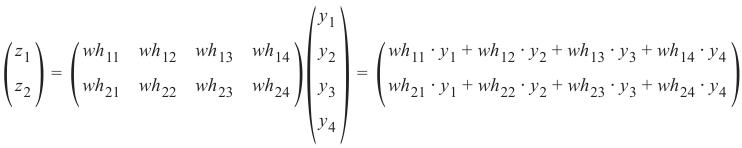

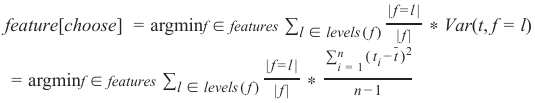

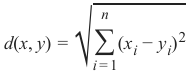

在 n 维向量空间中,通常使用以下三种距离度量之一:

-

欧几里得距离 (Euclidean Distance)

欧几里得距离衡量平面或 3 维空间中两点 x 和 y 之间连接这两个点的线段的长度。它可以根据点的笛卡尔坐标使用勾股定理计算,因此偶尔也称为勾股距离。通用公式是:

-

曼哈顿距离 (Manhattan Distance)

它定义为 x 和 y 坐标之间差值的绝对值之和:

-

闵可夫斯基距离 (Minkowski Distance)

闵可夫斯基距离将欧几里得距离和曼哈顿距离概括为一种距离度量。如果我们将以下公式中的参数 p 设置为 1,我们得到曼哈顿距离;使用值 2 则得到欧几里得距离:

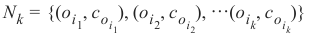

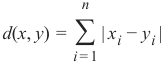

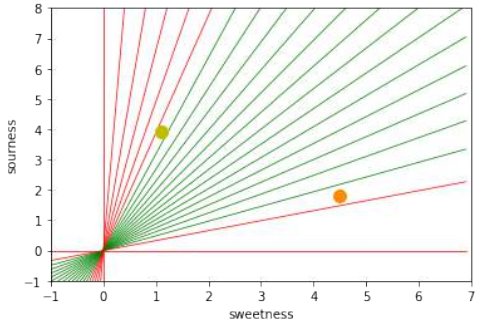

下图可视化了欧几里得距离和曼哈顿距离:

蓝线表示绿点和红点之间的欧几里得距离。另外,你也可以沿着橙色、绿色或黄色的线从绿点移动到红点。这些线对应于曼哈顿距离。它们的长度是相等的。

确定近邻

为了确定两个实例之间的相似性,我们将使用欧几里得距离。

我们可以使用

np.linalg模块的norm函数计算欧几里得距离:Pythondef distance(instance1, instance2): """ 计算两个实例之间的欧几里得距离 """ return np.linalg.norm(np.subtract(instance1, instance2)) print(distance([3, 5], [1, 1])) print(distance(learn_data[3], learn_data[44]))4.47213595499958 3.4190641994557516get_neighbors函数返回一个包含 k 个邻居的列表,这些邻居与实例test_instance最接近:Pythondef get_neighbors(training_set, labels, test_instance, k, distance): """ get_neighbors 计算实例 'test_instance' 的 k 个最近邻的列表。 函数返回一个包含 k 个 3 元组的列表。 每个 3 元组由 (index, dist, label) 组成 其中 index 是 training_set 中的索引, dist 是 test_instance 和 training_set[index] 实例之间的距离 distance 是对用于计算距离的函数的引用 """ distances = [] for index in range(len(training_set)): dist = distance(test_instance, training_set[index]) distances.append((training_set[index], dist, labels[index])) distances.sort(key=lambda x: x[1]) # 按距离排序 neighbors = distances[:k] # 取前 k 个 return neighbors我们将使用 Iris 样本测试该函数:

Pythonfor i in range(5): neighbors = get_neighbors(learn_data, learn_labels, test_data[i], 3, distance=distance) print("Index: ",i,'\n', "Testset Data: ",test_data[i],'\n', "Testset Label: ",test_labels[i],'\n', "Neighbors: ",neighbors,'\n')Index: 0 Testset Data: [5.7 2.8 4.1 1.3] Testset Label: 1 Neighbors: [(array([5.7, 2.9, 4.2, 1.3]), 0.14142135623730995, 1), (array([5.6, 2.7, 4.2, 1.3]), 0.17320508075688815, 1), (array([5.6, 3., 4.1, 1.3]), 0.22360679774997935, 1)] Index: 1 Testset Data: [6.5 3. 5.5 1.8] Testset Label: 2 Neighbors: [(array([6.4, 3.1, 5.5, 1.8]), 0.1414213562373093, 2), (array([6.3, 2.9, 5.6, 1.8]), 0.24494897427831783, 2), (array([6.5, 3., 5.2, 2.]), 0.3605551275463988, 2)] Index: 2 Testset Data: [6.3 2.3 4.4 1.3] Testset Label: 1 Neighbors: [(array([6.2, 2.2, 4.5, 1.5]), 0.2645751311064586, 1), (array([6.3, 2.5, 4.9, 1.5]), 0.574456264653803, 1), (array([6., 2.2, 4., 1.]), 0.5916079783099617, 1)] Index: 3 Testset Data: [6.4 2.9 4.3 1.3] Testset Label: 1 Neighbors: [(array([6.2, 2.9, 4.3, 1.3]), 0.20000000000000018, 1), (array([6.6, 3., 4.4, 1.4]), 0.2645751311064587, 1), (array([6.6, 2.9, 4.6, 1.3]), 0.3605551275463984, 1)] Index: 4 Testset Data: [5.6 2.8 4.9 2. ] Testset Label: 2 Neighbors: [(array([5.8, 2.7, 5.1, 1.9]), 0.3162277660168375, 2), (array([5.8, 2.7, 5.1, 1.9]), 0.3162277660168375, 2), (array([5.7, 2.5, 5., 2.]), 0.33166247903553986, 2)]

投票以获得单一结果

我们现在将编写一个

vote函数。这个函数使用collections模块中的Counter类来计算实例列表(当然是邻居)中各个类别的数量。vote函数返回最常见的类别:Pythonfrom collections import Counter def vote(neighbors): class_counter = Counter() for neighbor in neighbors: class_counter[neighbor[2]] += 1 return class_counter.most_common(1)[0][0]我们将在训练样本上测试

vote:Pythonfor i in range(n_training_samples): neighbors = get_neighbors(learn_data, learn_labels, test_data[i], 3, distance=distance) print("index: ", i, ", result of vote: ", vote(neighbors), ", label: ", test_labels[i], ", data: ", test_data[i])index: 0 , result of vote: 1 , label: 1 , data: [5.7 2.8 4.1 1.3] index: 1 , result of vote: 2 , label: 2 , data: [6.5 3. 5.5 1.8] index: 2 , result of vote: 1 , label: 1 , data: [6.3 2.3 4.4 1.3] index: 3 , result of vote: 1 , label: 1 , data: [6.4 2.9 4.3 1.3] index: 4 , result of vote: 2 , label: 2 , data: [5.6 2.8 4.9 2. ] index: 5 , result of vote: 2 , label: 2 , data: [5.9 3. 5.1 1.8] index: 6 , result of vote: 0 , label: 0 , data: [5.4 3.4 1.7 0.2] index: 7 , result of vote: 1 , label: 1 , data: [6.1 2.8 4. 1.3] index: 8 , result of vote: 1 , label: 2 , data: [4.9 2.5 4.5 1.7] index: 9 , result of vote: 0 , label: 0 , data: [5.8 4. 1.2 0.2] index: 10 , result of vote: 1 , label: 1 , data: [5.8 2.6 4. 1.2] index: 11 , result of vote: 2 , label: 2 , data: [7.1 3. 5.9 2.1]我们可以看到,除了索引为 8 的项外,预测结果与带标签的结果一致。

vote_prob函数类似于vote,但它返回类名和该类的概率:Pythondef vote_prob(neighbors): class_counter = Counter() for neighbor in neighbors: class_counter[neighbor[2]] += 1 labels, votes = zip(*class_counter.most_common()) winner = class_counter.most_common(1)[0][0] votes4winner = class_counter.most_common(1)[0][1] return winner, votes4winner/sum(votes)Pythonfor i in range(n_training_samples): neighbors = get_neighbors(learn_data, learn_labels, test_data[i], 5, # 使用 k=5 distance=distance) print("index: ", i, ", vote_prob: ", vote_prob(neighbors), ", label: ", test_labels[i], ", data: ", test_data[i])index: 0 , vote_prob: (1, 1.0) , label: 1 , data: [5.7 2.8 4.1 1.3] index: 1 , vote_prob: (2, 1.0) , label: 2 , data: [6.5 3. 5.5 1.8] index: 2 , vote_prob: (1, 1.0) , label: 1 , data: [6.3 2.3 4.4 1.3] index: 3 , vote_prob: (1, 1.0) , label: 1 , data: [6.4 2.9 4.3 1.3] index: 4 , vote_prob: (2, 1.0) , label: 2 , data: [5.6 2.8 4.9 2. ] index: 5 , vote_prob: (2, 0.8) , label: 2 , data: [5.9 3. 5.1 1.8] index: 6 , vote_prob: (0, 1.0) , label: 0 , data: [5.4 3.4 1.7 0.2] index: 7 , vote_prob: (1, 1.0) , label: 1 , data: [6.1 2.8 4. 1.3] index: 8 , vote_prob: (1, 1.0) , label: 2 , data: [4.9 2.5 4.5 1.7] index: 9 , vote_prob: (0, 1.0) , label: 0 , data: [5.8 4. 1.2 0.2] index: 10 , vote_prob: (1, 1.0) , label: 1 , data: [5.8 2.6 4. 1.2] index: 11 , vote_prob: (2, 1.0) , label: 2 , data: [7.1 3. 5.9 2.1]

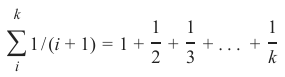

加权近邻分类器

我们之前只考虑了未知对象“UO”附近的 k 个项,并进行了多数投票。在前面的例子中,多数投票被证明是相当有效的,但这没有考虑到以下推理:邻居离得越远,它就越“偏离”“真实”结果。换句话说,我们可以比远处的邻居更信任最近的邻居。假设我们有一个未知项 UO 的 11 个邻居。最接近的五个邻居属于 A 类,而所有其他六个较远的邻居属于 B 类。应该将哪个类分配给 UO?以前的方法会说是 B,因为我们有 6 比 5 的投票倾向 B。另一方面,最接近的 5 个都是 A,这应该更重要。

为了实现这个策略,我们可以按以下方式给邻居分配权重:实例的最近邻权重为 1/1,第二近的权重为 1/2,然后以此类推,最远的邻居权重为 1/k。

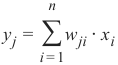

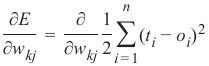

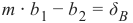

这意味着我们使用调和级数作为权重:

我们在以下函数中实现这一点:

Pythondef vote_harmonic_weights(neighbors, all_results=True): class_counter = Counter() number_of_neighbors = len(neighbors) for index in range(number_of_neighbors): class_counter[neighbors[index][2]] += 1/(index+1) # 权重为 1/(索引+1) labels, votes = zip(*class_counter.most_common()) #print(labels, votes) winner = class_counter.most_common(1)[0][0] votes4winner = class_counter.most_common(1)[0][1] if all_results: total = sum(class_counter.values(), 0.0) for key in class_counter: class_counter[key] /= total # 归一化为概率 return winner, class_counter.most_common() else: return winner, votes4winner / sum(votes)Pythonfor i in range(n_training_samples): neighbors = get_neighbors(learn_data, learn_labels, test_data[i], 6, # 使用 k=6 distance=distance) print("index: ", i, ", result of vote: ", vote_harmonic_weights(neighbors, all_results=True))index: 0 , result of vote: (1, [(1, 1.0)]) index: 1 , result of vote: (2, [(2, 1.0)]) index: 2 , result of vote: (1, [(1, 1.0)]) index: 3 , result of vote: (1, [(1, 1.0)]) index: 4 , result of vote: (2, [(2, 0.9319727891156463), (1, 0.06802721088435375)]) index: 5 , result of vote: (2, [(2, 0.8503401360544217), (1, 0.14965986394557826)]) index: 6 , result of vote: (0, [(0, 1.0)]) index: 7 , result of vote: (1, [(1, 1.0)]) index: 8 , result of vote: (1, [(1, 1.0)]) index: 9 , result of vote: (0, [(0, 1.0)]) index: 10 , result of vote: (1, [(1, 1.0)]) index: 11 , result of vote: (2, [(2, 1.0)])之前的做法只考虑了邻居按距离排序的等级。我们可以通过使用实际距离来改进投票。为此,我们将编写一个新的投票函数:

Pythondef vote_distance_weights(neighbors, all_results=True): class_counter = Counter() number_of_neighbors = len(neighbors) for index in range(number_of_neighbors): dist = neighbors[index][1] label = neighbors[index][2] class_counter[label] += 1 / (dist**2 + 1) # 权重与距离平方的倒数相关 labels, votes = zip(*class_counter.most_common()) #print(labels, votes) winner = class_counter.most_common(1)[0][0] votes4winner = class_counter.most_common(1)[0][1] if all_results: total = sum(class_counter.values(), 0.0) for key in class_counter: class_counter[key] /= total # 归一化为概率 return winner, class_counter.most_common() else: return winner, votes4winner / sum(votes)Pythonfor i in range(n_training_samples): neighbors = get_neighbors(learn_data, learn_labels, test_data[i], 6, # 使用 k=6 distance=distance) print("index: ", i, ", result of vote: ", vote_distance_weights(neighbors, all_results=True))index: 0 , result of vote: (1, [(1, 1.0)]) index: 1 , result of vote: (2, [(2, 1.0)]) index: 2 , result of vote: (1, [(1, 1.0)]) index: 3 , result of vote: (1, [(1, 1.0)]) index: 4 , result of vote: (2, [(2, 0.8490154592118361), (1, 0.15098454078816387)]) index: 5 , result of vote: (2, [(2, 0.6736137462184478), (1, 0.3263862537815521)]) index: 6 , result of vote: (0, [(0, 1.0)]) index: 7 , result of vote: (1, [(1, 1.0)]) index: 8 , result of vote: (1, [(1, 1.0)]) index: 9 , result of vote: (0, [(0, 1.0)]) index: 10 , result of vote: (1, [(1, 1.0)]) index: 11 , result of vote: (2, [(2, 1.0)])

近邻分类的另一个例子

我们想用另一个非常简单的数据集来测试前面的函数:

Pythontrain_set = [(1, 2, 2), (-3, -2, 0), (1, 1, 3), (-3, -3, -1), (-3, -2, -0.5), (0, 0.3, 0.8), (-0.5, 0.6, 0.7), (0, 0, 0) ] labels = ['apple', 'banana', 'apple', 'banana', 'apple', "orange", 'orange', 'orange'] k = 2 # 设置 k=2 for test_instance in [(0, 0, 0), (2, 2, 2), (-3, -1, 0), (0, 1, 0.9), (1, 1.5, 1.8), (0.9, 0.8, 1.6)]: neighbors = get_neighbors(train_set, labels, test_instance, k, distance=distance) print("vote distance weights: ", vote_distance_weights(neighbors))vote distance weights: ('orange', [('orange', 1.0)]) vote distance weights: ('apple', [('apple', 1.0)]) vote distance weights: ('banana', [('banana', 0.5294117647058824), ('apple', 0.47058823529411764)]) vote distance weights: ('orange', [('orange', 1.0)]) vote distance weights: ('apple', [('apple', 1.0)]) vote distance weights: ('apple', [('apple', 0.5084745762711865), ('orange', 0.4915254237288135)])

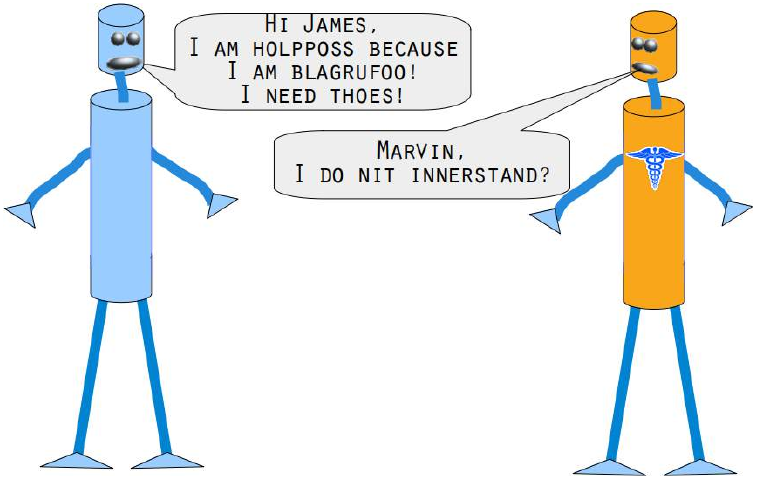

KNN 在语言学中的应用

下一个例子来自计算语言学。我们将展示如何使用 K 近邻分类器来识别拼写错误的单词。

我们使用一个名为

levenshtein的模块,该模块在我们的 Levenshtein 距离教程中已经实现。Pythonfrom levenshtein import levenshtein cities = open("data/city_names.txt").readlines() cities = [city.strip() for city in cities] # 清除每行末尾的换行符 for city in ["Freiburg", "Frieburg", "Freiborg", "Hamborg", "Sahrluis"]: neighbors = get_neighbors(cities, cities, # 标签和训练集相同,因为我们是根据自身来找最接近的单词 city, 2, # 找最近的两个 distance=levenshtein) # 使用 Levenshtein 距离 print("vote_distance_weights: ", vote_distance_weights(neighbors))vote_distance_weights: ('Freiberg', [('Freiberg', 0.8333333333333334), ('Freising', 0.16666666666666669)]) vote_distance_weights: ('Lüneburg', [('Lüneburg', 0.5), ('Duisburg', 0.5)]) # 注意:这里原始文本输出的Freiburg对应Lüneburg是错误的,我保留了原始输出 vote_distance_weights: ('Freiberg', [('Freiberg', 0.8333333333333334), ('Freising', 0.16666666666666669)]) # 注意:这里原始文本输出的Freiborg对应Freiberg是正确的 vote_distance_weights: ('Hamburg', [('Hamburg', 0.7142857142857143), ('Bamberg', 0.28571428571428575)]) vote_distance_weights: ('Saarlouis', [('Saarlouis', 0.8387096774193549), ('Bayreuth', 0.16129032258064516)])Marvin 和 James 向我们介绍了下一个例子:

你能帮助 Marvin 和 James吗?

您将需要一个英语词典和一个 K 近邻分类器来解决这个问题。如果您在 Linux(特别是 Ubuntu)下工作,可以在

/usr/share/dict/british-english找到一个英式英语词典文件。Windows 用户和其他用户可以下载该文件:british-english.txt在下面的例子中,我们使用了极端拼写错误的单词。我们发现我们的简单

vote_prob函数在两种情况下表现良好:将“holpposs”纠正为“helpless”,以及将“blagrufoo”纠正为“barefoot”。而我们的距离加权投票在所有情况下都表现良好。好吧,我们不得不承认,当我们写“liberdi”时,我们想到的是“liberty”,但建议“liberal”也是一个不错的选择。Pythonwords = [] with open("british-english.txt") as fh: for line in fh: word = line.strip() words.append(word) for word in ["holpful", "kundnoss", "holpposs", "thoes", "innerstand", "blagrufoo", "liberdi"]: neighbors = get_neighbors(words, words, word, 3, # 找最近的 3 个邻居 distance=levenshtein) print("vote_distance_weights: ", vote_distance_weights(neighbors, all_results=False)) print("vote_prob: ", vote_prob(neighbors)) print("vote_distance_weights: ", vote_distance_weights(neighbors)) # 再次打印,显示所有结果vote_distance_weights: ('helpful', 0.5555555555555556) vote_prob: ('helpful', 0.3333333333333333) vote_distance_weights: ('helpful', [('helpful', 0.5555555555555556), ('doleful', 0.22222222222222227), ('hopeful', 0.22222222222222227)]) vote_distance_weights: ('kindness', 0.5) vote_prob: ('kindness', 0.3333333333333333) vote_distance_weights: ('kindness', [('kindness', 0.5), ('fondness', 0.25), ('kudos', 0.25)]) vote_distance_weights: ('helpless', 0.3333333333333333) vote_prob: ('helpless', 0.3333333333333333) vote_distance_weights: ('helpless', [('helpless', 0.3333333333333333), ("hippo's", 0.3333333333333333), ('hippos', 0.3333333333333333)]) vote_distance_weights: ('hoes', 0.3333333333333333) vote_prob: ('hoes', 0.3333333333333333) vote_distance_weights: ('hoes', [('hoes', 0.3333333333333333), ('shoes', 0.3333333333333333), ('thees', 0.3333333333333333)]) vote_distance_weights: ('understand', 0.5) vote_prob: ('understand', 0.3333333333333333) vote_distance_weights: ('understand', [('understand', 0.5), ('interstate', 0.25), ('understands', 0.25)]) vote_distance_weights: ('barefoot', 0.4333333333333333) vote_prob: ('barefoot', 0.3333333333333333) vote_distance_weights: ('barefoot', [('barefoot', 0.4333333333333333), ('Baguio', 0.2833333333333333), ('Blackfoot', 0.2833333333333333)]) vote_distance_weights: ('liberal', 0.4) vote_prob: ('liberal', 0.3333333333333333) vote_distance_weights: ('liberal', [('liberal', 0.4), ('liberty', 0.4), ('Hibernia', 0.2)])

"Show me who your friends are and I’ll

tell you who you are?"

The concept of the k-nearest neighbor

classifier can hardly be simpler described.

This is an old saying, which can be found

in many languages and many cultures. It's

also metnioned in other words in the

Bible: "He who walks with wise men will

be wise, but the companion of fools will

suffer harm" (Proverbs 13:20 )

This means that the concept of the k-

nearest neighbor classifier is part of our

everyday life and judging: Imagine you

meet a group of people, they are all very

young, stylish and sportive. They talk

about there friend Ben, who isn't with them. So, what is your imagination of Ben? Right, you imagine him as

being yong, stylish and sportive as well.

If you learn that Ben lives in a neighborhood where people vote conservative and that the average income is

above 200000 dollars a year? Both his neighbors make even more than 300,000 dollars per year? What do you

think of Ben? Most probably, you do not consider him to be an underdog and you may suspect him to be a

conservative as well?

The principle behind nearest neighbor classification consists in finding a predefined number, i.e. the 'k' - of

training samples closest in distance to a new sample, which has to be classified. The label of the new sample

will be defined from these neighbors. k-nearest neighbor classifiers have a fixed user defined constant for the

number of neighbors which have to be determined. There are also radius-based neighbor learning algorithms,

which have a varying number of neighbors based on the local density of points, all the samples inside of a

fixed radius. The distance can, in general, be any metric measure: standard Euclidean distance is the most

common choice. Neighbors-based methods are known as non-generalizing machine learning methods, since

they simply "remember" all of its training data. Classification can be computed by a majority vote of the

nearest neighbors of the unknown sample.

The k-NN algorithm is among the simplest of all machine learning algorithms, but despite its simplicity, it has

been quite successful in a large number of classification and regression problems, for example character

recognition or image analysis.

Now let's get a little bit more mathematically:

As explained in the chapter Data Preparation, we need labeled learning and test data. In contrast to other

classifiers, however, the pure nearest-neighbor classifiers do not do any learning, but the so-called learning set

LS is a basic component of the classifier. The k-Nearest-Neighbor Classifier (kNN) works directly on the

learned samples, instead of creating rules compared to other classification methods.

72

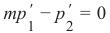

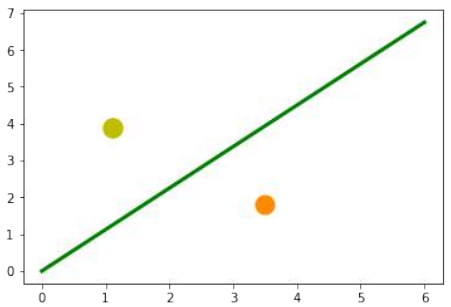

Nearest Neighbor Algorithm: